highlights:

- flex attention: better compilation of blockmask creation, better support for dynamic shapes

- cuDNN SDPA: fixes for memory layout

- CUDA 12.6

- Python 3.13

- MaskedTensor memory leak fix

heat rises. "the top is cool enough to drink" implies "everything below it is colder".

drinking from the bottom lets us access safe temperatures earlier and before the whole cup cools.

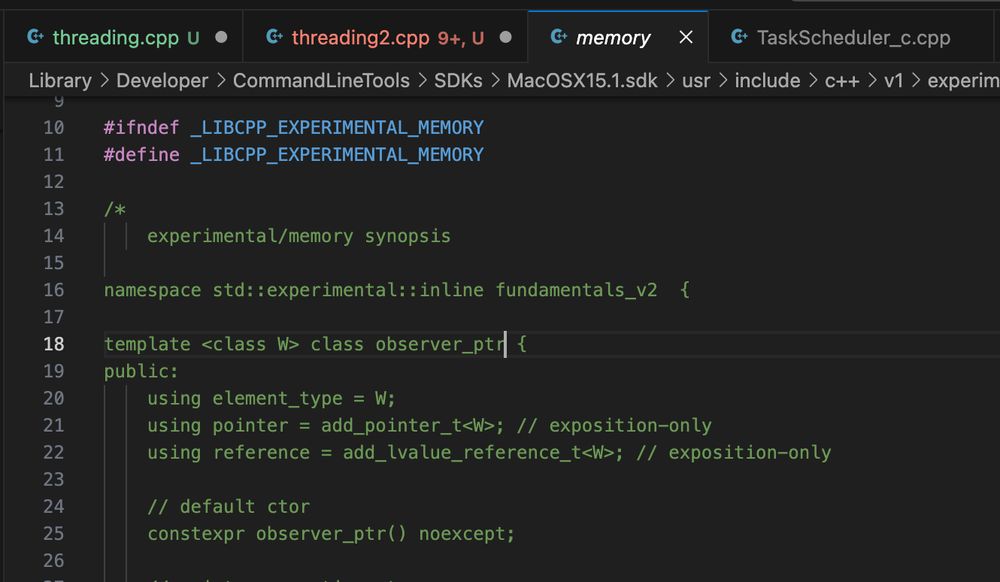

so we don't have to click terminate a hundred times

github.com/microsoft/vs...

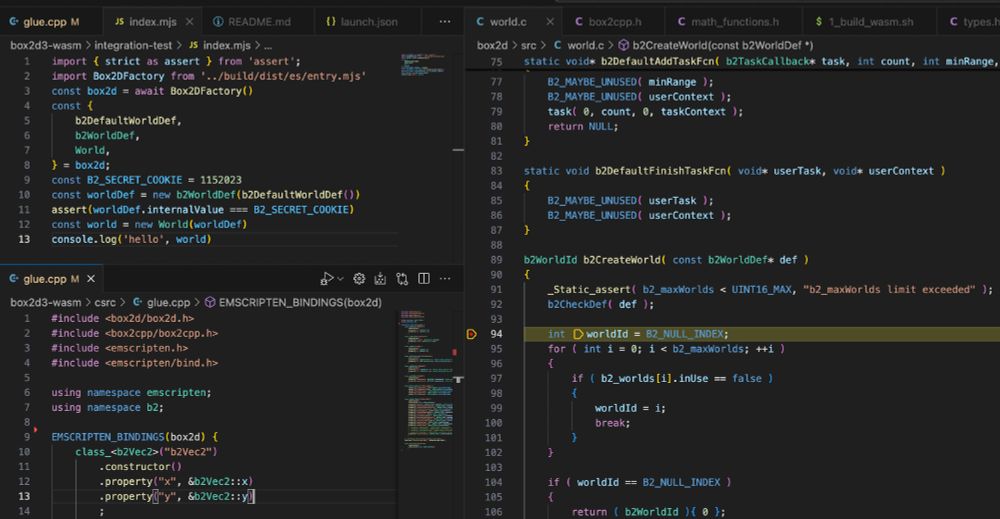

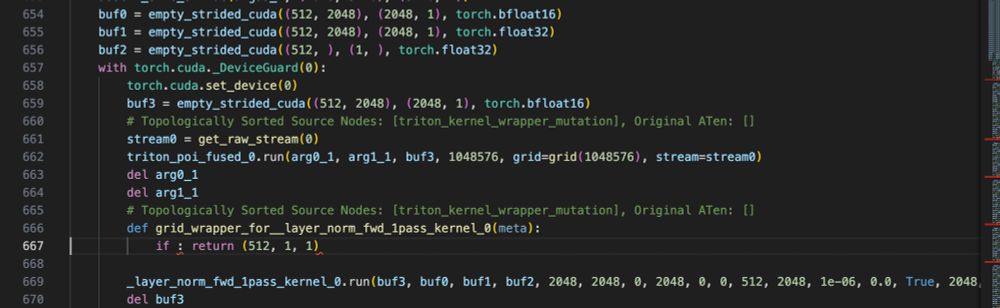

it could be fixed by omitting the guard altogether, or perhaps eliding the function grid wrapper entirely

github.com/pytorch/pyto...

www.youtube.com/watch?v=tuDA...

what's the safest stage to be KO'd on?

Great Bay looks alright if you're a confident swimmer…

counting flops makes your compiled model slower.

benchmark your model first, count flops after.

github.com/pytorch/pyto...

pytorch.org/docs/stable/...

if you run inference at a smaller size than you trained on, the probability distribution is sharper than it was in training. you can scale the logits to compensate.

left = orig

right = entropy-scaled.

arxiv.org/abs/2306.08645
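
a rough sketch of the idea in plain Python. the factor `sqrt(log(n_infer) / log(n_train))` is my reading of the linked paper, not something stated in this post, so treat it as an assumption. the token counts and the random logits are hypothetical, chosen only to show the direction of the effect.

```python
import math
import random


def entropy_scale(n_infer: int, n_train: int) -> float:
    """Assumed compensation factor: sqrt(log n_infer / log n_train)."""
    return math.sqrt(math.log(n_infer) / math.log(n_train))


def softmax(logits, scale=1.0):
    # Subtract the max before exponentiating for numerical stability.
    peak = max(logits)
    exps = [math.exp(scale * (x - peak)) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    # Shannon entropy in nats; zero-probability entries contribute nothing.
    return -sum(p * math.log(p) for p in probs if p > 0.0)


rng = random.Random(0)
n_train, n_infer = 4096, 1024  # hypothetical token counts
logits = [rng.gauss(0.0, 2.0) for _ in range(n_infer)]

scale = entropy_scale(n_infer, n_train)  # below 1 when inferencing smaller
h_orig = entropy(softmax(logits))
h_scaled = entropy(softmax(logits, scale))
print(f"scale={scale:.4f} entropy orig={h_orig:.3f} scaled={h_scaled:.3f}")
```

a scale below 1 flattens the softmax, so the scaled entropy comes out higher than the unscaled one. at `n_infer == n_train` the factor is exactly 1 and nothing changes.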

arxiv.org/abs/2310.07702

convolution padding creates an edge, which nested convolutions look for to understand position.

arxiv.org/abs/2101.12322
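
a toy 1D sketch of the effect, not the paper's experiment. the constant signal, the 3-tap sum kernel, and the helper `conv1d_same` are all hypothetical. the input carries no position information at all, yet zero padding makes the border outputs differ, and a second layer spreads that difference one step further inward.

```python
def conv1d_same(signal, kernel):
    """Cross-correlate with zero padding so output length equals input length.

    Assumes an odd-length kernel.
    """
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


signal = [1.0] * 8        # constant input: every position looks identical
kernel = [1.0, 1.0, 1.0]  # plain 3-tap sum, no learned weights

layer1 = conv1d_same(signal, kernel)  # only the two border outputs differ
layer2 = conv1d_same(layer1, kernel)  # the difference now reaches one step inward

print(layer1)
print(layer2)
```

after two layers, an output's value tells you whether it sits 0, 1, or 2+ steps from the border. deeper stacks extend that range by one step per layer with this kernel size.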
