Introducing our new non-saturated (for at least the coming week? 😉) benchmark:

✨BigO(Bench)✨ - Can LLMs Generate Code with Controlled Time and Space Complexity?

Check out the details below! 👇

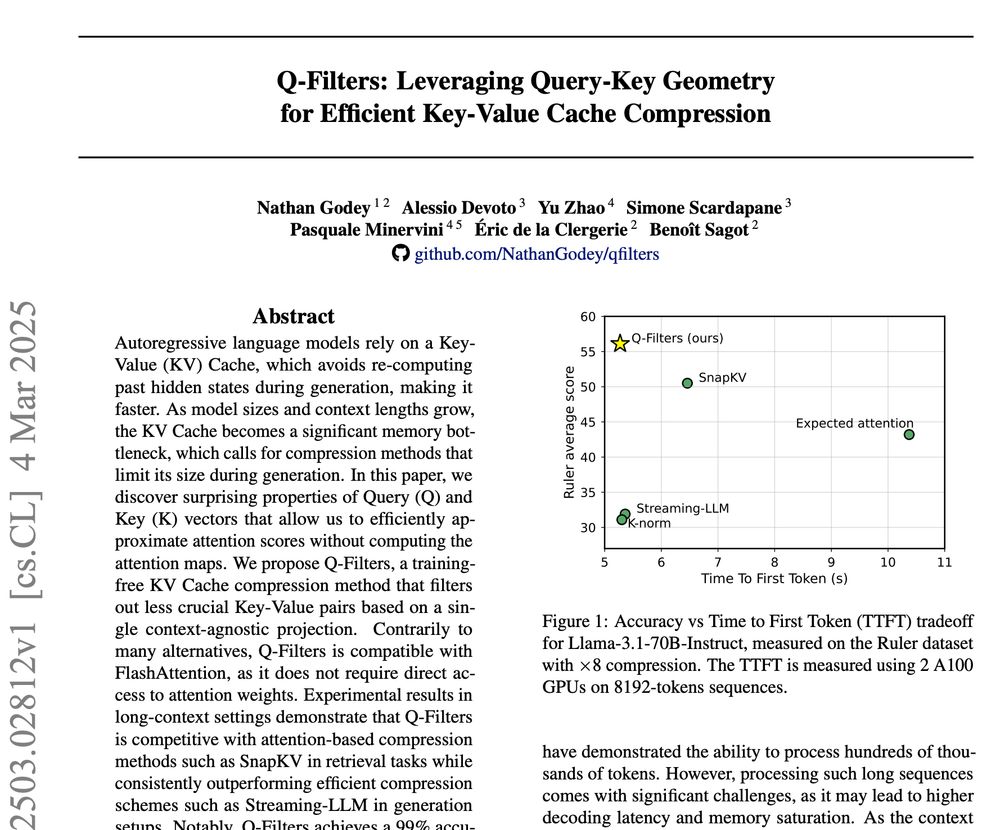

We introduce Q-Filters, a training-free method for efficient KV Cache compression!

It is compatible with FlashAttention and can compress the cache during generation, which is particularly useful for reasoning models ⚡

TLDR: we make Streaming-LLM smarter using the geometry of attention
