fig (aka:[phil])

@bad-example.com

art and transistors, they/them plant-mom building community infra

🌌 constellation.microcosm.blue

🚒 relay.fire.hose.cam jetstream.fire.hose.cam

🛸 UFOs.microcosm.blue

🎇 spacedust.microcosm.blue

💥 notifications.microcosm.blue

🛰️ slingshot.microcosm.blue

hmm something must be happening rn

November 5, 2025 at 2:50 AM

yeah, seems likely. also apparently i haven't been watching closely in the last >1 week

November 1, 2025 at 10:17 PM

i have never seen mentions in bsky posts outpace *likes* globally. spam wave or somehow organic?

this is a log scale. normally bsky likes are 10x(!) more frequent than mentions in posts, and this peak just hit >200x the normal baseline mention rate

November 1, 2025 at 9:56 PM
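rough arithmetic for why a spike like this crosses the likes line, assuming likes held near their usual rate during the spike (the post doesn't say). with $m$ as the baseline mention rate:

$$
\text{likes} \approx 10m, \qquad \text{mentions}_{\text{peak}} > 200m
\quad\Longrightarrow\quad
\frac{\text{mentions}_{\text{peak}}}{\text{likes}} > \frac{200m}{10m} = 20
$$

so under that assumption mentions were running at more than 20x likes, a bit over one decade above them on the log-scale chart.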

zooming way in on network metrics from the host, there was a very short network throughput drop. i wonder if maybe it was just some provider networking problem leading to PDS connections being dropped?

along with the gap in metrics scraping, this makes a network problem my top suspect.

November 1, 2025 at 9:07 PM

follow-up: it's a weird one. relay logs are out of retention unfortunately, so i'm not sure if i'll get to a full root cause.

the server did not reboot, the relay container stayed up. dmesg on the host has nothing since oct 25

the number of connected pds hosts dropped abruptly, and there are some gaps in metrics

November 1, 2025 at 9:01 PM

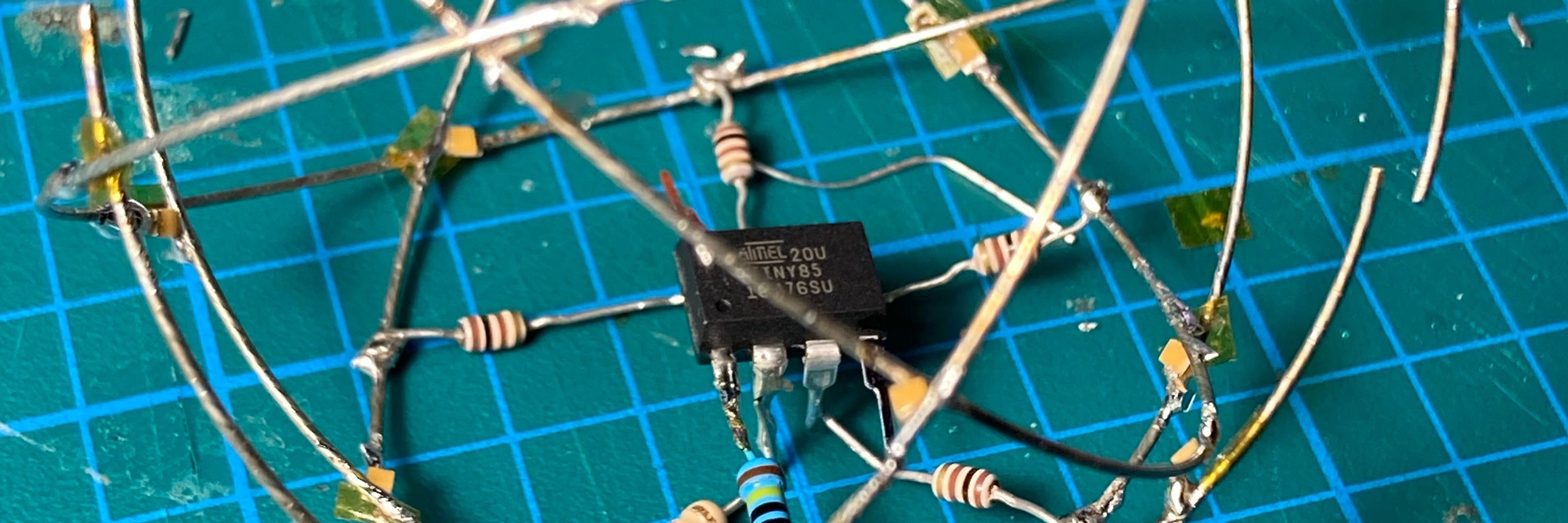

how it started

how it’s going

![skywatch link to pull request by mozzius: “[not for merge] Update xcode” 3h ago

skywatch reply 8mins ago: “Merged PR into main at bluesky-social/social-app”

the title was updated before the merge but it’s a bit deemphasized by being link text: “Update Xcode in CI to 26”

the github pr number is #9215](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:hdhoaan3xa3jiuq4fg4mefid/bafkreicby3m2zrifezvr7b653ejusz64m3rhtn67q7od5vor3u6uagt2om@jpeg)

October 16, 2025 at 2:53 PM

@tangled.org push server text getting all the fancy features ✨

![a git push output in terminal. highlighted area says

> Create a PR pointing to main

> https://tangled.org/microcosm.blue/repo-stream/compare/main...disk

the opening line is: Welcome to Tangled's hosted knot! 🧶

full text:

repo-stream »‽git push -u origin disk (git)-[disk]-

Welcome to Tangled's hosted knot! 🧶

Enumerating objects: 31, done.

Counting objects: 100% (31/31), done.

Delta compression using up to 10 threads

Compressing objects: 100% (24/24), done.

Writing objects: 100% (25/25), 11.42 KiB | 5.71 MiB/s, done.

Total 25 (delta 13), reused 0 (delta 0), pack-reused 0

remote:

remote: Create a PR pointing to main

remote: https://tangled.org/microcosm.blue/repo-stream/compare/main...disk

remote:

To tangled.sh:microcosm.blue/repo-stream

* [new branch] disk -> disk

branch 'disk' set up to track 'origin/disk'.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:hdhoaan3xa3jiuq4fg4mefid/bafkreib46rti2jdb3d242obredw63ho5tgbwm7c74glwyu5ishfiwt72oa@jpeg)

October 13, 2025 at 11:02 PM

toil. churn. thanks a lot, @example.com

(ty for real person who gave me a heads up *hours* after the change happened)

October 12, 2025 at 5:22 PM

oh that’s 9 billion atproto backlinks indexed!

![This server has indexed 9,030,923,833 links between 1,713,360,256 targets and sources from 20,216,394 identities over 5 days.

in small text: (indexing new records in real time, backfill coming soon!)

the text above is cropped: […] the firehose, searching for anything that looks like a link.

Links are indexed by the target they point at, the collection the record came from, and the JSON path to the link in that record.

text below: You're welcome to use this public instance! Please do not[…]

the address bar shows “constellation.microcosm.blue”](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:hdhoaan3xa3jiuq4fg4mefid/bafkreih77nrcnlyjubnpvqggorvpz5rnbr6pc3zjec2lc5cu257krxccpm@jpeg)

October 9, 2025 at 3:07 AM
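a rough sketch of the indexing idea described in the screenshot: walk each record, pick out strings that look like links, and key them by target, collection, and json path. this is a hypothetical python illustration, not constellation's code; the link prefixes, the `.key` / `[]` path notation, and the helper names are all assumptions.

```python
from typing import Any, Iterator

# hypothetical prefixes; the real indexer's notion of "looks like a link" may differ
LINK_PREFIXES = ("at://", "did:", "https://", "http://")


def looks_like_link(s: str) -> bool:
    return s.startswith(LINK_PREFIXES)


def find_links(value: Any, path: str = "") -> Iterator[tuple[str, str]]:
    """yield (json_path, target) for every string in a record that looks like a link."""
    if isinstance(value, dict):
        for key, child in value.items():
            yield from find_links(child, f"{path}.{key}")
    elif isinstance(value, list):
        for child in value:
            # array positions collapse to "[]" so every element shares one path
            yield from find_links(child, f"{path}[]")
    elif isinstance(value, str) and looks_like_link(value):
        yield path, value


def index_record(source_did: str, collection: str, record: dict) -> list[tuple[str, str, str, str]]:
    """one entry per link: (target, collection, json_path, source identity)."""
    return [(target, collection, path, source_did) for path, target in find_links(record)]
```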

ah! suspicion confirmed.

floating-point numbers are not supported in the atproto dag-cbor data model, so these records are invalid. put floats in strings and parse them out where needed :(

unfortunately current pds and relay still admit some kinds of invalid data (allowed in cbor but not dag-cbor)

October 6, 2025 at 4:12 PM
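a minimal python sketch of the "floats in strings" workaround on the writing side, assuming records are assembled as plain dicts/lists before being encoded. `has_floats` and `floats_to_strings` are hypothetical helper names, and the example fields are made up.

```python
from typing import Any


def has_floats(value: Any) -> bool:
    """true if a float appears anywhere in a json-like record (these won't fit dag-cbor)."""
    if isinstance(value, float):
        return True
    if isinstance(value, dict):
        return any(has_floats(v) for v in value.values())
    if isinstance(value, list):
        return any(has_floats(v) for v in value)
    return False


def floats_to_strings(value: Any) -> Any:
    """replace every float with its string form; ints, bools, and strings pass through."""
    if isinstance(value, float):
        return repr(value)  # shortest string that round-trips through float()
    if isinstance(value, dict):
        return {k: floats_to_strings(v) for k, v in value.items()}
    if isinstance(value, list):
        return [floats_to_strings(v) for v in value]
    return value


record = {"lat": 51.5, "lon": -0.12, "zoom": 12}
safe = floats_to_strings(record)  # {"lat": "51.5", "lon": "-0.12", "zoom": 12}
lat = float(safe["lat"])          # parse back out where needed
```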

i think this is every common potential many-to-many record type in atproto right now

you can just do things etc

October 3, 2025 at 6:40 PM

ate up about 29GiB of constellation's disk to add the reverse mapping from interned link-target ids, but now we have actual many-to-many link traversal!

just a casual friday morning making my raspberry pi walk and write 1.7 billion database keys

(updated api docs in progress)

!["target iter at 1675000000" followed by "invalid iter, are we done repairing?" "repair iterator done." "repair finished: Ok(false)"

logs from cassiopeia main[185644]](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:hdhoaan3xa3jiuq4fg4mefid/bafkreif5efreaf34clwumauuanqmatsoz5ijlkcy4ovycgs6pwvwtlaady@jpeg)

October 3, 2025 at 5:20 PM
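a toy in-memory sketch of why a reverse mapping from interned ids matters for many-to-many traversal, with made-up names throughout (`LinkIndex`, the `.list` / `.subject` paths). if link targets are interned to integer ids, following a join record (like a list item that links to both a list and a subject) lands on an id, and you need id → target to turn that back into something usable. constellation keeps its index in an on-disk database, not python dicts, so treat this as an illustration of the idea only.

```python
from collections import defaultdict


class LinkIndex:
    """hypothetical in-memory link index with interned target ids."""

    def __init__(self):
        self.target_to_id = {}                # forward: target string -> interned id
        self.id_to_target = []                # reverse: interned id -> target string
        self.backlinks = defaultdict(list)    # target id -> [(source uri, json path)]
        self.outlinks = defaultdict(list)     # source uri -> [(json path, target id)]

    def intern(self, target: str) -> int:
        if target not in self.target_to_id:
            self.target_to_id[target] = len(self.id_to_target)
            self.id_to_target.append(target)
        return self.target_to_id[target]

    def add_record(self, source: str, links: list) -> None:
        """links: (json_path, target) pairs found in one record."""
        for path, target in links:
            tid = self.intern(target)
            self.backlinks[tid].append((source, path))
            self.outlinks[source].append((path, tid))

    def many_to_many(self, target: str, via_path: str, other_path: str) -> list:
        """targets reached through join records that link to `target` at `via_path`."""
        tid = self.target_to_id.get(target)
        if tid is None:
            return []
        found = []
        for source, path in self.backlinks[tid]:
            if path != via_path:
                continue
            for p, other_id in self.outlinks[source]:
                if p == other_path:
                    # the reverse mapping turns the interned id back into a usable target
                    found.append(self.id_to_target[other_id])
        return found
```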

it's a little silly but the little "try it!" widget in the microcosm docs now works with multi-valued keys

October 3, 2025 at 4:44 PM

We've got some good-first-issues for @microcosm.blue, come get em!!

October 3, 2025 at 3:16 PM

ok wait the fact that @mackuba.eu 's indie-pds-only relay now has enough activity in the stream to actually chart on this full-firehose tool ---- pds self-hosting really is taking off

October 1, 2025 at 1:50 PM

22 bad vs 65 ok, it's not a good ratio from people i follow :(

September 30, 2025 at 8:22 PM

ha that's a new one for me, thanks atproto

September 30, 2025 at 2:14 PM

plc.wtf reference mirror now has a place for PLC experiments:

- experimental.plc.wtf

experiment #1: upstream op forwarding. submit a PLC op and Allegedly will forward it upstream to `plc.directory` for you.

everything stays in sync, but you don't have to touch the official directory at all

September 29, 2025 at 8:28 PM
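a minimal python sketch of the forwarding step, assuming the upstream takes a PLC operation as a JSON POST to `/{did}` on `plc.directory` (the submission endpoint in the did:plc spec). `forward_request` is a hypothetical name and this isn't Allegedly's code; it only builds the request and checks the DID's shape, leaving validation of the operation itself to the upstream directory.

```python
import json
import re
import urllib.request

UPSTREAM = "https://plc.directory"
# did:plc identifiers are "did:plc:" followed by 24 lowercase base32 characters (a-z, 2-7)
DID_PLC = re.compile(r"did:plc:[a-z2-7]{24}")


def forward_request(did: str, op: dict, upstream: str = UPSTREAM) -> urllib.request.Request:
    """build (but don't send) the upstream POST for a submitted PLC operation."""
    if not DID_PLC.fullmatch(did):
        raise ValueError(f"not a did:plc identifier: {did!r}")
    return urllib.request.Request(
        f"{upstream}/{did}",
        data=json.dumps(op).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# sending it would be: urllib.request.urlopen(forward_request(did, op))
```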

plc.wtf has caught up

disk space is maxed for this node size, and the next size up is ~100 euros/mo (pushing it for my available upcloud creditssss)

pushed a little defence on the /_health endpoint to protect against upstream DoS, but otherwise it's probably going to stay under tmux over the weekend. oh well.

September 26, 2025 at 5:07 PM
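the post doesn't say what the defence is. one common approach, sketched here purely as an assumption: cache the upstream check for a short TTL so a burst of `/_health` requests turns into at most one upstream call per interval. `CachedHealth`, `check_upstream`, and the 10-second default are all hypothetical.

```python
import time
from typing import Callable, Optional


class CachedHealth:
    """answer health checks from a cached upstream result for `ttl` seconds."""

    def __init__(self, check_upstream: Callable[[], bool], ttl: float = 10.0,
                 clock: Callable[[], float] = time.monotonic):
        self.check_upstream = check_upstream
        self.ttl = ttl
        self.clock = clock  # injectable so the cache can be tested without sleeping
        self._checked_at: Optional[float] = None
        self._ok = False

    def status(self) -> bool:
        now = self.clock()
        # only hit upstream when there's no cached result or it has expired
        if self._checked_at is None or now - self._checked_at >= self.ttl:
            self._ok = bool(self.check_upstream())
            self._checked_at = now
        return self._ok
```

under concurrent requests, a few could slip through to upstream at the moment the cache expires; a lock around the refresh would prevent that.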

oh that was unexpected. the db has 105GiB and i was estimating under 60.

is postgres pretty space-inefficient or

September 26, 2025 at 2:05 PM

anyway, i updated the readme to make the placeholder values more obvious, and moved the sensitive bits into an environment variable instead of passing them as a cli arg.

September 23, 2025 at 2:27 PM
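a small python sketch of that split, with a hypothetical variable name (`MIRROR_DB_URL`) and flag (`--upstream`); the readme has the real ones. non-secret settings stay as cli flags, while the database url (which may embed a password) comes from the environment, so it doesn't show up in process listings like `ps`.

```python
import argparse
import os
from typing import Mapping, Optional, Sequence

DB_ENV_VAR = "MIRROR_DB_URL"  # hypothetical name


def load_config(argv: Optional[Sequence[str]] = None,
                environ: Mapping[str, str] = os.environ) -> tuple:
    parser = argparse.ArgumentParser(description="plc mirror (sketch)")
    # non-secret settings can stay as cli flags
    parser.add_argument("--upstream", default="https://plc.directory")
    args = parser.parse_args(argv)
    # the secret comes from the environment instead of argv
    db_url = environ.get(DB_ENV_VAR)
    if not db_url:
        parser.error(f"{DB_ENV_VAR} must be set")  # prints usage and exits with status 2
    return args.upstream, db_url
```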

ok so this is ~working

- polls upstream PLC directory's /export

- writes it to a local PLC reference server's postgres

- proxies all GETs to the local PLC

it's running the PLC reference code as a mirror!

September 23, 2025 at 12:00 AM
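a minimal sketch of the polling step, assuming the timestamp-cursor form of `/export` (newline-delimited JSON operations, paged with `after` set to the last seen `createdAt` and `count` as page size). `fetch` and `write_ops` are injected so the sketch stays self-contained; writing into the reference server's postgres isn't shown, and none of this is the mirror's actual code.

```python
import json
from typing import Callable, Optional
from urllib.parse import urlencode

UPSTREAM = "https://plc.directory"


def export_url(after: Optional[str] = None, count: int = 1000, upstream: str = UPSTREAM) -> str:
    params = [("count", count)]
    if after is not None:
        params.append(("after", after))  # resume from the last createdAt already seen
    return f"{upstream}/export?{urlencode(params)}"


def parse_export_page(body: str, after: Optional[str]) -> tuple:
    """one JSON operation per line; the cursor advances to the last createdAt."""
    ops = [json.loads(line) for line in body.splitlines() if line.strip()]
    return ops, (ops[-1]["createdAt"] if ops else after)


def poll_once(fetch: Callable[[str], str], write_ops: Callable[[list], None],
              after: Optional[str] = None) -> tuple:
    """one polling step: fetch a page, hand it to the local store, return the new cursor."""
    ops, next_after = parse_export_page(fetch(export_url(after)), after)
    if ops:
        write_ops(ops)  # e.g. insert into the local PLC reference server's postgres (not shown)
    return next_after, len(ops)
```

a real mirror would run this in a loop, sleep when a page comes back empty or short, and may need to handle operations that share a `createdAt` across a page boundary.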
