Artidoro Pagnoni

@artidoro.bsky.social

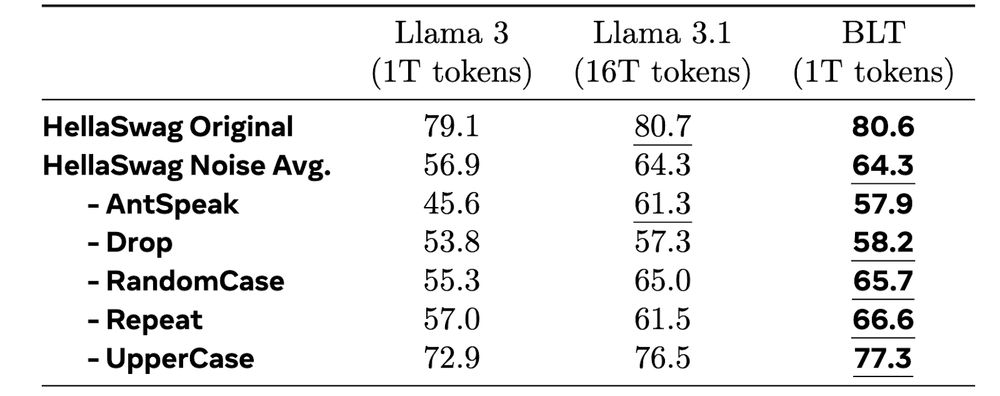

6/ Without direct modeling of bytes, Llama 3.1, trained on 16x more data, still lags behind on some of these tasks!

December 13, 2024 at 4:53 PM

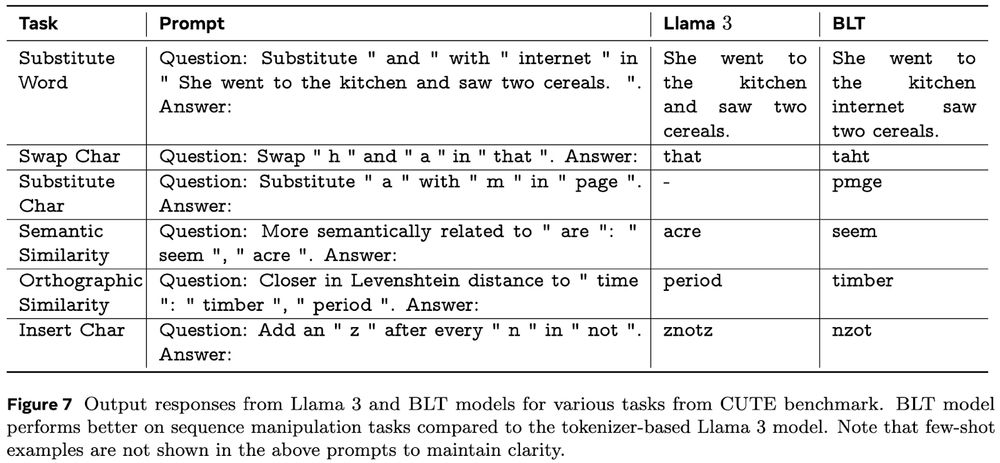

5/ 💪 But where BLT excels is at modeling the long tail of data, with better robustness to noise and improved understanding and manipulation of substrings.

December 13, 2024 at 4:53 PM

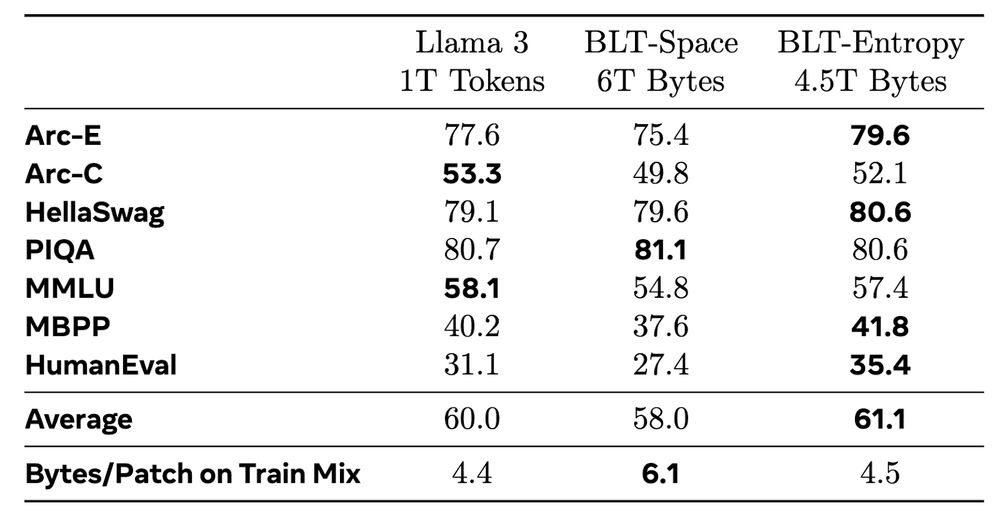

4/ ⚡ Parameter-matched training runs up to 8B params and 4T bytes show that BLT performs well on standard benchmarks, and can trade minor losses in evaluation metrics for up to 50% reductions in inference FLOPs.

December 13, 2024 at 4:53 PM

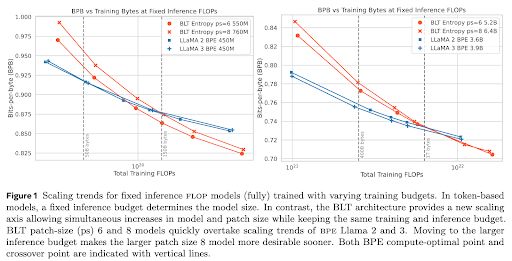

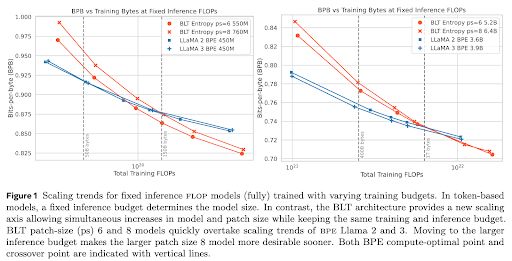

3/ 📈 BLT unlocks a new scaling dimension by simultaneously growing patch and model size without changing training or inference cost. Patch length scaling quickly overtakes BPE transformer scaling, and the trends look even better at larger scales!

December 13, 2024 at 4:53 PM
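
To make the "same cost, bigger model" claim concrete, here is a rough back-of-the-envelope sketch. It assumes the common approximation of about 2 FLOPs per parameter per forward step, counts only the large latent transformer (the small byte-level local models are ignored), and uses made-up parameter counts and patch sizes. The function name `latent_flops_per_byte` is hypothetical and not from the paper or code release.

```python
def latent_flops_per_byte(params: float, avg_patch_bytes: float) -> float:
    """Approximate forward FLOPs per byte spent in the latent transformer.

    Assumption: one latent step per patch costs about 2 * params FLOPs,
    and that cost is amortized over the bytes in the patch.
    """
    if avg_patch_bytes <= 0:
        raise ValueError("avg_patch_bytes must be positive")
    return 2.0 * params / avg_patch_bytes


# Illustrative numbers only: doubling both model size and patch size
# leaves the per-byte cost of the latent transformer unchanged.
small = latent_flops_per_byte(params=4e9, avg_patch_bytes=4.0)
large = latent_flops_per_byte(params=8e9, avg_patch_bytes=8.0)

# Keeping the model fixed and doubling the patch size halves that cost.
same_model_longer_patches = latent_flops_per_byte(params=4e9, avg_patch_bytes=8.0)
```

Under these assumptions, patch size acts as a second knob next to model size: longer patches buy back the compute that a larger latent model would otherwise cost.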

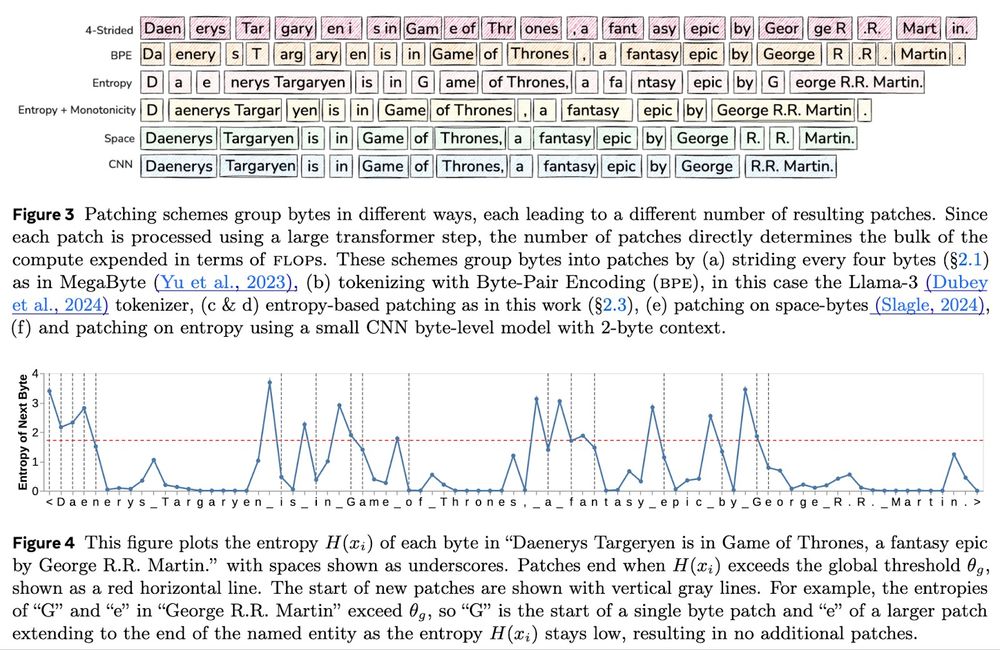

2/ 🧩 Entropy patching dynamically adjusts patch sizes based on data complexity, allowing BLT to allocate more compute to hard predictions and use larger patches for simpler ones. This results in fewer, larger processing steps to cover the same data.

December 13, 2024 at 4:53 PM
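
A minimal sketch of the idea behind entropy patching, assuming per-byte next-byte entropies are already available (in practice they would come from a small byte-level language model, which is not shown here). A new patch starts wherever the entropy exceeds a threshold. The function names, the threshold value, and the entropy numbers are all illustrative assumptions, not the released implementation.

```python
import math
from typing import List, Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """Entropy in bits of a next-byte probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0.0)


def entropy_patches(
    data: bytes, entropies: Sequence[float], threshold: float
) -> List[bytes]:
    """Split `data` into patches.

    entropies[i] is the (assumed) uncertainty of predicting data[i] from
    the bytes before it. A new patch begins at byte i when that
    uncertainty is above `threshold`; byte 0 always opens the first patch.
    """
    if len(data) != len(entropies):
        raise ValueError("need exactly one entropy value per byte")
    patches: List[bytes] = []
    start = 0
    for i in range(1, len(data)):
        if entropies[i] > threshold:
            patches.append(data[start:i])
            start = i
    if data:
        patches.append(data[start:])
    return patches


# Made-up entropies: high at the start of each word, low inside it.
text = b"hello world"
ents = [3.0, 0.5, 0.4, 0.3, 0.2, 2.5, 2.8, 0.6, 0.4, 0.3, 0.2]
patches = entropy_patches(text, ents, threshold=2.0)
```

With these made-up values, the predictable interior of each word is absorbed into one long patch, while the hard-to-predict positions each open a new one, so 11 bytes are covered in 3 steps.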

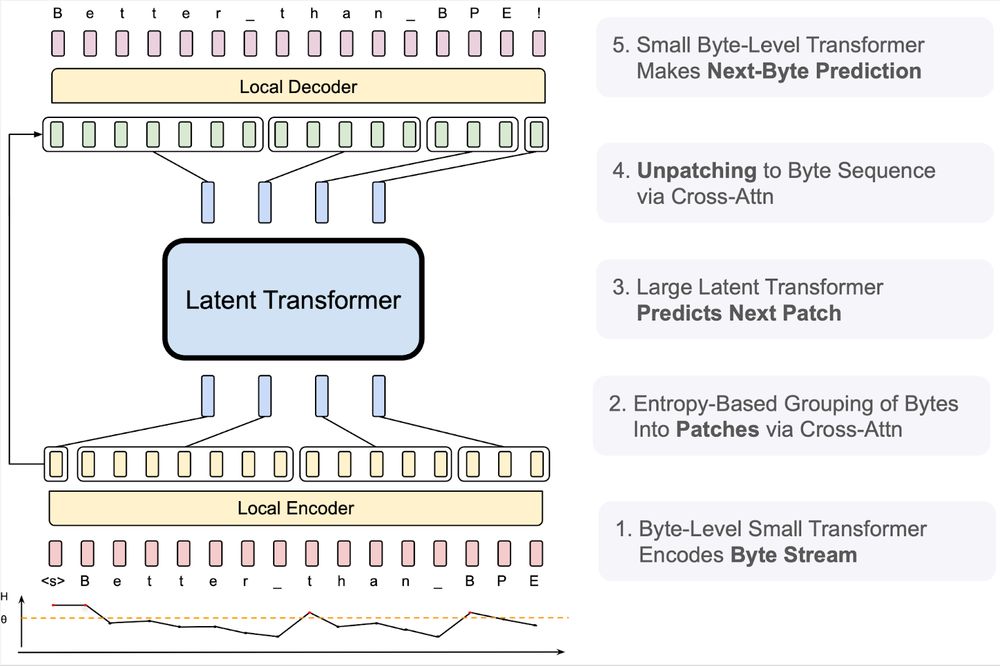

1/ 🧱 BLT encodes bytes into dynamic patches using lightweight local models and processes them with a large latent transformer. Think of it as a transformer sandwich! 🥪

December 13, 2024 at 4:53 PM
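
The data flow of the "sandwich" can be sketched as a toy pipeline: many bytes go in, a smaller number of patch vectors pass through the expensive middle layer, and one output per byte comes back out. Everything below is a stand-in chosen for illustration: the embedding is a fixed arithmetic function, the local encoder uses mean pooling, and the "latent transformer" is a causal running average. None of these are the actual BLT components, and all function names are hypothetical.

```python
from typing import List

Vector = List[float]


def embed_byte(b: int, dim: int = 4) -> Vector:
    """Hypothetical fixed byte embedding (a real model would learn this)."""
    return [((b * (k + 1)) % 17) / 17.0 for k in range(dim)]


def local_encoder(patches: List[bytes], dim: int = 4) -> List[Vector]:
    """Bottom slice: pool the byte embeddings of each non-empty patch into one vector."""
    pooled = []
    for patch in patches:
        vecs = [embed_byte(b, dim) for b in patch]
        pooled.append([sum(col) / len(vecs) for col in zip(*vecs)])
    return pooled


def latent_transformer(patch_vecs: List[Vector]) -> List[Vector]:
    """Filling: one step per patch. Stand-in: causal running mean over patches."""
    out: List[Vector] = []
    running = None
    for count, vec in enumerate(patch_vecs, start=1):
        running = list(vec) if running is None else [r + x for r, x in zip(running, vec)]
        out.append([r / count for r in running])
    return out


def local_decoder(patches: List[bytes], latent_vecs: List[Vector]) -> List[Vector]:
    """Top slice: emit one vector per byte, conditioned on its patch's latent vector."""
    return [list(vec) for patch, vec in zip(patches, latent_vecs) for _ in patch]


patches = [b"hello", b" ", b"world"]
encoded = local_encoder(patches)       # 3 patch vectors from 11 bytes
latent = latent_transformer(encoded)   # 3 steps of the large model
decoded = local_decoder(patches, latent)  # back to 11 per-byte outputs
```

The point of the sketch is the step count: the costly middle layer runs once per patch rather than once per byte, while only the cheap outer layers touch every byte.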

🚀 Introducing the Byte Latent Transformer (BLT) – an LLM architecture that scales better than Llama 3 using patches instead of tokens 🤯

Paper 📄 dl.fbaipublicfiles.com/blt/BLT__Pat...

Code 🛠️ github.com/facebookrese...

December 13, 2024 at 4:53 PM