arkilpatel.github.io

We study the reasoning chains of DeepSeek-R1 across a variety of tasks and find several surprising and interesting phenomena!

Incredible effort by the entire team!

🌐: mcgill-nlp.github.io/thoughtology/

Check out our new Web Agents ∩ Safety benchmark: SafeArena!

Paper: arxiv.org/abs/2503.04957

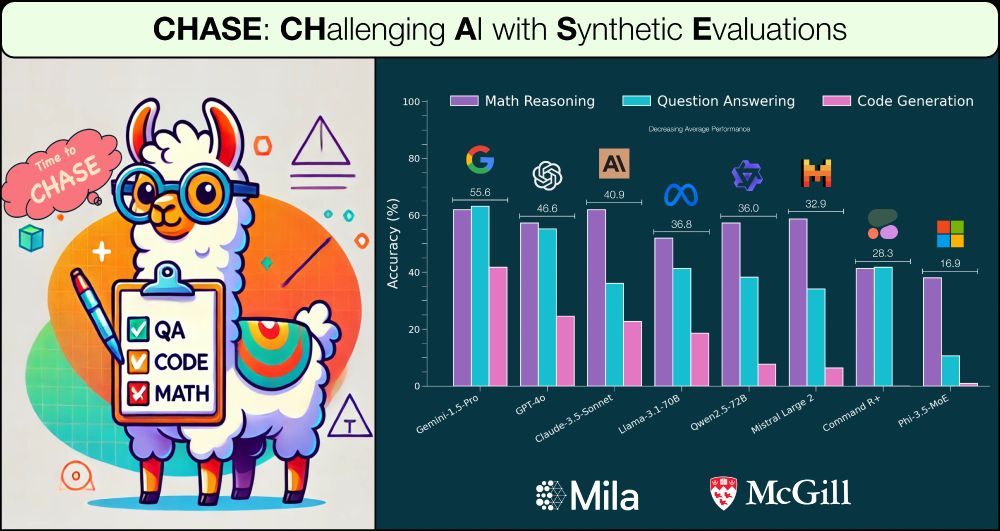

1. Bottom-up creation of complex context by "hiding" components of the reasoning process

2. Decomposing the generation pipeline into simpler, "soft-verifiable" sub-tasks

1. 𝐂𝐇𝐀𝐒𝐄-𝐐𝐀: Long-context question answering

2. 𝐂𝐇𝐀𝐒𝐄-𝐂𝐨𝐝𝐞: Repo-level code generation

3. 𝐂𝐇𝐀𝐒𝐄-𝐌𝐚𝐭𝐡: Math reasoning

Work w/ fantastic advisors Dima Bahdanau and @sivareddyg.bsky.social

Thread 🧵:
