And Arize AX is layered across TheFork's stack, with tracing helping drive tangible wins: lower latency, clearer cost signals, and faster iteration.

This course covers the latest in:

🟣 Agent architectures and frameworks

🟣 Tools & MCP

🟣 Agentic RAG

🟣 Agent evaluation

🟣 Post-deployment and monitoring

Each module has a lab.

In this session focused on meta-evaluation, you’ll learn advanced techniques -- like using high-temperature stress tests to detect prompt ambiguity or unstable reasoning.
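
As a rough illustration of the stress-test idea, the sketch below re-runs a judge several times at a high temperature and reports how often it lands on its most common label. Low agreement on a given input hints at an ambiguous prompt or unstable reasoning. `toy_judge` is a hypothetical stand-in that simulates label flips; it is not an Arize or Phoenix API, and in practice it would be replaced by a real LLM judge call.

```python
import random
from collections import Counter


def toy_judge(text: str, temperature: float, rng: random.Random) -> str:
    """Hypothetical stand-in for an LLM judge (assumption, not a real API).

    Clear inputs always get the same label; inputs containing "maybe"
    flip labels more often as temperature rises.
    """
    base = "correct" if "paris" in text.lower() else "incorrect"
    ambiguous = "maybe" in text.lower()
    flip_prob = min(1.0, temperature * 0.4) if ambiguous else 0.0
    if rng.random() < flip_prob:
        return "incorrect" if base == "correct" else "correct"
    return base


def agreement_rate(judge, text: str, temperature: float,
                   n_runs: int = 20, seed: int = 0) -> float:
    """Fraction of runs that agree with the most common label."""
    rng = random.Random(seed)
    labels = [judge(text, temperature, rng) for _ in range(n_runs)]
    top_count = Counter(labels).most_common(1)[0][1]
    return top_count / n_runs


if __name__ == "__main__":
    for text in ["Paris is the capital of France.", "Maybe Paris?"]:
        rate = agreement_rate(toy_judge, text, temperature=1.5)
        print(f"{text!r}: agreement={rate:.2f}")
```

Inputs whose agreement rate drops well below 1.0 at high temperature are the ones worth reviewing first.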

Learn about the prompt playground:

arize.com/docs/phoeni...

Sign up for Phoenix Cloud:

app.phoenix.arize.com/

Release notes:

github.com/Arize-ai/ph...

Agent Graph gives you a node-based visual map of your agent workflows, so you can instantly see execution paths, identify failure points, spot self-looping behavior, and more!

arize.com/docs/ax/obs...

Learn more:

arize.com/docs/ax/obs...

New blog + notebook outlines how to create self-improving agent security: arize.com/blog/how-to...

From the floor of #MSIgnite, a new notebook + blog walks through a concrete content safety evaluation example.

📓 Explore: arize.com/blog/evalua...

You can run experiments in the UI, track every iteration in the Prompt Hub, and test new versions in the Prompt Playground.

Great for teams who want collaboration + governance without managing a giant text blob in Git.

Prompt Learning is framework-agnostic — LangChain, CrewAI, Mastra, AutoGen, vector DBs, custom stacks, whatever.

Just add tracing (OpenInference), export the traces, and optimize.

Start tracing: arize.com/docs/ax/obs...

run → evaluate → improve → repeat.

They both use meta-prompting and trace-level evals so the optimizer can learn from application behavior — not just static prompts.

Under the hood, both systems are applying RL-style feedback loops.
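
The run → evaluate → improve → repeat loop can be sketched generically as below. This is a minimal illustration under stated assumptions, not the actual Prompt Learning or GEPA implementation: `optimize_prompt` and the three `toy_*` callbacks are hypothetical names. In a real setup, `run` would execute the application and collect traces, `evaluate` would apply trace-level evals, and `improve` would be a meta-prompting call to an LLM.

```python
from typing import Callable, List, Tuple


def optimize_prompt(
    prompt: str,
    run: Callable[[str], List[str]],
    evaluate: Callable[[List[str]], Tuple[float, str]],
    improve: Callable[[str, str], str],
    max_rounds: int = 3,
    target: float = 1.0,
) -> Tuple[str, List[Tuple[str, float]]]:
    """Iterate run -> evaluate -> improve, keeping the best-scoring prompt."""
    history: List[Tuple[str, float]] = []
    best_prompt, best_score = prompt, float("-inf")
    for _ in range(max_rounds):
        outputs = run(prompt)                 # run
        score, feedback = evaluate(outputs)   # evaluate
        history.append((prompt, score))
        if score > best_score:
            best_prompt, best_score = prompt, score
        if score >= target:
            break
        prompt = improve(prompt, feedback)    # improve, then repeat
    return best_prompt, history


# Toy callbacks (assumptions for illustration only).
def toy_run(prompt: str) -> List[str]:
    return ["json" if "Respond in JSON" in prompt else "text"]


def toy_evaluate(outputs: List[str]) -> Tuple[float, str]:
    score = sum(o == "json" for o in outputs) / len(outputs)
    feedback = "" if score == 1.0 else "Respond in JSON."
    return score, feedback


def toy_improve(prompt: str, feedback: str) -> str:
    return f"{prompt} {feedback}".strip()


if __name__ == "__main__":
    best, history = optimize_prompt(
        "Summarize the ticket.", toy_run, toy_evaluate, toy_improve
    )
    print(best)
    print(history)
```

Keeping the evaluator's textual feedback alongside the numeric score is what lets the improve step act on application behavior rather than on the prompt text alone.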

Since we launched Prompt Learning in July, the most common question we get is:

“Prompt Learning or GEPA — which should I use?”

We break down the results below.

With our new Session Annotations, you can now add notes directly from the Session Page, eliminating the need to switch between views or lose context.

Learn how to design your eval from scratch -- including what to measure, which model to use, how to prompt effectively, and how to improve your eval.

Aparna has a session on building & evaluating self-improving agents with Arize, Databricks MLflow, & Mosaic AI.

Info here: www.databricks.com/dataaisummit...

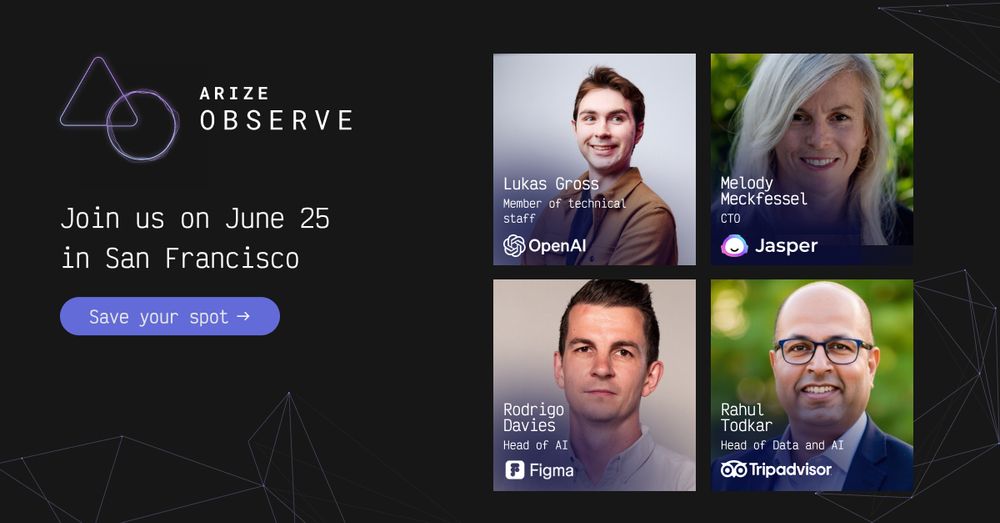

Shack15 in SF on June 25. Builders, researchers, and leaders from @anthropic.com @microsoft.com @llamaindex.bsky.social (+ many more).

Get tickets: arize.com/observe-2025

Join us May 19. Space is limited!

Register: lu.ma/d6mo5zxs

Hear from the people building the next generation of AI systems. It's a conference by engineers, for engineers.

Most of our speakers are on the site. 👀

Register: arize.com/observe-2025/

Apply here: docs.google.com/forms/d/e/1F...
