@profsophie.bsky.social! Also, Boston is quite nice :)

We introduce Temporal Feature Analysis (TFA) to separate what's inferred from context vs. novel information. A big effort led by @ekdeepl.bsky.social, @sumedh-hindupur.bsky.social, @canrager.bsky.social!

Our Temporal Feature Analyzer discovers contextual features in LLMs that detect event boundaries, parse complex grammar, and represent ICL patterns.

🗓️ October 10th, Room 518C

🔹 Invited talks from @sarah-nlp.bsky.social, John Hewitt, @amuuueller.bsky.social, and @kmahowald.bsky.social

🔹 Paper presentations and posters

🔹 Closing roundtable discussion

Join us in Montréal! @colmweb.org

We’ve added more recent work and more immediately actionable directions for future work. Now published in Computational Linguistics!

![What do representations tell us about a system? Image of a mouse with a scope showing a vector of activity patterns, and a neural network with a vector of unit activity patterns. Common analyses of neural representations: Encoding models (relating activity to task features): drawing of an arrow from a trace saying [on_____on____] to a neuron and spike train. Comparing models via neural predictivity: comparing two neural networks by their R^2 to mouse brain activity. RSA: assessing brain-brain or model-brain correspondence using representational dissimilarity matrices](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:e6ewzleebkdi2y2bxhjxoknt/bafkreiav2io2ska33o4kizf57co5bboqyyfdpnozo2gxsicrfr5l7qzjcq@jpeg)

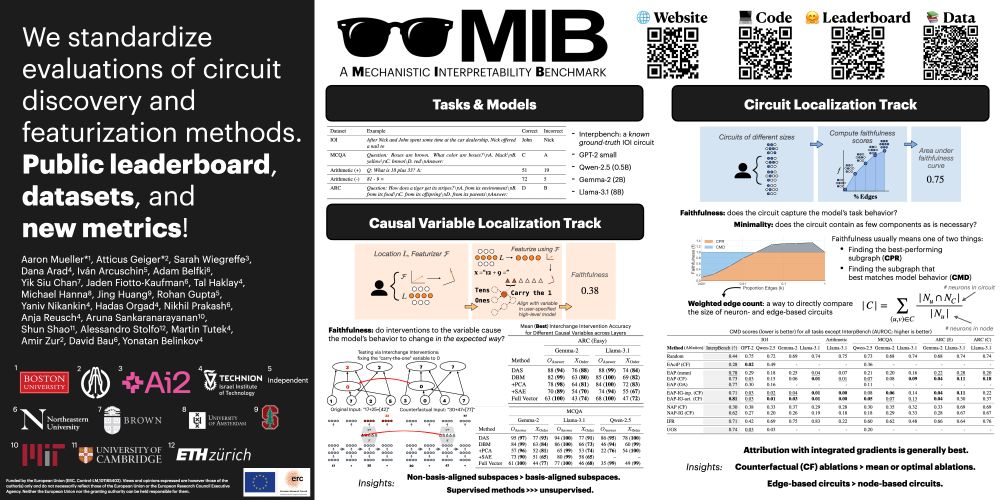

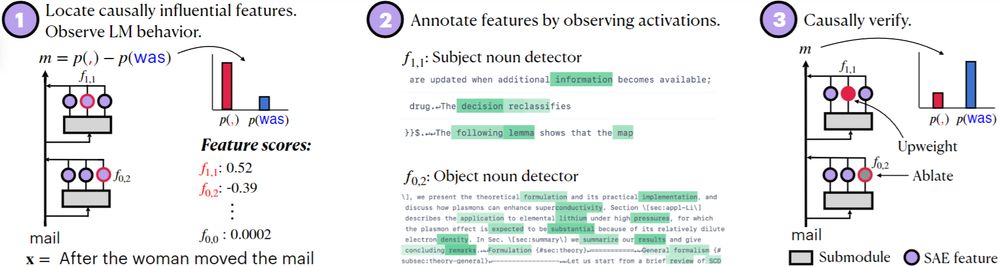

We're planning to keep this a living benchmark; come by and share your ideas/hot takes!

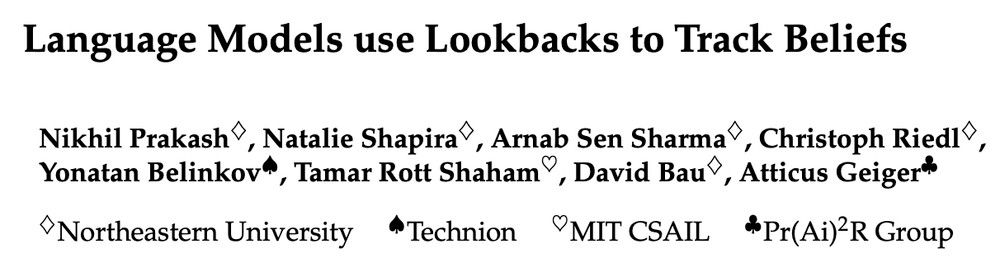

70B/405B LLMs use double pointers, akin to C programmers' double (**) pointers. They show up when the LLM is "knowing what Sally knows Ann knows", i.e., Theory of Mind.

bsky.app/profile/nik...

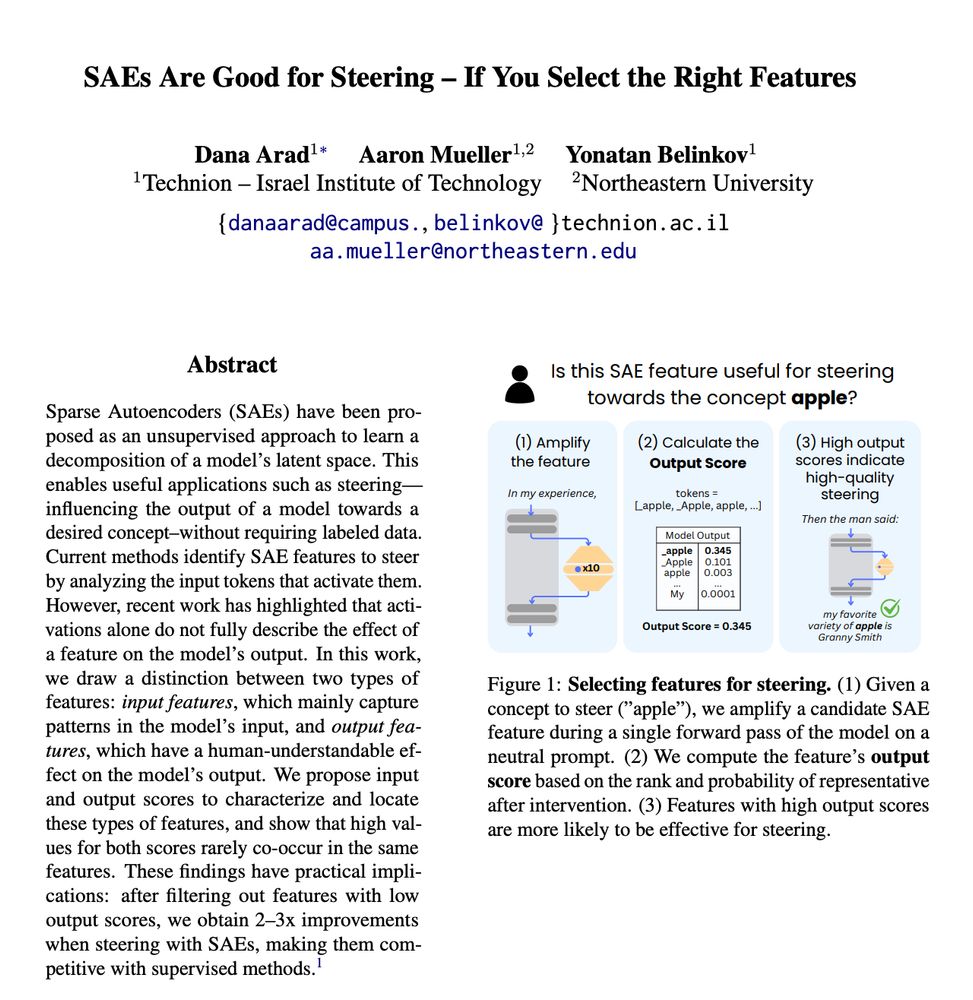

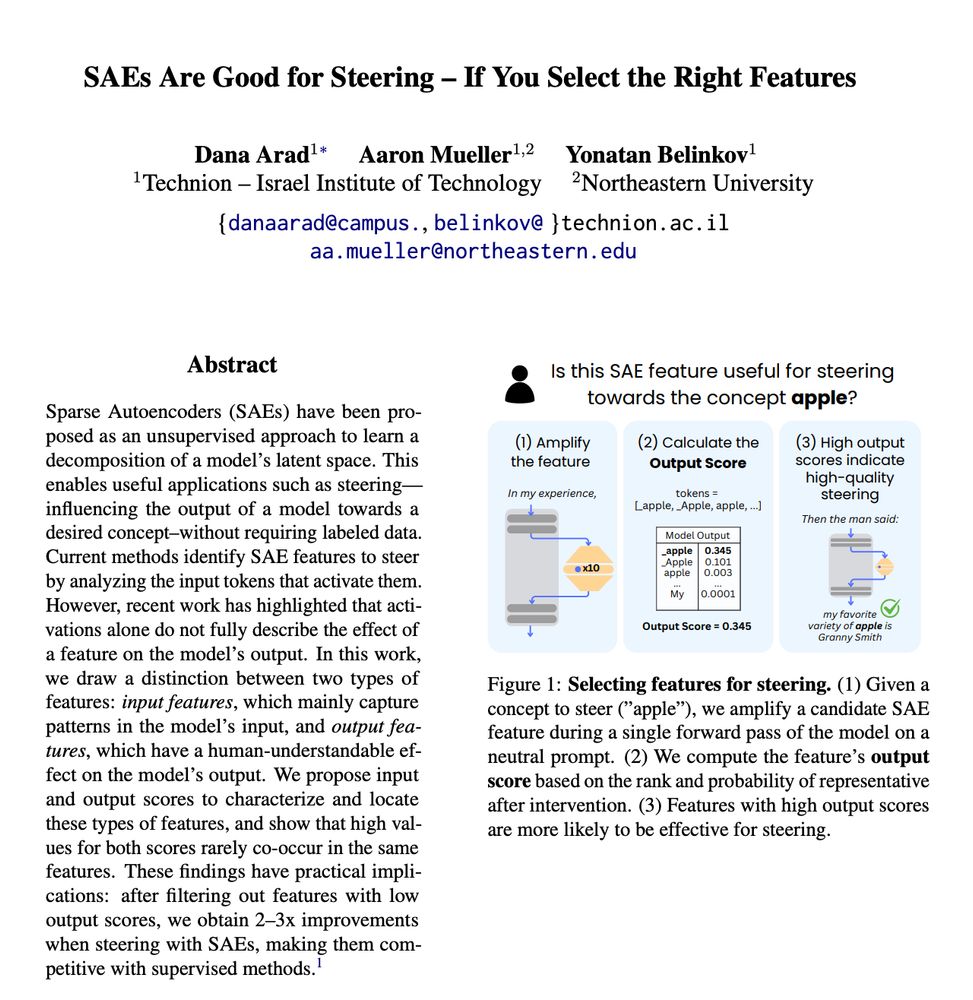

In new work led by @danaarad.bsky.social, we find that this problem largely disappears if you select the right features!

Check out our new preprint - "SAEs Are Good for Steering - If You Select the Right Features" 🧵

We propose 😎 𝗠𝗜𝗕: a 𝗠echanistic 𝗜nterpretability 𝗕enchmark!

We propose a way to find 𝗽𝗼𝘀𝗶𝘁𝗶𝗼𝗻-𝗮𝘄𝗮𝗿𝗲 circuits. (Highlight: using LLMs to help us create multi-token causal abstractions!)

We propose a method to automatically find position-aware circuits, improving faithfulness while keeping circuits compact. 🧵👇

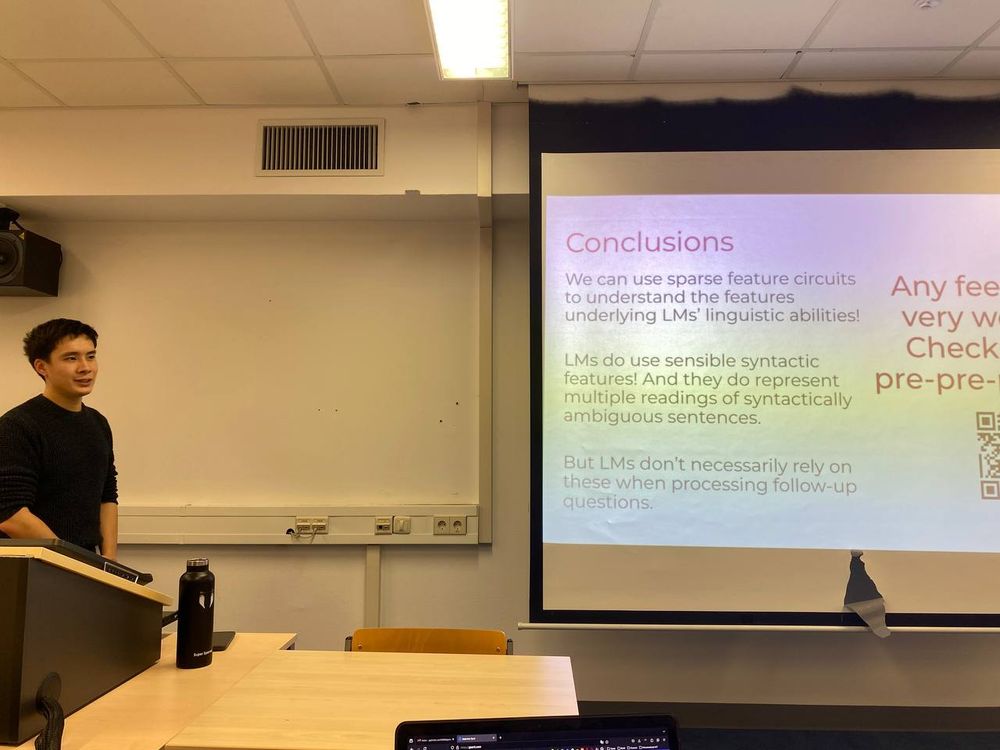

In a new paper, @amuuueller.bsky.social and I use mech interp tools to study how LMs process structurally ambiguous sentences. We show LMs rely on both syntactic & spurious features! 1/10

Highlighting some findings of BabyLM

Architectures & training objectives matter a lot (submissions that changed them got the highest scores)

alphaxiv.org/pdf/2410.24159

🤖

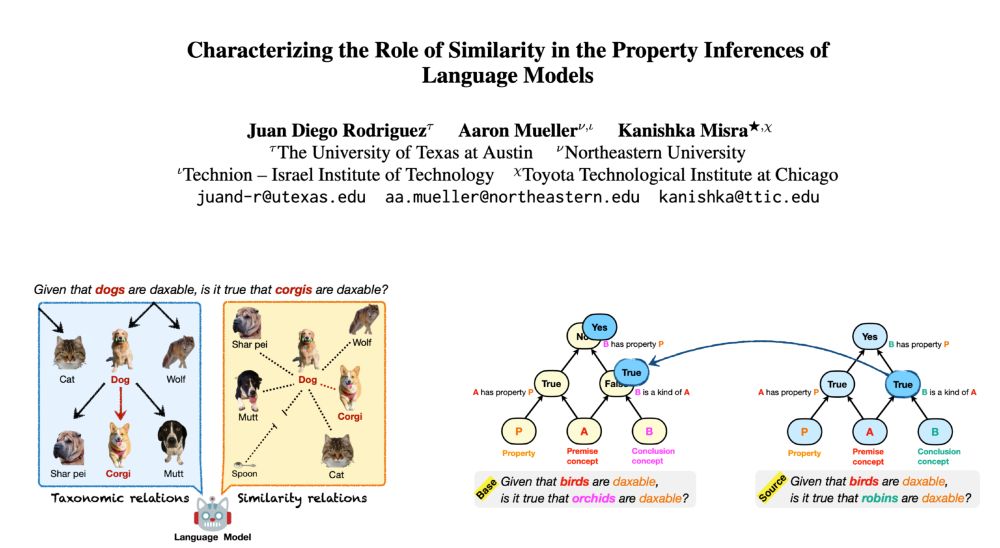

🌟 New paper with my fantastic collaborators @amuuueller.bsky.social and @kanishka.bsky.social