Now, the objective has a CrossEnt(pi_ref, pi_theta) term. KL(P,Q) = CrossEnt(P,Q) - Entropy(P), so this is related to KL(pi_ref, pi_theta), but note the direction is reversed relative to the KL(pi_theta, pi_ref) in the original objective.

6/

![**Mathematical formulation of an alternative KL estimator and its gradient.**

The alternative KL estimator is defined as:

\[

\widehat{KL}_\theta(x) := \frac{\pi_{\text{ref}}(x)}{\pi_\theta(x)} + \log \pi_\theta(x) - \log \pi_{\text{ref}}(x) - 1

\]

From this, it follows that:

\[

\mathbb{E}_{x \sim \pi_{\text{old}}} [\nabla_\theta \widehat{KL}_\theta(x)] = \nabla_\theta \mathbb{E}_{x \sim \pi_{\text{old}}} \left[ \frac{\pi_{\text{ref}}(x)}{\pi_\theta(x)} + \log \pi_\theta(x) \right]

\]

Approximating when \(\pi_{\text{old}} \approx \pi_\theta\), we get:

\[

\approx \nabla_\theta \mathbb{E}_{x \sim \pi_{\text{ref}}} [-\log \pi_\theta(x)] + \nabla_\theta \mathbb{E}_{x \sim \pi_{\text{old}}} [\log \pi_\theta(x)]

\]

The annotated explanation in purple states that this results in:

\[

\text{CrossEnt}(\pi_{\text{ref}}, \pi_\theta) - \text{CrossEnt}(\pi_{\text{old}}, \pi_\theta)

\]

where \(\text{CrossEnt}(\cdot, \cdot)\) denotes cross-entropy.

----

alt text generated by ChatGPT](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:qcllgepvb7hg5gsxvkgoe37i/bafkreie24fwpuxelcccq3gj42af2tlh27dujfqagohzsslelof25g5u5cm@jpeg)
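
A quick numeric check of the claim above, as a sketch rather than anything from the GRPO code: it assumes a softmax policy over three outcomes with made-up logits and reference probabilities, takes pi_old = pi_theta, and computes expectations exactly by enumeration. All function names are hypothetical.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def score(pi, x):
    # Gradient of log pi(x) w.r.t. the softmax logits: one_hot(x) - pi.
    return [(1.0 if j == x else 0.0) - pi[j] for j in range(len(pi))]

def expected_alt_estimator_grad(logits, pi_ref):
    # E_{x ~ pi_theta}[ grad_theta KL_hat(x) ] for
    # KL_hat(x) = pi_ref(x)/pi_theta(x) + log pi_theta(x) - log pi_ref(x) - 1,
    # whose per-sample gradient is (1 - pi_ref(x)/pi_theta(x)) * score(x).
    pi = softmax(logits)
    k = len(pi)
    grad = [0.0] * k
    for x in range(k):
        s = score(pi, x)
        weight = 1.0 - pi_ref[x] / pi[x]
        for j in range(k):
            grad[j] += pi[x] * weight * s[j]
    return grad

def cross_entropy_ref_to_theta(logits, pi_ref):
    # CrossEnt(pi_ref, pi_theta) = -sum_x pi_ref(x) log pi_theta(x)
    pi = softmax(logits)
    return -sum(q * math.log(p) for q, p in zip(pi_ref, pi))

def kl_theta_to_ref(logits, pi_ref):
    # KL(pi_theta, pi_ref), the direction used in the original objective.
    pi = softmax(logits)
    return sum(p * math.log(p / q) for p, q in zip(pi, pi_ref))

def finite_difference_grad(f, logits, pi_ref, eps=1e-6):
    grad = []
    for j in range(len(logits)):
        up = list(logits)
        down = list(logits)
        up[j] += eps
        down[j] -= eps
        grad.append((f(up, pi_ref) - f(down, pi_ref)) / (2 * eps))
    return grad

# Made-up toy values, for illustration only.
logits = [0.5, -0.2, 0.1]
pi_ref = [0.2, 0.3, 0.5]

alt_grad = expected_alt_estimator_grad(logits, pi_ref)
ce_grad = finite_difference_grad(cross_entropy_ref_to_theta, logits, pi_ref)
kl_grad = finite_difference_grad(kl_theta_to_ref, logits, pi_ref)

print("estimator gradient:  ", alt_grad)
print("grad CrossEnt(ref,θ):", ce_grad)
print("grad KL(θ,ref):      ", kl_grad)
```

In this toy setting the first two vectors agree and the third does not, which is the reversed direction showing up numerically.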

More evidence that there's something odd about their approach. And maybe one reason they turned to Schulman's estimator.

5/

![**Mathematical explanation of the standard KL estimator and its gradient.**

The standard KL estimator is defined as:

\[

\widehat{KL}_\theta(x) := \log \pi_\theta(x) - \log \pi_{\text{ref}}(x)

\]

From this, it follows that:

\[

\mathbb{E}_{x \sim \pi_{\text{old}}} [\nabla_\theta \widehat{KL}_\theta(x)] = \nabla_\theta \mathbb{E}_{x \sim \pi_{\text{old}}} [\log \pi_\theta(x)]

\]

Annotated explanation in purple states that this term represents the *negative cross-entropy from \(\pi_{\text{old}}\) to \(\pi_\theta\).*

--

alt text automatically generated by ChatGPT](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:qcllgepvb7hg5gsxvkgoe37i/bafkreiarwn5s236xblh76hunn7pkimyhivh2kcsbn6u7impq74ztond23i@jpeg)
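
A short worked step on why this looks odd, assuming the on-policy case \(\pi_{\text{old}} = \pi_\theta\) (with \(\pi_{\text{old}}\) held fixed while differentiating) and a discrete sample space, so the expectation is a sum:

\[
\nabla_\theta \mathbb{E}_{x \sim \pi_{\text{old}}} [\log \pi_\theta(x)] = \sum_x \pi_{\text{old}}(x) \frac{\nabla_\theta \pi_\theta(x)}{\pi_\theta(x)} = \sum_x \nabla_\theta \pi_\theta(x) = \nabla_\theta 1 = 0
\]

The middle equality is where \(\pi_{\text{old}} = \pi_\theta\) is used. Under that assumption the differentiated estimator contributes no gradient in expectation, whatever \(\pi_{\text{ref}}\) is.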

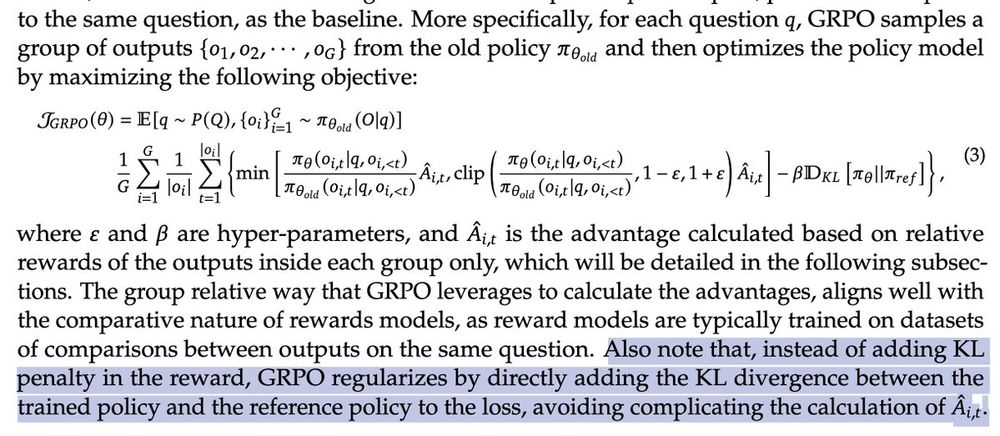

A few people have noticed that GRPO uses a non-standard KL estimator, from a blog post by Schulman.

4/

But the point of policy gradient is that you can't just "differentiate the estimator": you need to account for the gradient of the sampling process.

3/

![**Mathematical formulation of the GRPO (Group-Relative Policy Optimization) objective and its gradient.**

The objective function is defined as:

\[

J_{\text{GRPO}}(\theta) = \mathbb{E}_{x \sim \pi_\theta} [R_\theta(x)] - \beta \cdot \mathbb{E}_{x \sim \pi_{\text{old}}} [\widehat{KL}_\theta(x)]

\]

The gradient of this objective is:

\[

\nabla J_{\text{GRPO}}(\theta) = \nabla_\theta \mathbb{E}_{x \sim \pi_\theta} [R_\theta(x)] - \beta \cdot \mathbb{E}_{x \sim \pi_{\text{old}}} [\nabla_\theta \widehat{KL}_\theta(x)]

\]

Annotated explanations in purple indicate that the first term is *unbiasedly estimated via the group-relative policy gradient*, while the second term is *not* the derivative of the KL divergence, even when \(\pi_{\text{old}} = \pi_\theta\).

----

(alt text automatically generated by ChatGPT)](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:qcllgepvb7hg5gsxvkgoe37i/bafkreiclfcpvnd45wnhxldssr6qhzjl35esmkpvyxax4qxlpgs2meaauqa@jpeg)
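
To make "account for the gradient of the sampling process" concrete, here is a minimal sketch on a toy problem. It assumes a softmax policy over three outcomes, the log-ratio estimator, made-up numbers, and exact enumeration in place of sampling; none of the function names come from the thread.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl(logits, pi_ref):
    # Exact KL(pi_theta, pi_ref).
    pi = softmax(logits)
    return sum(p * math.log(p / q) for p, q in zip(pi, pi_ref))

def score(pi, x):
    # Gradient of log pi(x) w.r.t. the softmax logits: one_hot(x) - pi.
    return [(1.0 if j == x else 0.0) - pi[j] for j in range(len(pi))]

def estimator_only_grad(logits):
    # E_{x ~ pi_theta}[ grad_theta KL_hat(x) ] with the sampling distribution
    # held fixed. pi_ref does not depend on theta, so it drops out here.
    pi = softmax(logits)
    k = len(pi)
    return [sum(pi[x] * score(pi, x)[j] for x in range(k)) for j in range(k)]

def score_function_grad(logits, pi_ref):
    # E_{x ~ pi_theta}[ KL_hat(x) * grad log pi_theta(x) + grad KL_hat(x) ]:
    # the first term is the contribution of the sampling process.
    pi = softmax(logits)
    k = len(pi)
    grad = [0.0] * k
    for x in range(k):
        s = score(pi, x)
        kl_hat = math.log(pi[x] / pi_ref[x])
        for j in range(k):
            grad[j] += pi[x] * (kl_hat * s[j] + s[j])
    return grad

def finite_difference_grad(logits, pi_ref, eps=1e-6):
    grad = []
    for j in range(len(logits)):
        up = list(logits)
        down = list(logits)
        up[j] += eps
        down[j] -= eps
        grad.append((kl(up, pi_ref) - kl(down, pi_ref)) / (2 * eps))
    return grad

# Made-up toy values, for illustration only.
logits = [0.5, -0.2, 0.1]
pi_ref = [0.2, 0.3, 0.5]

naive = estimator_only_grad(logits)
full = score_function_grad(logits, pi_ref)
reference = finite_difference_grad(logits, pi_ref)

print("differentiate the estimator only:", naive)
print("with the sampling term:          ", full)
print("finite-difference KL gradient:   ", reference)
```

In this toy setting only the version with the sampling term matches the finite-difference gradient of the KL.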

One way to implement the KL penalty is to modify the reward, so that E[R~] = E[R] - KL term. Then you can apply standard RL (e.g. policy gradient).

2/

![**Mathematical expression describing KL-penalized reinforcement learning objective.**

The objective function is given by:

\[

J(\theta) = \mathbb{E}_{x \sim \pi_\theta} [R_\theta(x)] - \beta \cdot D_{\text{KL}}(\pi_\theta, \pi_{\text{ref}})

\]

Rewritten as:

\[

J(\theta) = \mathbb{E}_{x \sim \pi_\theta} [\tilde{R}_\theta(x)]

\]

where:

\[

\tilde{R}_\theta(x) := R_\theta(x) - \beta \cdot \widehat{KL}_\theta(x)

\]

\[

\widehat{KL}_\theta(x) := \log \pi_\theta(x) - \log \pi_{\text{ref}}(x)

\]

Annotations in purple indicate that \( R_\theta(x) \) represents the reward, and \( \widehat{KL}_\theta(x) \) is an unbiased estimator of the KL divergence.

----

(alt text generated by ChatGPT)](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:qcllgepvb7hg5gsxvkgoe37i/bafkreigq27u6ammyhocjpimpn5nlf4j5spts4e6bkzafnftmgxqxalhhj4@jpeg)
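
A minimal sketch of the reward modification, assuming a toy problem with three outcomes and made-up probabilities, rewards, and beta. The function names are hypothetical, and expectations are computed exactly by enumeration rather than by sampling.

```python
import math

def kl_hat(x, pi_theta, pi_ref):
    # Per-sample log-ratio estimator: log pi_theta(x) - log pi_ref(x).
    return math.log(pi_theta[x]) - math.log(pi_ref[x])

def modified_reward(x, reward, pi_theta, pi_ref, beta):
    # R~(x) = R(x) - beta * KL_hat(x)
    return reward[x] - beta * kl_hat(x, pi_theta, pi_ref)

# Made-up toy values, for illustration only.
pi_theta = [0.5, 0.3, 0.2]
pi_ref = [0.2, 0.3, 0.5]
reward = [1.0, 0.0, 2.0]
beta = 0.1

# Exact expectations under pi_theta.
expected_reward = sum(p * r for p, r in zip(pi_theta, reward))
exact_kl = sum(p * math.log(p / q) for p, q in zip(pi_theta, pi_ref))
expected_modified = sum(
    pi_theta[x] * modified_reward(x, reward, pi_theta, pi_ref, beta)
    for x in range(len(pi_theta))
)

# The two numbers should agree: E[R~] = E[R] - beta * KL(pi_theta, pi_ref).
print(expected_modified, expected_reward - beta * exact_kl)
```

The agreement relies on x being drawn from pi_theta itself; that is what makes the log-ratio an unbiased estimate of the KL.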

It's a little hard to reason about what this does to the objective. 1/

We're exploring new approaches to building software that draws inferences and makes predictions. See alexlew.net for details & apply at gsas.yale.edu/admissions/ by Dec. 15

Surprisal of 'o' following 'Treatment '? 0.11

Surprisal that title includes surprisal of each title character? Priceless [...I did not know titles could do this]

LLMs serve as *likelihoods*: how likely would the human be to have issued this (English) command, given a particular (symbolic) plan? No generation, just scoring :)

A Bayesian agent can then resolve ambiguity in really sensible ways

Younger researchers may not realize due to Moore's Law (Lin-Manuel Miranda becomes roughly half as cool every two years), but back when this was published in 2021, it was considered mildly topical
