Ai2

@ai2.bsky.social

Breakthrough AI to solve the world's biggest problems.

› Join us: http://allenai.org/careers

› Get our newsletter: https://share.hsforms.com/1uJkWs5aDRHWhiky3aHooIg3ioxm

🌊 Global Mangrove Watch is using OlmoEarth to refresh mangrove map baselines faster, with higher accuracy & less manual annotation—allowing orgs + governments to respond to threats more quickly.

Learn more → buff.ly/6xLHLk6

November 4, 2025 at 2:53 PM

🇰🇪 With @ifpri.org in Nandi County, Kenya & Mozambique, we built in-season crop-type maps to vastly improve seasonal planning + trusted info for officials.

Learn more → buff.ly/8gprRye

November 4, 2025 at 2:53 PM

🔥 Wildfire deployments with @nasajpl.bsky.social are mapping live fuel moisture at scale to inform readiness.

Learn more → buff.ly/76UxsX9

November 4, 2025 at 2:53 PM

OlmoEarth Platform is focused on impact across a range of sectors—agriculture, wildfire prevention, & more. 👇

November 4, 2025 at 2:53 PM

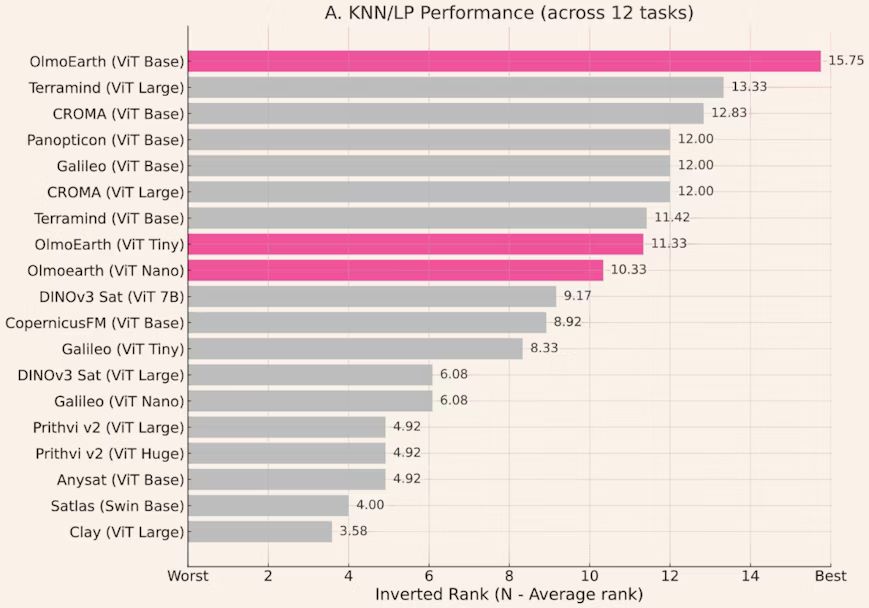

Under the hood is our industry-leading OlmoEarth foundation model family—AI that fuses 🛰️ satellite imagery, 📡 radar, ⛰️ elevation, & 🗺️ detailed map layers.

Open, fast to adapt + deploy—industry-leading on key benchmarks and real-world applications for our partners.

Learn more → buff.ly/L8PKLTf

November 4, 2025 at 2:53 PM

By applying AI to a planet’s worth of data, OlmoEarth Platform is already empowering orgs to act faster & with confidence to secure a sustainable future. 🌲

OlmoEarth delivers intelligence for anything from aiding restoration efforts to protecting natural resources & communities.

November 4, 2025 at 2:52 PM

Introducing OlmoEarth 🌍, state-of-the-art AI foundation models paired with ready-to-use open infrastructure to turn Earth data into clear, up-to-date insights within hours—not years.

November 4, 2025 at 2:52 PM

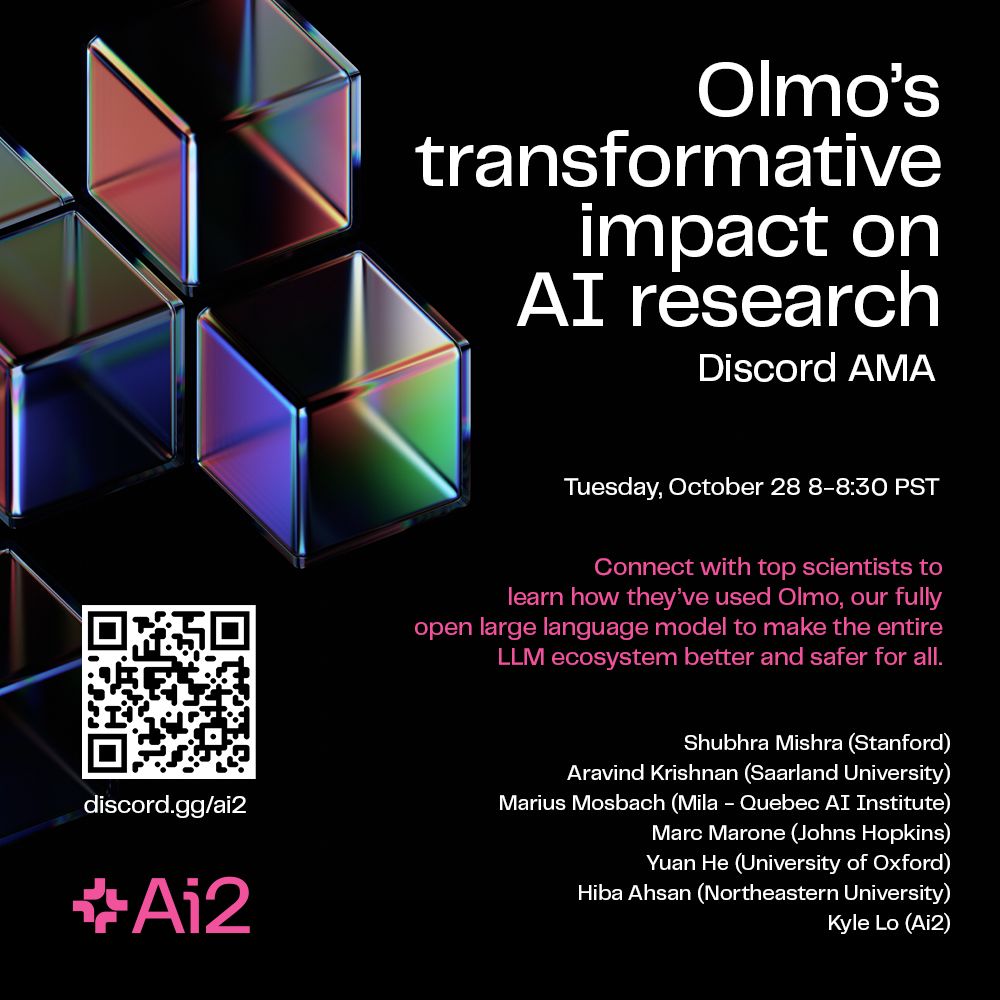

Join us for a live Discord AMA Tues, Oct 28 @ 8:00 a.m. PT with researchers who’ve run real studies using our Olmo language model family—from machine unlearning to knowledge cutoffs to how models acquire new skills. 👇

October 27, 2025 at 4:01 PM

Olmo isn’t just open weights—it’s an open research stack. Try it in the Ai2 Playground: buff.ly/3umETws

AMA on Discord: Tues, Oct 28 @ 8:00 AM PT with some of the researchers behind these studies + an Ai2 Olmo teammate. Join: buff.ly/zIW8KFL

October 24, 2025 at 6:36 PM

6/ 🪞 Two sentences can mean the same thing (“A is B” and “B is A”), but not always to LLMs, depending on their training data makeup. Researchers proved this using Olmo’s open data + identified fixes.

→ buff.ly/IM4VZcK

October 24, 2025 at 6:36 PM

5/ 📅 Using Olmo’s open data + pipelines, a research group traced which documents made it into training data and showed some facts are staler than a model claims them to be—plus how to detect and fix this.

→ buff.ly/qtlKMd4

October 24, 2025 at 6:36 PM

4/ 🧮 With Olmo’s checkpoints, Stanford’s MathCAMPS team tracked when skills like breaking down fractions or solving equations “turn on” in a model during training, and how small training changes impact this.

→ buff.ly/rWE5xGP

October 24, 2025 at 6:36 PM

3/ 🏥 A separate team at Northeastern located where certain signals live inside Olmo and made targeted edits that reduced biased clinical predictions. This kind of audit is only possible because Olmo exposes all its components.

→ buff.ly/HkChr4Q

October 24, 2025 at 6:36 PM

2/ 🤓 Thanks to Olmo being fully open, researchers at KAIST could watch it learn in real time. They slipped in a new fact during training and watched the model’s confidence in recalling that fact later. More varied exposure made the info stick better.

→ buff.ly/FFrKOTn

October 24, 2025 at 6:36 PM

1/ 🧽 Can AI “forget”?

Unlearning = removing a fact from a model without retraining everything or breaking other skills. Using Olmo + our open Dolma corpus, researchers found the more often a fact appears in training, the harder it is to erase.

→ buff.ly/81wSpzp

October 24, 2025 at 6:36 PM

Our fully open Olmo models enable rigorous, reproducible science—from unlearning to clinical NLP, math learning, & fresher knowledge. Here’s how the research community has leveraged Olmo to make the entire AI ecosystem better + more transparent for all. 🧵

October 24, 2025 at 6:36 PM

Olmo isn’t just open weights—it’s an open research stack. Try it in the Ai2 Playground: playground.allenai.org

AMA on Discord: Tues, Oct 28 @ 8:00 AM PT with some of the researchers behind these studies + an Ai2 Olmo teammate. Join: discord.gg/ai2

October 24, 2025 at 6:29 PM

6/ 🪞 Two sentences can mean the same thing (“A is B” and “B is A”), but not always to LLMs, depending on their training data makeup. Researchers proved this using Olmo’s open data + identified fixes.

→ buff.ly/IM4VZcK

October 24, 2025 at 6:29 PM

5/ 📅 Using Olmo’s open data + pipelines, a research group traced which documents made it into training data and showed some facts are staler than a model claims them to be—plus how to detect and fix this.

→ buff.ly/qtlKMd4

October 24, 2025 at 6:29 PM

4/ 🧮 With Olmo’s checkpoints, Stanford’s MathCAMPS team tracked when skills like breaking down fractions or solving equations “turn on” in a model during training, and how small training changes impact this.

buff.ly/rWE5xGP

October 24, 2025 at 6:29 PM

3/ 🏥 A separate team at Northeastern located where certain signals live inside Olmo and made targeted edits that reduced biased clinical predictions. This kind of audit is only possible because Olmo exposes all its components.

→ buff.ly/HkChr4Q

October 24, 2025 at 6:29 PM

2/ 🤓 Thanks to Olmo being fully open, researchers at KAIST could watch it learn in real time. They slipped in a new fact during training and watched the model’s confidence in recalling that fact later. More varied exposure made the info stick better.

→ buff.ly/FFrKOTn

October 24, 2025 at 6:29 PM

1/ 🧽 Can AI "forget"?

Unlearning = removing a fact from a model without retraining everything or breaking other skills. Using Olmo + our open Dolma corpus, researchers found the more often a fact appears in training, the harder it is to erase.

→ buff.ly/81wSpzp

October 24, 2025 at 6:29 PM

Olmo isn’t just open weights—it’s an open research stack. Try it in the Ai2 Playground: buff.ly/3umETws

AMA on Discord: Tues, Oct 28 @ 8:00 AM PT with some of the researchers behind these studies + an Ai2 Olmo teammate. Join: buff.ly/zIW8KFL

October 24, 2025 at 6:01 PM

olmOCR 2 can also extract complex tables from papers, like our DataDecide report, making them easier to parse and work with. 📋

October 22, 2025 at 9:20 PM
