Check out the preprint here: arxiv.org/pdf/2506.10150

Our results show we can use LLMs-as-judge to monitor LLMs-as-companion!

If we use flawed evaluations to train and monitor "empathic" AI, we risk creating systems that propagate a broken standard of what good communication looks like.

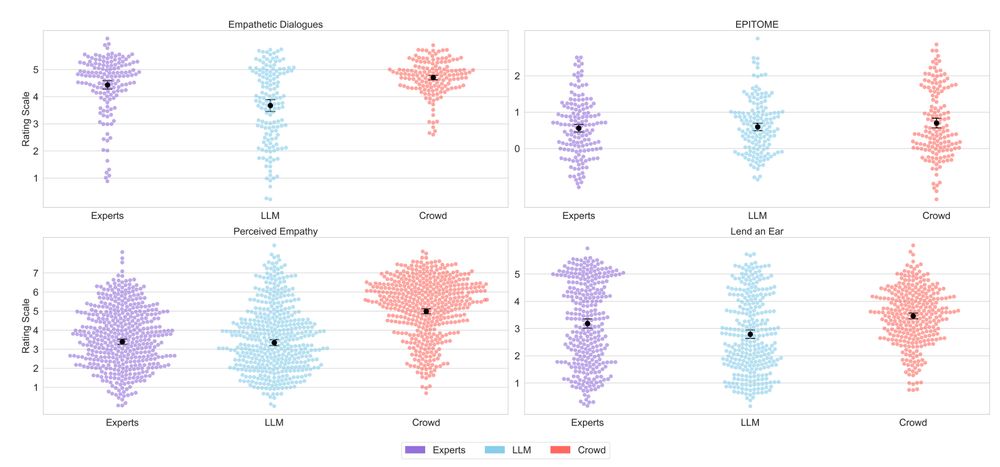

Crowdworkers often

- have limited attention

- rely on heuristics like “it’s the thought that counts”

- focus on intentions rather than actual wording

- show systematic rating inflation due to social desirability bias

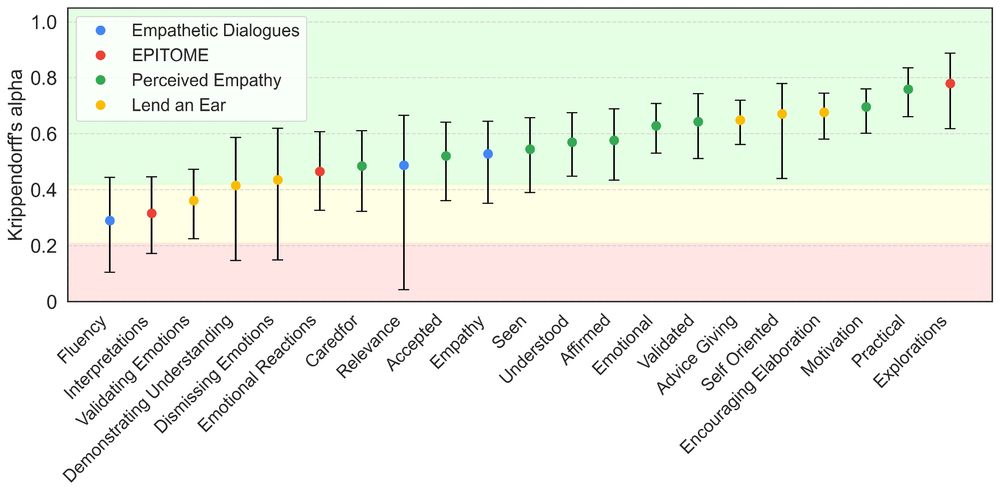

Here’s how expert agreement (Krippendorff's alpha) varied across empathy sub-components:

The reliability of expert judgments depends on the clarity of the construct. For nuanced, subjective components of empathic communication, experts often disagree.
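
Krippendorff's alpha compares the disagreement observed within each rated item to the disagreement expected by chance, alpha = 1 - D_o / D_e, so 1 means perfect agreement and values near 0 mean chance-level agreement. Below is a minimal sketch (not the preprint's code), assuming numeric ratings, `None` for missing ratings, and the squared-difference (interval) distance; `krippendorff_alpha` and `interval_delta` are hypothetical helper names.

```python
from itertools import permutations
from typing import Callable, Optional, Sequence


def interval_delta(a: float, b: float) -> float:
    """Squared difference, the interval-level distance."""
    return (a - b) ** 2


def krippendorff_alpha(
    units: Sequence[Sequence[Optional[float]]],
    delta: Callable[[float, float], float] = interval_delta,
) -> float:
    """Each inner sequence holds one item's ratings (one slot per rater, None = missing)."""
    # Only items with at least two ratings ("pairable" values) contribute.
    pairable = [[v for v in unit if v is not None] for unit in units]
    pairable = [values for values in pairable if len(values) >= 2]
    n = sum(len(values) for values in pairable)
    if n < 2:
        raise ValueError("need at least two pairable ratings")

    # Observed disagreement: ordered pairs of ratings within the same item.
    d_o = sum(
        sum(delta(a, b) for a, b in permutations(values, 2)) / (len(values) - 1)
        for values in pairable
    ) / n

    # Expected disagreement: ordered pairs drawn from all pairable ratings pooled.
    pooled = [v for values in pairable for v in values]
    d_e = sum(delta(a, b) for a, b in permutations(pooled, 2)) / (n * (n - 1))
    if d_e == 0:
        raise ValueError("alpha is undefined when all ratings are identical")
    return 1.0 - d_o / d_e
```

For example, `krippendorff_alpha([[1, 2], [3, 3]])` returns 8/11 (about 0.727). Passing `delta=lambda a, b: 0.0 if a == b else 1.0` gives the nominal variant; which variant the preprint used is not stated in this thread.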

We specifically looked at 21 sub-components of empathic communication drawn from 4 evaluative frameworks.

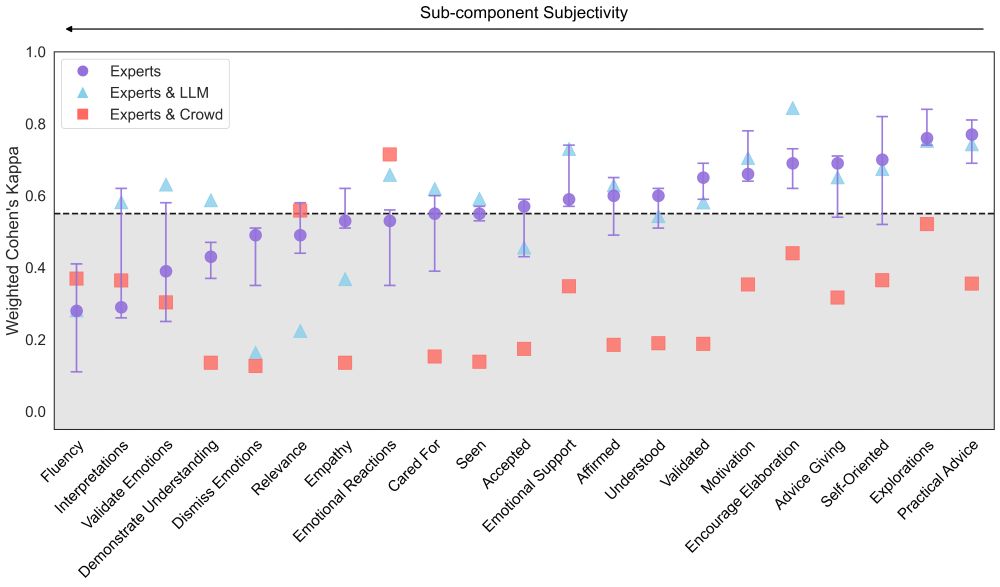

The result? LLMs consistently matched expert judgments better than crowdworkers did! 🔥

We show that a simple approach to learning safe RL policies can outperform most offline RL methods (plus theoretical guarantees!).

How? Just allow the state-action pairs that have been seen enough times in the data! 🤯

arxiv.org/abs/2410.09361
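
The thread doesn't spell out the algorithm, so here is a rough tabular illustration of the idea only (not the paper's method): count each (state, action) pair in the offline dataset, discard pairs seen fewer than a hypothetical `min_count` times, then run value iteration on the empirical model while maximizing only over the surviving pairs. Discrete states and actions, the transition tuple layout, and the helper names `allowed_actions` and `restricted_policy` are all assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Set, Tuple

State = Hashable
Action = Hashable
# One logged transition (assumed layout): (state, action, reward, next_state, done)
Transition = Tuple[State, Action, float, State, bool]


def allowed_actions(data: List[Transition], min_count: int) -> Dict[State, Set[Action]]:
    """Keep only state-action pairs that appear at least `min_count` times."""
    counts = Counter((s, a) for s, a, _, _, _ in data)
    allowed: Dict[State, Set[Action]] = defaultdict(set)
    for (s, a), n in counts.items():
        if n >= min_count:
            allowed[s].add(a)
    return dict(allowed)


def restricted_policy(
    data: List[Transition], min_count: int, gamma: float = 0.9, iters: int = 100
) -> Dict[State, Action]:
    """Value iteration on the empirical model, maximizing only over allowed actions."""
    allowed = allowed_actions(data, min_count)

    # Empirical outcomes for allowed pairs only; rare pairs are never modeled.
    outcomes: Dict[Tuple[State, Action], List[Tuple[float, State, bool]]] = defaultdict(list)
    for s, a, r, s_next, done in data:
        if a in allowed.get(s, set()):
            outcomes[(s, a)].append((r, s_next, done))

    # States with no allowed action keep value 0 (a simplifying assumption).
    values: Dict[State, float] = defaultdict(float)

    def q(s: State, a: Action) -> float:
        # Average one-step return over the logged outcomes of (s, a).
        samples = outcomes[(s, a)]
        total = sum(r + (0.0 if done else gamma * values[s_next]) for r, s_next, done in samples)
        return total / len(samples)

    for _ in range(iters):
        new_values: Dict[State, float] = defaultdict(float)
        for s, acts in allowed.items():
            new_values[s] = max(q(s, a) for a in acts)
        values = new_values

    return {s: max(acts, key=lambda a: q(s, a)) for s, acts in allowed.items()}
```

With five logged transitions `(0, "safe", 1.0, 1, True)` and a single `(0, "risky", 10.0, 1, True)`, `min_count=3` yields the policy `{0: "safe"}`, while `min_count=1` picks `"risky"`: the rarely observed action is excluded even though its one logged reward looks better.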