Find more on openfeature.dev

#OFREP is short for OpenFeature #Remote #Evaluation #Protocol. It is a protocol specification that defines how #providers communicate with any feature flag management system that supports it.

Find it on github.com/open-feature/protocol
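The following is a minimal TypeScript sketch of an OFREP single-flag evaluation round trip. The path `/ofrep/v1/evaluate/flags/{key}`, the POST method and the response fields reflect our reading of the spec at github.com/open-feature/protocol, so verify them there. `buildOfrepRequest` and `parseOfrepResponse` are hypothetical helper names, and the base URL and flag key are made up.

```typescript
// Assumed shape of an OFREP evaluation response; see the spec for the full schema.
interface OfrepEvaluation {
  key: string;
  value: unknown;
  reason?: string;
  variant?: string;
  metadata?: Record<string, unknown>;
}

// Hypothetical helper: the HTTP request a provider could send to evaluate one flag.
function buildOfrepRequest(baseUrl: string, flagKey: string, context: Record<string, unknown>) {
  return {
    method: "POST",
    // Assumed single-flag evaluation path; verify against the OFREP spec.
    url: `${baseUrl.replace(/\/$/, "")}/ofrep/v1/evaluate/flags/${encodeURIComponent(flagKey)}`,
    headers: { "Content-Type": "application/json" },
    // The evaluation context travels in the JSON request body.
    body: JSON.stringify({ context }),
  };
}

// Hypothetical helper: parses a response body and rejects payloads without key and value.
function parseOfrepResponse(json: string): OfrepEvaluation {
  const data = JSON.parse(json) as Partial<OfrepEvaluation>;
  if (typeof data.key !== "string" || !("value" in data)) {
    throw new Error("Malformed OFREP response");
  }
  return data as OfrepEvaluation;
}

// Usage: in Node 18+ or a browser the request could be sent with
// fetch(req.url, { method: req.method, headers: req.headers, body: req.body }).
const req = buildOfrepRequest("https://flags.example.com/", "new-checkout", { targetingKey: "user-123" });
const result = parseOfrepResponse('{"key":"new-checkout","value":true,"reason":"TARGETING_MATCH","variant":"on"}');
console.log(req.url, result.value, result.variant);
```

Because the wire format is standardized, a single generic provider built this way could talk to every backend that implements the protocol.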

With the specification of the #TrackingAPI, we can tie application #metrics and the feature flag system together. We do not need a vendor that supports flag #analytics, and we do not need two separate systems: all data can go to the same analytics backend!

TIL: using the #OpenTelemetry #protocol (#OTLP), applications can be instrumented to send #telemetry data to analytics backends. This even works when running the app #locally!

Pro tip: set the env var 'OTEL_LOG_LEVEL=debug' during development to see the SDK's internal diagnostic logs.
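Here is a small sketch of how an OTLP/HTTP exporter decides where to send data, which is why pointing an app at a locally running collector needs little configuration. It follows our reading of the OpenTelemetry exporter spec. The default base endpoint is `http://localhost:4318`. The signal path (`/v1/traces`, `/v1/metrics`, `/v1/logs`) is appended to `OTEL_EXPORTER_OTLP_ENDPOINT`. A signal-specific variable such as `OTEL_EXPORTER_OTLP_TRACES_ENDPOINT` is used as-is. `resolveOtlpEndpoint` is a hypothetical helper, not part of any SDK.

```typescript
type Signal = "traces" | "metrics" | "logs";

// Hypothetical helper mirroring the OTLP/HTTP endpoint rules; not an SDK function.
function resolveOtlpEndpoint(signal: Signal, env: Record<string, string | undefined>): string {
  // A signal-specific endpoint is used verbatim.
  const specific = env[`OTEL_EXPORTER_OTLP_${signal.toUpperCase()}_ENDPOINT`];
  if (specific) return specific;
  // Otherwise the generic base endpoint (default: a local collector) gets the signal path appended.
  const base = env.OTEL_EXPORTER_OTLP_ENDPOINT ?? "http://localhost:4318";
  return `${base.replace(/\/$/, "")}/v1/${signal}`;
}

// In Node you would pass process.env; plain objects keep the sketch dependency-free.
console.log(resolveOtlpEndpoint("traces", {}));
console.log(resolveOtlpEndpoint("logs", { OTEL_EXPORTER_OTLP_ENDPOINT: "http://collector:4318/" }));
```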

The OpenFeature (OF) #project consists of more than 40 #repositories. The ones most essential to the standard are:

/spec: contains the specification itself

/*-sdk repos: language-specific #SDK implementations of the spec

/*-sdk-contrib: language-specific provider & hook #implementations

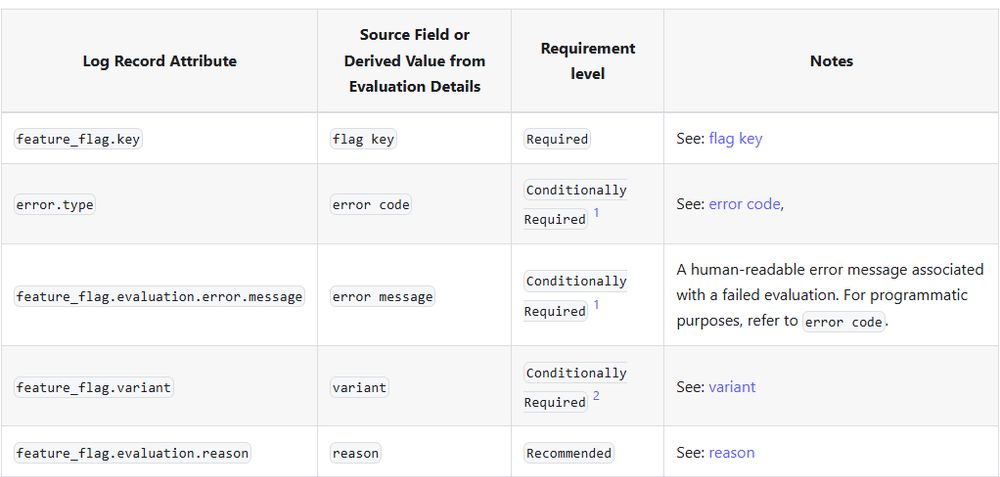

#EvaluationDetails is the data structure returned by a flag evaluation. It contains fields such as the #flagKey, #errorCode, #variant, #reason & flag #metadata (e.g. #contextId or #flagSetId). These can be mapped to #OpenTelemetry #log #record conventions.

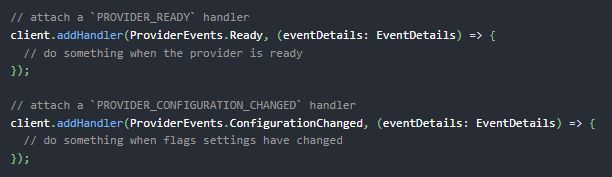
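The sketch below shows one way to map EvaluationDetails to log-record attributes. `EvaluationDetails` is redeclared as a minimal stand-in, and `toLogAttributes` is hypothetical. The attribute names are our reading of the OpenTelemetry feature-flag semantic conventions. Those conventions have been evolving, so check the current version before relying on the exact keys.

```typescript
// Minimal stand-in for OpenFeature's EvaluationDetails; the real type has more fields.
interface EvaluationDetails<T> {
  flagKey: string;
  value: T;
  variant?: string;
  reason?: string;
  errorCode?: string;
  flagMetadata: Record<string, string | number | boolean | undefined>;
}

// Hypothetical mapper to OTel log-record attributes. The attribute names are an
// assumption based on the (still evolving) OTel feature-flag semantic conventions.
function toLogAttributes<T>(details: EvaluationDetails<T>): Record<string, string> {
  const attrs: Record<string, string> = { "feature_flag.key": details.flagKey };
  if (details.variant !== undefined) attrs["feature_flag.result.variant"] = details.variant;
  if (details.reason !== undefined) attrs["feature_flag.result.reason"] = details.reason;
  if (details.errorCode !== undefined) attrs["error.type"] = details.errorCode;
  // Well-known flag metadata keys become their own attributes.
  const { contextId, flagSetId } = details.flagMetadata;
  if (contextId !== undefined) attrs["feature_flag.context.id"] = String(contextId);
  if (flagSetId !== undefined) attrs["feature_flag.set.id"] = String(flagSetId);
  return attrs;
}

const attrs = toLogAttributes({
  flagKey: "new-checkout",
  value: true,
  variant: "on",
  reason: "TARGETING_MATCH",
  flagMetadata: { contextId: "ctx-42", flagSetId: "shop" },
});
console.log(attrs);
```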

#Events are state changes of the #provider: ready, configuration changed, error and stale.

#Event #handlers can be implemented to react to them. For example, we may want to wait until the provider is 'ready' before evaluating the feature flags that drive UI rendering.

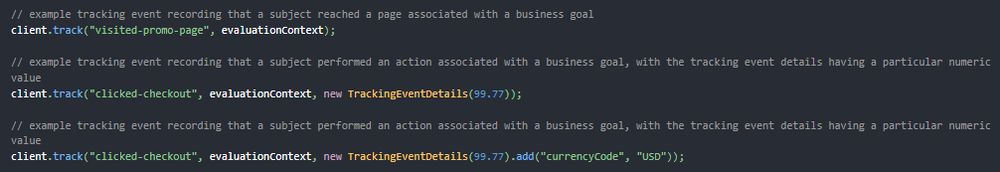
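This is a self-contained sketch of provider events and handlers. The `PROVIDER_*` names follow the OpenFeature spec's provider events as we understand them. `ProviderEventBus`, `addHandler` and `whenReady` are hypothetical names for this illustration, not the SDK's API.

```typescript
// Provider event types, mirroring the four states listed above.
const ProviderEvents = {
  Ready: "PROVIDER_READY",
  ConfigurationChanged: "PROVIDER_CONFIGURATION_CHANGED",
  Error: "PROVIDER_ERROR",
  Stale: "PROVIDER_STALE",
} as const;
type ProviderEvent = (typeof ProviderEvents)[keyof typeof ProviderEvents];
type EventHandler = (details?: { message?: string }) => void;

// Hypothetical event bus: the provider emits events, application code registers handlers.
class ProviderEventBus {
  private readonly handlers = new Map<ProviderEvent, EventHandler[]>();

  addHandler(eventType: ProviderEvent, handler: EventHandler): void {
    const list = this.handlers.get(eventType) ?? [];
    list.push(handler);
    this.handlers.set(eventType, list);
  }

  emit(eventType: ProviderEvent, details?: { message?: string }): void {
    for (const handler of this.handlers.get(eventType) ?? []) handler(details);
  }

  // Resolves on the next READY event. A real SDK would also check whether the
  // provider is already ready; otherwise this could wait forever.
  whenReady(): Promise<void> {
    return new Promise<void>((resolve) => this.addHandler(ProviderEvents.Ready, () => resolve()));
  }
}

// Usage: render flag-driven UI only once the provider is ready.
const bus = new ProviderEventBus();
let readyEvents = 0;
bus.addHandler(ProviderEvents.Ready, () => { readyEvents += 1; });
bus.addHandler(ProviderEvents.Error, (details) => console.error("provider error:", details?.message));
bus.whenReady().then(() => console.log("provider ready, rendering UI"));
bus.emit(ProviderEvents.Stale);
bus.emit(ProviderEvents.Ready);
```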

Looking at actual #code examples makes it obvious what #tracking calls are used for. By calling the #track function on the #OF #client and passing #TrackingEventDetails, a numeric value and other optional data can be sent to the backend:

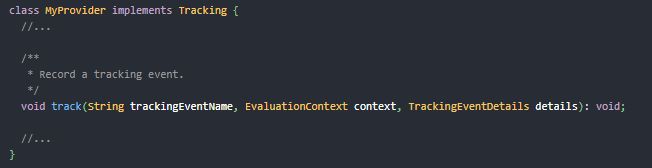
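Here is a call-site sketch of a track call with minimal stand-in types. The argument order (event name, context, details) reflects what we understand the server-side SDKs to use; client-side SDKs omit the context argument. `TrackingClient`, `onCheckoutCompleted`, the event name and the `currency` field are hypothetical.

```typescript
// Minimal stand-ins; the real SDK types carry more fields.
type EvaluationContext = { targetingKey?: string; [attribute: string]: unknown };
// TrackingEventDetails: an optional numeric value plus arbitrary extra data.
type TrackingEventDetails = { value?: number; [field: string]: unknown };

interface TrackingClient {
  track(eventName: string, context?: EvaluationContext, details?: TrackingEventDetails): void;
}

// Hypothetical application code: report a completed purchase with its amount.
function onCheckoutCompleted(client: TrackingClient, userId: string, total: number): void {
  client.track("purchase-completed", { targetingKey: userId }, { value: total, currency: "EUR" });
}

// A fake client that only records calls, standing in for a real OpenFeature client.
const calls: Array<[string, TrackingEventDetails | undefined]> = [];
const fakeClient: TrackingClient = {
  track: (eventName, _context, details) => { calls.push([eventName, details]); },
};
onCheckoutCompleted(fakeClient, "user-123", 49.99);
console.log(calls);
```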

To support tracking, the OpenFeature #provider needs to implement the #Tracking interface, which contains a single function called track. This function performs #side-effects to record the tracking #event (e.g. store a specific metric).

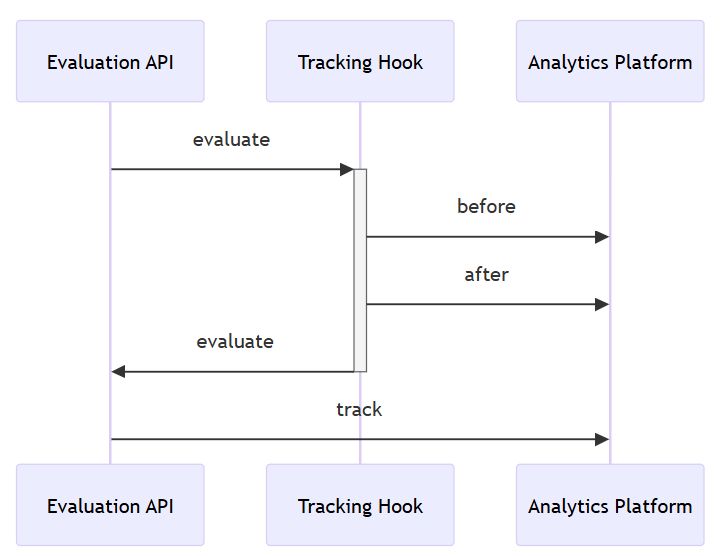
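On the provider side, a minimal sketch could look like this. `Tracking` is a simplified version of the single-function interface described above. `InMemoryMetricsProvider` is hypothetical: its side effect is summing values in memory, where a real provider would forward the event to its backend.

```typescript
type EvaluationContext = { targetingKey?: string; [attribute: string]: unknown };
type TrackingEventDetails = { value?: number; [field: string]: unknown };

// Simplified Tracking interface: one function, called for every tracking event.
interface Tracking {
  track(eventName: string, context: EvaluationContext, details: TrackingEventDetails): void;
}

// Hypothetical provider whose side effect is summing numeric values per event and user.
class InMemoryMetricsProvider implements Tracking {
  readonly totals = new Map<string, number>();

  track(eventName: string, context: EvaluationContext, details: TrackingEventDetails): void {
    const key = `${eventName}:${context.targetingKey ?? "anonymous"}`;
    this.totals.set(key, (this.totals.get(key) ?? 0) + (details.value ?? 0));
  }
}

const provider = new InMemoryMetricsProvider();
provider.track("purchase-completed", { targetingKey: "user-123" }, { value: 20 });
provider.track("purchase-completed", { targetingKey: "user-123" }, { value: 15.5 });
console.log(provider.totals.get("purchase-completed:user-123"));
```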

How does #tracking work?

Calling a #track function stores certain #metrics for a user session (e.g. total purchase amount). #Hooks can be used to record which #flag #evaluations that session received. Combined, one can see, e.g., how a new feature impacts purchases.
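This worked sketch shows how the two data streams combine. An after-hook style function records which variant each session was served, and a track-style function records purchase amounts. Joining the two gives the average purchase per variant. All names, session IDs and amounts are hypothetical. In practice both streams would land in the same analytics backend and be joined there.

```typescript
// Hypothetical in-memory stores standing in for an analytics backend.
const variantBySession = new Map<string, string>(); // filled by an "after" hook
const purchasesBySession = new Map<string, number>(); // filled by track calls

// What an "after" hook might record: which variant a session was served.
function recordEvaluation(sessionId: string, variant: string): void {
  variantBySession.set(sessionId, variant);
}

// What a track call might record: the purchase amounts of a session.
function recordPurchase(sessionId: string, amount: number): void {
  purchasesBySession.set(sessionId, (purchasesBySession.get(sessionId) ?? 0) + amount);
}

// Join both data sets: average purchase total per variant.
function averagePurchaseByVariant(): Map<string, number> {
  const sums = new Map<string, { total: number; sessions: number }>();
  variantBySession.forEach((variant, session) => {
    const entry = sums.get(variant) ?? { total: 0, sessions: 0 };
    entry.total += purchasesBySession.get(session) ?? 0;
    entry.sessions += 1;
    sums.set(variant, entry);
  });
  const averages = new Map<string, number>();
  sums.forEach((s, variant) => averages.set(variant, s.total / s.sessions));
  return averages;
}

recordEvaluation("s1", "on");
recordEvaluation("s2", "on");
recordEvaluation("s3", "off");
recordPurchase("s1", 30);
recordPurchase("s2", 10);
recordPurchase("s3", 12);
const byVariant = averagePurchaseByVariant();
console.log(byVariant);
```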

See us at the summit, as well as at KubeCon EU!

#Experimentation is the term for testing the #impact of a new feature. Certain #metrics of the new feature can be #tracked, e.g. to check whether users actually use the new feature. #Tracking enables product decisions based on #data.

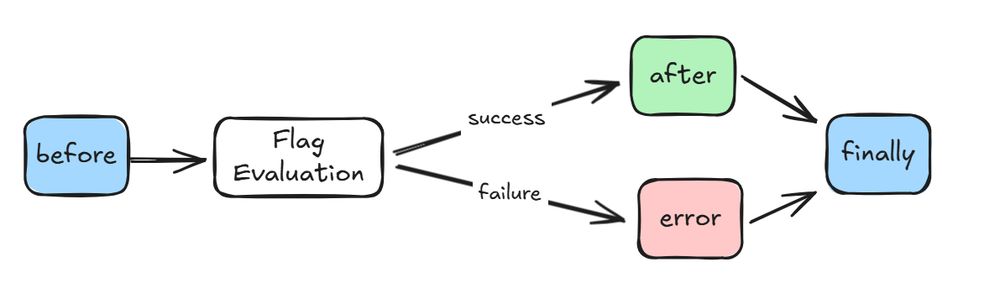

The #Flag #Evaluation #Life-Cycle has 4 stages: before, after, error and finally.

In each stage, so-called #Hooks can run custom logic, such as adding attributes to the evaluation context, #logging, or cleaning up resources.

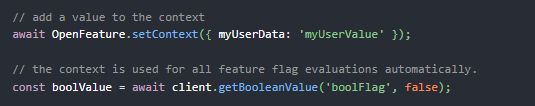
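The four stages can be sketched as a simplified evaluation wrapper. `before` hooks may return extra context. `after` hooks see the resolved value. `error` hooks run on failure, in which case the caller gets the default value. `finally` hooks always run. `Hook` and `evaluateWithHooks` are hypothetical simplifications. Real SDK hooks receive richer arguments and follow ordering rules that this sketch does not model.

```typescript
type EvaluationContext = Record<string, unknown>;

// Simplified hook with one optional function per life-cycle stage.
interface Hook {
  before?(flagKey: string, context: EvaluationContext): EvaluationContext | undefined;
  after?(flagKey: string, value: unknown): void;
  error?(flagKey: string, err: unknown): void;
  finally?(flagKey: string): void;
}

// Hypothetical evaluation wrapper that runs the hooks stage by stage.
function evaluateWithHooks<T>(
  flagKey: string,
  defaultValue: T,
  context: EvaluationContext,
  hooks: Hook[],
  resolve: (flagKey: string, context: EvaluationContext) => T,
): T {
  try {
    let ctx = context;
    // "before": hooks may return extra context, which is merged in.
    for (const hook of hooks) ctx = { ...ctx, ...(hook.before?.(flagKey, ctx) ?? {}) };
    const value = resolve(flagKey, ctx);
    for (const hook of hooks) hook.after?.(flagKey, value);
    return value;
  } catch (err) {
    // "error": runs on failure; the caller receives the default value.
    for (const hook of hooks) hook.error?.(flagKey, err);
    return defaultValue;
  } finally {
    // "finally": always runs, e.g. to clean up resources.
    for (const hook of hooks) hook.finally?.(flagKey);
  }
}

const log: string[] = [];
const loggingHook: Hook = {
  before: (key) => { log.push(`before ${key}`); return { region: "eu" }; },
  after: (key, value) => { log.push(`after ${key}=${String(value)}`); },
  error: (key) => { log.push(`error ${key}`); },
  finally: (key) => { log.push(`finally ${key}`); },
};
const enabled = evaluateWithHooks("new-checkout", false, { targetingKey: "u1" }, [loggingHook],
  (_key, ctx) => ctx.region === "eu");
const fallback = evaluateWithHooks("broken-flag", false, {}, [loggingHook],
  () => { throw new Error("resolver unavailable"); });
console.log(enabled, fallback, log);
```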

There are #client-side and #server-side OpenFeature #SDKs. The main difference lies in how the #evaluation #context is used: on the server side it changes with every request, while on the client side it usually stays the same for a single user.

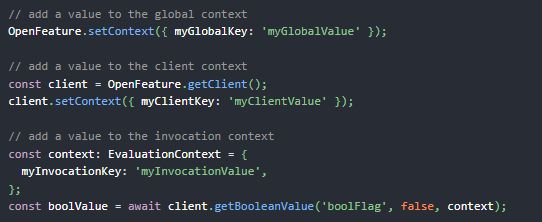
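This sketch contrasts the two ways of using the context. A server-style client takes the context on every call (one per request). A client-style client stores it once per user and omits it from evaluations. Both classes are hypothetical and leave providers out entirely. As we understand it, real client-side SDKs notify the provider when the context changes, rather than re-running a local rule as done here.

```typescript
type EvaluationContext = { targetingKey?: string; [attribute: string]: unknown };
type Rule = (context: EvaluationContext) => boolean;

// Server-side style (dynamic context): every evaluation carries the current request's context.
class ServerStyleClient {
  private readonly rules: Record<string, Rule>;
  constructor(rules: Record<string, Rule>) { this.rules = rules; }

  getBooleanValue(flagKey: string, defaultValue: boolean, context: EvaluationContext): boolean {
    const rule = this.rules[flagKey];
    return rule ? rule(context) : defaultValue;
  }
}

// Client-side style (static context): the context is set once per user; evaluations omit it.
class ClientStyleClient {
  private context: EvaluationContext = {};
  private readonly rules: Record<string, Rule>;
  constructor(rules: Record<string, Rule>) { this.rules = rules; }

  setContext(context: EvaluationContext): void { this.context = context; }

  getBooleanValue(flagKey: string, defaultValue: boolean): boolean {
    const rule = this.rules[flagKey];
    return rule ? rule(this.context) : defaultValue;
  }
}

// Hypothetical rule: show a banner to users on the "beta" plan.
const rules: Record<string, Rule> = { "beta-banner": (ctx) => ctx.plan === "beta" };

const server = new ServerStyleClient(rules);
const perRequest = [{ targetingKey: "a", plan: "beta" }, { targetingKey: "b", plan: "free" }]
  .map((ctx) => server.getBooleanValue("beta-banner", false, ctx));

const browser = new ClientStyleClient(rules);
browser.setContext({ targetingKey: "a", plan: "beta" });
const shown = browser.getBooleanValue("beta-banner", false);
console.log(perRequest, shown);
```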

What is an OF evaluation context?

A context is a set of attributes (e.g. a user ID or an email address) that can be used for #dynamic flag #evaluations. A feature can be toggled for specific users by using, e.g., the email address as a context attribute.

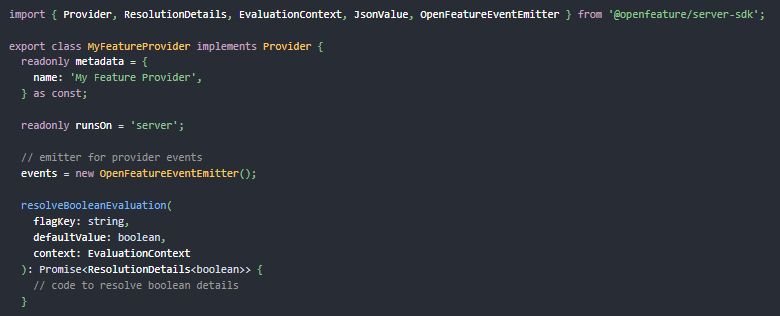

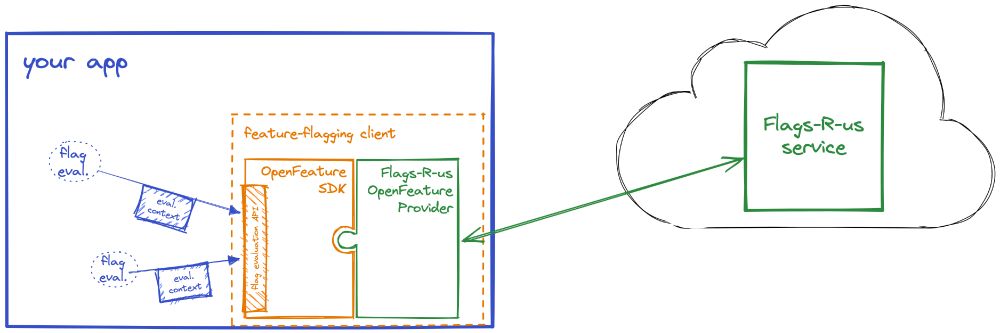
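Here is a hypothetical targeting rule driven by the email attribute of the context. The attribute name `email`, the domain `example.com` and the allow-list are illustrative assumptions. With OpenFeature, such rules normally live in the flag management system, and the application only supplies the context.

```typescript
// Minimal context type; "email" is an illustrative custom attribute.
type EvaluationContext = { targetingKey?: string; email?: string; [attribute: string]: unknown };

// Hypothetical targeting rule: enable the feature for company addresses
// and for an explicit allow-list of users.
function isFeatureEnabled(context: EvaluationContext, allowList: ReadonlySet<string>): boolean {
  const email = context.email?.toLowerCase();
  if (email === undefined) return false; // no email in the context: feature stays off
  return email.endsWith("@example.com") || allowList.has(email);
}

const betaTesters: ReadonlySet<string> = new Set(["jane@customer.org"]);
console.log(isFeatureEnabled({ targetingKey: "u1", email: "Dev@Example.com" }, betaTesters));
console.log(isFeatureEnabled({ targetingKey: "u2", email: "jane@customer.org" }, betaTesters));
console.log(isFeatureEnabled({ targetingKey: "u3", email: "joe@other.net" }, betaTesters));
```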

What is an OpenFeature Provider?

A provider is the adapter between the Evaluation API and the feature flag management system. In other words: when a flag value is requested via the Evaluation API, the provider knows where to find the flag configuration.

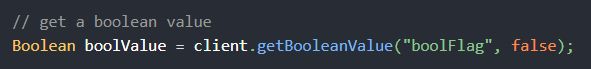
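The following sketches a provider as an adapter. `resolveBooleanEvaluation` echoes the method name used by the JavaScript SDK's provider interface as far as we know, but the signature here is trimmed. `MapBackedProvider` and `FlagConfig` are hypothetical. The "flag management system" in this sketch is just an in-memory map, and the context is ignored, whereas a real provider would use it for targeting.

```typescript
type EvaluationContext = Record<string, unknown>;

// Simplified resolution result; reason and error code strings follow OpenFeature naming.
interface ResolutionDetails<T> {
  value: T;
  variant?: string;
  reason: string;
  errorCode?: string;
}

// Simplified provider contract; real providers also resolve strings, numbers and objects.
interface BooleanProvider {
  resolveBooleanEvaluation(flagKey: string, defaultValue: boolean, context: EvaluationContext): ResolutionDetails<boolean>;
}

// Hypothetical flag configuration, as a flag management system might store it.
type FlagConfig = { variants: Record<string, boolean>; defaultVariant: string };

// Hypothetical provider that knows where the flag configuration lives: an in-memory map.
class MapBackedProvider implements BooleanProvider {
  private readonly flags: Record<string, FlagConfig>;

  constructor(flags: Record<string, FlagConfig>) {
    this.flags = flags;
  }

  resolveBooleanEvaluation(flagKey: string, defaultValue: boolean, _context: EvaluationContext): ResolutionDetails<boolean> {
    const flag = this.flags[flagKey];
    if (flag === undefined) {
      // Unknown flag: hand back the caller's default and report why.
      return { value: defaultValue, reason: "ERROR", errorCode: "FLAG_NOT_FOUND" };
    }
    return { value: flag.variants[flag.defaultVariant], variant: flag.defaultVariant, reason: "STATIC" };
  }
}

const provider = new MapBackedProvider({
  "new-checkout": { variants: { on: true, off: false }, defaultVariant: "on" },
});
const found = provider.resolveBooleanEvaluation("new-checkout", false, {});
const missing = provider.resolveBooleanEvaluation("unknown-flag", false, {});
console.log(found, missing);
```

Swapping the map for an HTTP call to a vendor's API would not change the application code, which only talks to the Evaluation API.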

What is the Evaluation API?

It is part of the OpenFeature SDK and, as the name suggests, a standardized API for evaluating feature flags. The resulting value can be used in code to toggle specific features on and off remotely.
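From the application's point of view, the Evaluation API boils down to calls like the one below. `FlagClient` and `renderCheckout` are hypothetical. The method name mirrors the SDK's `getBooleanValue`, but the stub client, backed by a map that stands in for a remote flag configuration, is made up for illustration.

```typescript
// Hypothetical minimal client exposing an Evaluation-API-style method.
interface FlagClient {
  getBooleanValue(flagKey: string, defaultValue: boolean): boolean;
}

// The application only asks "is this flag on?"; where the value comes from is the provider's job.
function renderCheckout(client: FlagClient): string {
  return client.getBooleanValue("new-checkout", false) ? "new checkout" : "classic checkout";
}

// Stub client: the map stands in for flag configuration managed remotely.
const remoteFlags = new Map<string, boolean>();
const client: FlagClient = {
  getBooleanValue: (flagKey, defaultValue) => remoteFlags.get(flagKey) ?? defaultValue,
};

const before = renderCheckout(client); // flag not configured yet, so the default applies
remoteFlags.set("new-checkout", true); // flag switched on in the management system
const after = renderCheckout(client);
console.log(before, after);
```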

Let's start with the most obvious question:

What is OpenFeature?

A standardization of feature flagging.

It's an open-source project, part of the CNCF landscape, and standardizes the look and feel of feature flags.