venkatasg.net

Language models (LMs) are remarkably good at generating novel well-formed sentences, leading to claims that they have mastered grammar.

Yet they often assign higher probability to ungrammatical strings than to grammatical strings.

How can both things be true? 🧵👇

Our paper (co w/ Vinith Suriyakumar) on syntax-domain spurious correlations will appear at #NeurIPS2025 as a ✨spotlight!

+ @marzyehghassemi.bsky.social, @byron.bsky.social, Levent Sagun

We investigate if LMs capture these inferences from connectives when they cannot rely on world knowledge.

New paper w/ Daniel, Will, @jessyjli.bsky.social

Asst or Assoc.

We have a thriving group sites.utexas.edu/compling/ and a long proud history in the space. (For instance, fun fact, Jeff Elman was a UT Austin Linguistics Ph.D.)

faculty.utexas.edu/career/170793

🤘

🎯 We demonstrate that ranking-based discriminator training can significantly reduce this gap, and improvements on one task often generalize to others!

🧵👇

Will be in Montreal all week and excited to chat about LM interpretability + its interaction with human cognition and ling theory.

New work with @kmahowald.bsky.social and @cgpotts.bsky.social!

🧵👇!

Looking forward to chatting with people about interpretability, data efficient training, cog sci and LLM consistency.

Come join me, @kmahowald.bsky.social, and @jessyjli.bsky.social as we tackle interesting research questions at the intersection of ling, cogsci, and ai!

Some topics I am particularly interested in:

Gift article from NYT www.nytimes.com/2025/08/15/u...

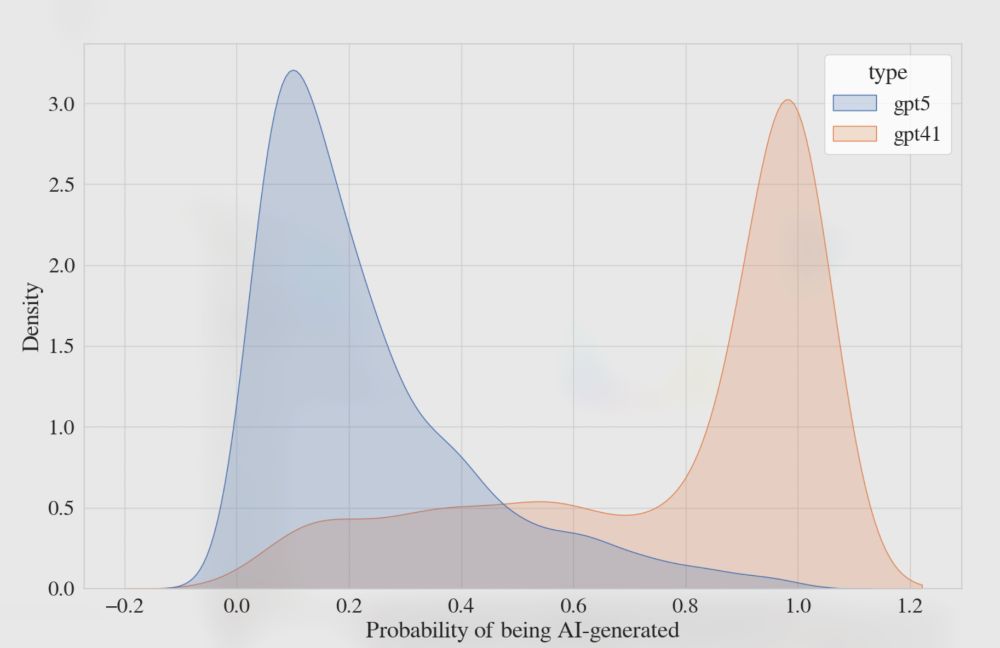

Additionally, we present evidence that both *syntactic* and *discourse* diversity measures show strong homogenization that the lexical and cosine measures used in this paper do not capture.

I’m excited to announce a new paper accepted to ACL 2025, in collaboration with Patrick Sui, Philippe Laban, and others!

✨linguistically + cognitively-motivated evals (as always!)

✨understanding multilingualism + representation learning (new!)

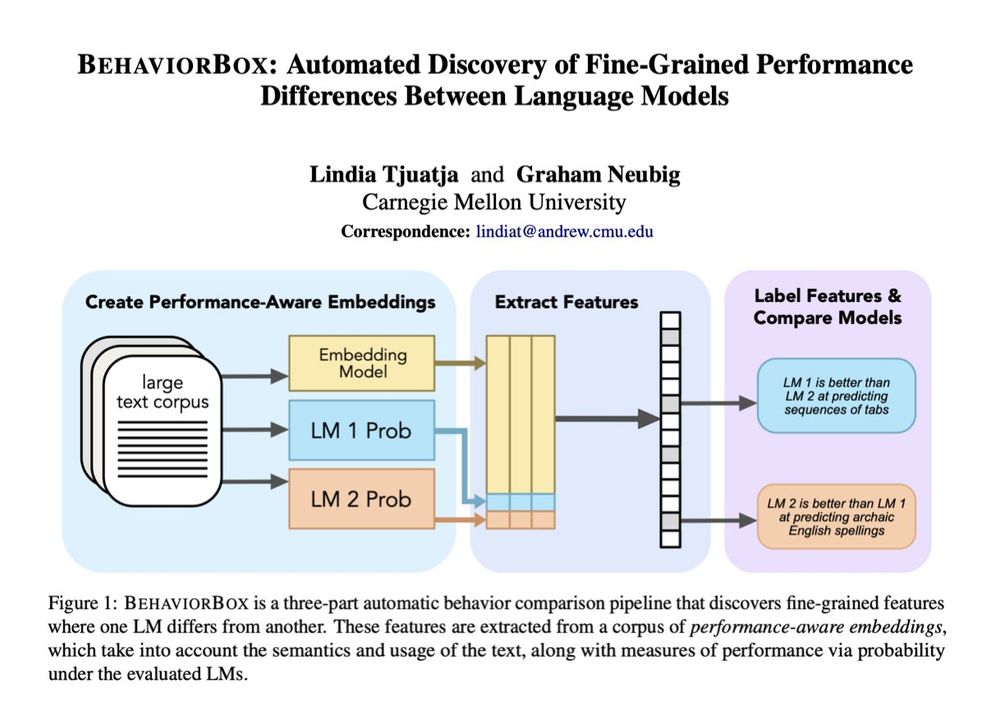

I'll also be presenting a poster for BehaviorBox on Wed @ Poster Session 4 (Hall 4/5, 10-11:30)!

🧵1/9

www.theatlantic.com/magazine/arc...

🛎️ in our #ACL2025 paper, we uncover fascinating trends about multilingual discourse representations!

joint work w/ @florian-eichin.com @barbaraplank.bsky.social @mhedderich.bsky.social

📄 arxiv.org/abs/2503.10515

🚀TL;DR: We introduce Situated-PRInciples (SPRI), a framework that automatically generates input-specific principles to align responses — with minimal human effort.

🧵

We reverse-engineered how LLaMA-3-70B-Instruct handles a belief-tracking task and found something surprising: it uses mechanisms strikingly similar to pointer variables in C programming!
