@vasilijee.bsky.social

Founder @cognee.bsky.social | cognee.ai

OSS: github.com/topoteretes/...

Community: discord.gg/m63hxKsp4p

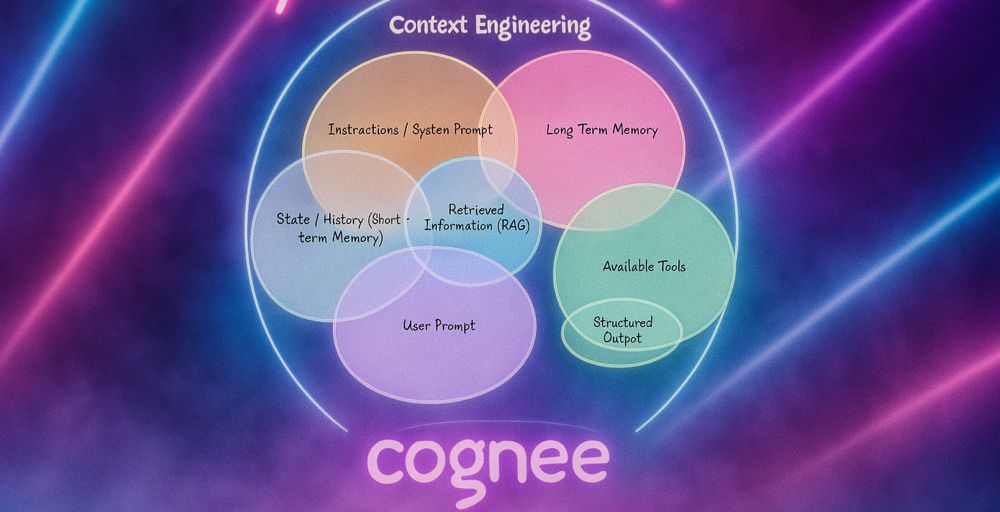

Here’s the distilled version of what everyone has been talking about: “context engineering”.

Straight talk on memory layers, GraphRAG and why prompt hacks alone won’t cut it.

Give it a read 👉🏼 dub.sh/context_engi...

Cognee - Context Engineering: Boost AI Memory for Reliable, Smart LLMs

Master context engineering and AI memory to craft personalized LLM outputs, reduce token costs, and future-proof your AI agents—read the full guide now!

dub.sh

July 23, 2025 at 9:04 AM

Hello, world—with context! 🧠

🚀 We are launching “Insights into AI Memory”—a monthly signal on the tech, tools & people teaching AI to remember.

Grab the initial post & subscribe 👉 aimemory.substack.com

June 23, 2025 at 3:09 PM

We’re days away from opening the cognee SaaS beta 🚀, where you’ll be able to bring your knowledge graphs & LLM workflows to life without the infra pain.

🔍 Built for everyone who cares about clean data, speed, and reproducibility.

Want in on day 1? Join the waitlist →

dub.sh/beta-saas-co...

Improve your AI infrastructure - AI memory engine

Cognee is an open source AI memory engine. Try it today to find hidden connections in your data and improve your AI infrastructure.

dub.sh

June 19, 2025 at 5:08 PM

Tired of asking brilliant questions and getting “meh” answers from your LLM?

We just shipped “The Art of Intelligent Retrieval”: a deep dive into how Cognee layers semantic search, vector DBs & knowledge-graph magic across specialized retrievers (RAG, Cypher, CoT, and more).

June 18, 2025 at 3:43 PM

Why will file-based AI memory power next-gen AI apps?

We break down how a simple folder in the cloud becomes the semantic backbone for agents & copilots. Let’s unpack it 🧵👇

June 12, 2025 at 2:48 PM

Woke up to 🚀 cognee hitting 5000 stars!

Thank you for the trust, feedback and code you pour in. Let’s keep building 🛠️

June 10, 2025 at 11:08 AM

Yesterday, we released our paper, "Optimizing the Interface Between Knowledge Graphs and LLMs for Complex Reasoning"

We developed a new tool for AI memory optimization that considerably improves AI memory accuracy for AI apps and agents. Let’s dive into the details of our work 📚

June 3, 2025 at 2:01 PM

We're ramping up our r/AIMemory community for broader discussions on AI memory, to keep the conversation going beyond cognee.

We'd love to see you share what you're working on, your thoughts and questions on AI memory, and any resources that would benefit the broader community.

Join the conversation at r/AIMemory. dub.sh/ai-memory

May 30, 2025 at 2:36 PM

Reposted

Join the conversation at r/AIMemory. dub.sh/ai-memory

May 30, 2025 at 2:29 PM

We just dropped a follow-up to last week’s graph-DB explainer—this one dives into vector databases and why they’re the workhorse behind semantic search.

Quick recap:

Graph DB → “How are things connected?”

Vector DB → “What *feels* like this thing?”

🙂

May 21, 2025 at 4:34 PM
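The “What *feels* like this thing?” question boils down to nearest-neighbour search over embedding vectors. Below is a minimal sketch using brute-force cosine similarity. It assumes a hypothetical `DOCS` table of hand-made 3-dimensional vectors. These are not real embeddings and not how cognee stores them.

```python
import math

# Hypothetical toy "embeddings": hand-made 3-d vectors for illustration only.
# Real embedding models output hundreds or thousands of dimensions.
DOCS: dict[str, list[float]] = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.85, 0.15, 0.05],
    "car": [0.0, 0.2, 0.95],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query: list[float], k: int = 2) -> list[tuple[str, float]]:
    """Brute-force k-nearest-neighbour search: score every stored vector, keep the top k."""
    scored = [(name, cosine(query, vec)) for name, vec in DOCS.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# A query vector near "cat" should rank "cat" and "kitten" above "car".
for name, score in most_similar([0.88, 0.12, 0.02]):
    print(f"{name}: {score:.3f}")
```

Production vector databases typically replace the brute-force loop with approximate indexes such as HNSW, so search stays fast across millions of vectors.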

🚨 4 Big Updates to cognee MCP Server (and what devs need to know):

If you're building with LLMs, graphs, or agentic applications - keep reading. 👇

May 19, 2025 at 1:50 PM

Relational tables thrive on set operations.

Document stores shine with nested data.

But when you need to ask “How are things connected?” you step into graph territory.

May 15, 2025 at 1:54 PM
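“How are things connected?” is usually a path query. Answering it over relational tables typically takes one self-join per hop or a recursive query, whereas graph stores expose traversal directly. Below is a minimal sketch that answers it with breadth-first search. It assumes a hypothetical toy graph held in memory as an adjacency list, with made-up entity names.

```python
from collections import deque
from typing import Optional

# Hypothetical toy knowledge graph: undirected "is connected to" edges.
EDGES = [
    ("Alice", "Acme Corp"),
    ("Acme Corp", "Berlin"),
    ("Bob", "Berlin"),
    ("Bob", "Graph Meetup"),
]


def build_graph(edges: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Turn an edge list into an adjacency list (each edge stored in both directions)."""
    graph: dict[str, set[str]] = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def shortest_path(graph: dict[str, set[str]], start: str, goal: str) -> Optional[list[str]]:
    """Breadth-first search: the shortest chain of nodes linking start to goal, or None."""
    if start not in graph or goal not in graph:
        return None
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbour in sorted(graph[node]):  # sorted for deterministic output
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append(path + [neighbour])
    return None


graph = build_graph(EDGES)
print(shortest_path(graph, "Alice", "Graph Meetup"))
# ['Alice', 'Acme Corp', 'Berlin', 'Bob', 'Graph Meetup']
```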

Got featured in @neo4j.com’s weekly wrap-up!

Follow the links below to find us chatting about GraphRAG + cognitive science (a small demo included)

+

fresh Neo4j news (Aura Analytics, NODES CFP, MCP hacks).

Don’t miss out.

May 14, 2025 at 8:47 AM

🧵 Insights from Data Council 2025, viewed through my lens:

May 12, 2025 at 7:38 AM

2k GitHub stars!

And, even more important, telemetry shows people running cognee in production!

May 11, 2025 at 3:52 PM

Curious if small Ollama-hosted LLMs (e.g., Phi-4, Gemma-3) can reliably create knowledge graphs?

Our 3-minute video answers exactly that:

- small local model limitations

- tips (schema optimization, YAML/HTML requests)

- need for larger models (Llama3.3, 14B)

watch here: youtu.be/P2ZaSnnl7z0

Compare Small Ollama Models in Generating Knowledge Graphs Using cognee

YouTube video by cognee

youtu.be

April 28, 2025 at 2:04 PM
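When small local models extract graphs, a practical guard is to check their output against the schema before it reaches the graph. Below is a minimal sketch. It assumes the model was asked for a JSON list of `source`/`relation`/`target` objects. JSON is used here instead of YAML so the example needs only the standard library. `ALLOWED_RELATIONS` and `parse_triples` are hypothetical names and are not part of cognee.

```python
import json

# Hypothetical schema: the only relation types accepted from the model.
ALLOWED_RELATIONS = {"works_at", "located_in", "founded"}


def parse_triples(raw: str) -> tuple[list[tuple[str, str, str]], list[str]]:
    """Parse model output expected as a JSON list of {"source", "relation", "target"} objects.

    Returns (valid_triples, errors) so malformed output can be logged or retried
    instead of silently polluting the graph.
    """
    try:
        items = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [], [f"not valid JSON: {exc.msg}"]
    if not isinstance(items, list):
        return [], ["expected a JSON list"]
    valid: list[tuple[str, str, str]] = []
    errors: list[str] = []
    for i, item in enumerate(items):
        if not isinstance(item, dict):
            errors.append(f"item {i}: not an object")
            continue
        src, rel, dst = item.get("source"), item.get("relation"), item.get("target")
        if not all(isinstance(v, str) and v.strip() for v in (src, rel, dst)):
            errors.append(f"item {i}: missing or empty field")
        elif rel not in ALLOWED_RELATIONS:
            errors.append(f"item {i}: relation {rel!r} not in schema")
        else:
            valid.append((src, rel, dst))
    return valid, errors


# Example: one triple fits the schema, one uses an unknown relation.
sample = (
    '[{"source": "Ada", "relation": "works_at", "target": "Acme"},'
    ' {"source": "Acme", "relation": "likes", "target": "Berlin"}]'
)
triples, problems = parse_triples(sample)
print(triples)   # [('Ada', 'works_at', 'Acme')]
print(problems)  # ["item 1: relation 'likes' not in schema"]
```

Rejected items can be sent back to the model for a retry. Alternatively, the schema can be trimmed to fewer relation types, which is one plausible reading of the “schema optimization” tip.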

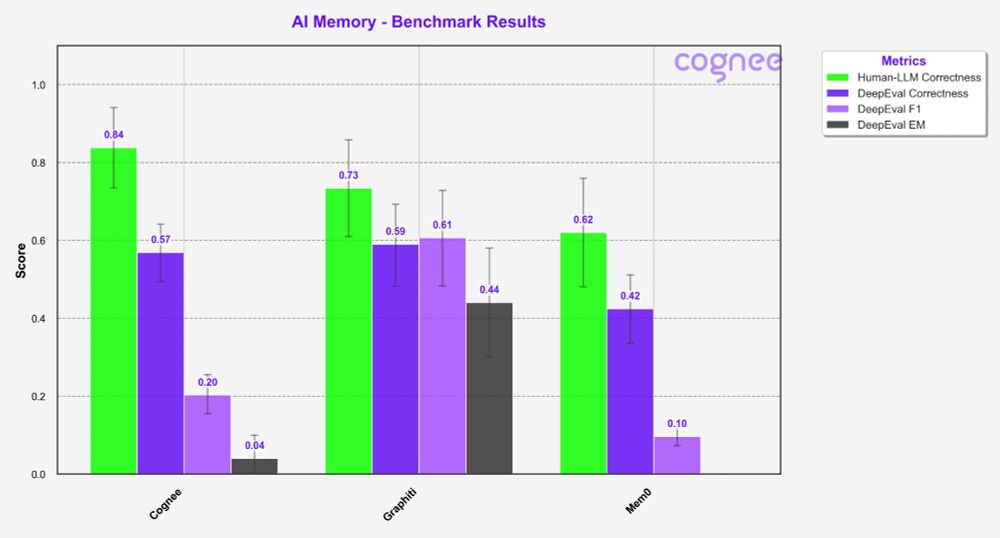

We benchmarked cognee, Mem0 and Zep/Graphiti using HotPotQA! Cognee topped the charts, boosted further by Dreamify, our optimized pipeline 🚀. Graphiti shared their scores with us and we'll verify them soon!

Dive into the details 👇🏼

April 15, 2025 at 11:41 AM

Join us at @pyconde.bsky.social in Darmstadt - this is your opportunity to dive into open-source, network with passionate devs, and shape the future of AI and Python ecosystems!

We are sprinting with @cognee.bsky.social alongside 9 other awesome open-source projects.

April 14, 2025 at 9:55 AM

TL;DR: we launched on Product Hunt!

After months packed with community-driven features, our AI memory tool @cognee.bsky.social has reached new heights. Finally, AI agents meet the memory they deserve - structured, accurate, and reliable - in 5 lines of code.

April 11, 2025 at 7:30 AM

It was our first time presenting our 89.4% accuracy on HotPotQA at a meetup, and it was great.

We showed up as almost the whole @cognee.bsky.social team, answered great questions, and met amazing people.

For the evals, go and check our repo 👇

April 10, 2025 at 10:34 AM

Exciting news, friends!

Our latest @cognee.bsky.social update is live, featuring:

• Ontology support

• Direct relational DB imports

• Dreamify, our shiny new hyperparameter tuning system

• Big performance boosts

Plus, Pinki, our dog, has finally conquered the indoor puke challenge.

April 4, 2025 at 9:17 AM

Give your LLM your rules?

Your definition of a session, GBV or the details of your accounting practices.

Yes.

With ontologies!

Connecting related concepts across different documents is often necessary, but traditional search methods can’t help here because they treat each paper as an isolated document.

April 1, 2025 at 11:32 AM
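A toy ontology shows how cross-document linking works. Plain keyword search finds no overlap between a note about a “bill” and a note about a “receipt”, but an is-a hierarchy places both under a shared parent concept. Below is a minimal sketch. It assumes a hypothetical hand-written term map and hierarchy (not cognee's ontology format) and uses naive substring matching.

```python
# Hypothetical mini-ontology: surface terms map to canonical concepts,
# and each concept may have a broader parent concept ("is-a").
TERM_TO_CONCEPT = {
    "invoice": "Invoice",
    "bill": "Invoice",
    "receipt": "Receipt",
    "user session": "Session",
    "visit": "Session",
}
PARENT = {"Invoice": "AccountingDocument", "Receipt": "AccountingDocument"}


def concepts_in(text: str) -> set[str]:
    """Collect each matched term's concept plus all its ancestors.

    Naive substring match: "visited" would also match "visit".
    """
    found: set[str] = set()
    lowered = text.lower()
    for term, concept in TERM_TO_CONCEPT.items():
        if term in lowered:
            current = concept
            while current is not None:  # walk up the is-a chain
                found.add(current)
                current = PARENT.get(current)
    return found


def link_documents(docs: dict[str, str]) -> dict[tuple[str, str], set[str]]:
    """Return document pairs that share at least one concept, with the shared concepts."""
    tagged = {name: concepts_in(text) for name, text in docs.items()}
    names = sorted(tagged)
    links: dict[tuple[str, str], set[str]] = {}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            shared = tagged[a] & tagged[b]
            if shared:
                links[(a, b)] = shared
    return links


docs = {
    "doc_a": "The bill was paid late.",
    "doc_b": "Please attach the receipt.",
    "doc_c": "Each visit lasts 30 minutes.",
}
print(link_documents(docs))  # {('doc_a', 'doc_b'): {'AccountingDocument'}}
```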

Short read, big insights 👇

We took knowledge graphs up a notch with formal ontologies.

In the blog below, you’ll find how we at @cognee.bsky.social merge domain expertise + graph structures for more detailed insights. Check it out.

March 31, 2025 at 9:33 AM

How do you run your own local AI stack?

In 4 minutes, you can set up Ollama with @cognee.bsky.social to go local with Phi-4 and Mistral.

Going local mostly means:

- Better privacy

- Reduced costs

- More control & experimentation

But... beware:

March 26, 2025 at 8:47 AM
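Before wiring a local model into cognee, a quick sanity check is to query it directly through Ollama's REST API. Below is a minimal sketch that uses only the Python standard library. It assumes Ollama's default endpoint `http://localhost:11434/api/generate` and the `phi4` or `mistral` model tags. It talks to Ollama directly and does not show cognee's own configuration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to a locally running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]  # with stream=False, the full answer arrives in one JSON object
```

With `ollama serve` running and the model pulled (e.g. `ollama pull mistral`), calling `generate("mistral", "Summarize GraphRAG in one sentence.")` should return the model's text. Setting `stream` to `False` gives up incremental tokens in exchange for a single JSON reply, which keeps parsing simple.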
