https://tomsilver.github.io/

I like using learning to "fail fast," with guarantees. This is important for TAMP, where there are other motion planning (MP) problems to try next.

PDF: www.roboticsproceedings.org/rss17/p064.pdf

Impressive results on a difficult and subtle problem, with a nice combo of planning + learning.

PDF: arxiv.org/abs/2202.01426

I like this use of planning to fill in the gaps between subgoals that are directly programmed by end users.

PDF: arxiv.org/abs/2403.13988

DreamCoder-like robot skill learning. Refactoring helps!

PDF: arxiv.org/abs/2406.18746

A creative synthesis of control theory and search. I like using the Gramian to branch.

PDF: arxiv.org/abs/2412.11270

This is some Tony Stark level stuff! XR + robots = future.

Website: mkari.de/reality-prom...

PDF: mkari.de/reality-prom...

This is one of those papers that I return to over the years and appreciate more every time. Chock full of ideas.

PDF: arxiv.org/abs/1807.09962

This and others have convinced me that I need to learn Koopman operator theory! It offers another perspective on abstraction learning.

PDF: arxiv.org/abs/2303.13446

A lesser-known classic that is overdue for a revival. Fans of POMDPs will enjoy it.

PDF: web.eecs.umich.edu/~baveja/Pape...

Nice work on using fast local simulators to plan & learn in large partially observed worlds.

PDF: arxiv.org/abs/2202.01534

I always enjoy a surprising connection between one problem (COIL) and another (UFL). And I always like work by Shivam Vats!

PDF: arxiv.org/abs/2505.00490

I'm often asked: how might we combine ideas from hierarchical planning and VLAs? This is a good start!

PDF: arxiv.org/abs/2502.19417

A very clear introduction to, and improvement of, RTDP (Real-Time Dynamic Programming), an online MDP planner that we should all have in our toolkits.

PDF: ftp.cs.ucla.edu/pub/stat_ser...

A very clear introduction to and improvement of RTDP, an online MDP planner that we should all have in our toolkits.

PDF: ftp.cs.ucla.edu/pub/stat_ser...

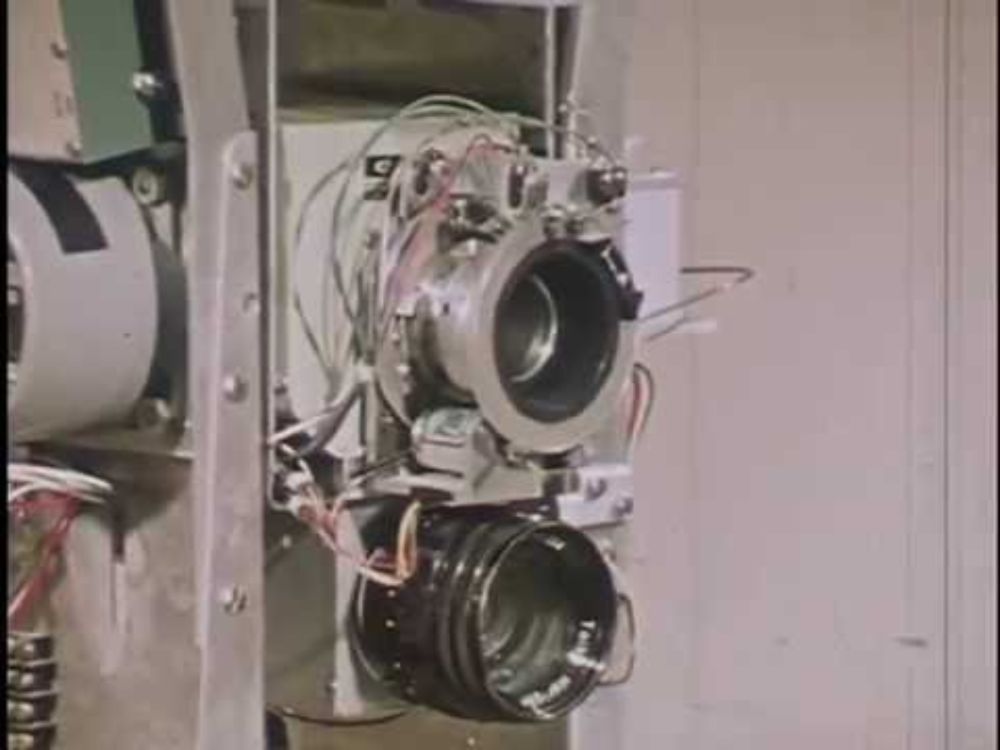

Classic early work on learning & planning from the team behind STRIPS, A* search, and Shakey the robot (www.youtube.com/watch?v=GmU7...).

PDF: stacks.stanford.edu/file/druid:c...

My favorite part is the clear running example in 2D (Figs. 2 and 4). I want examples like this in my papers!

PDF: arxiv.org/abs/2409.15610

See also other recent papers by the same group, which show exciting progress in programmatic RL with applications to robotics.

PDF: herowanzhu.github.io/roboscribe.pdf

I especially like the focus on *representations* for supporting learning and planning.

PDF: proceedings.mlr.press/v100/kim20a/...

My favorite underrated paper in hierarchical RL. Unpacks how options can help *or hurt* learning performance. Fun writing.

PDF: www.ifaamas.org/Proceedings/...

If you only read a few classical planning papers, this should be one! Illuminating and practically useful.

PDF: www-i6.informatik.rwth-aachen.de/~hector.geff...

Metareasoning is increasingly important as we continue to make progress on "reasoning."

PDF: ojs.aaai.org/index.php/AA...

Fans of benchmarks like ARC will enjoy the simple mechanics and the difficult reasoning required.

PDF: arxiv.org/abs/2301.10289

Addresses the metareasoning challenge that is core to TAMP. Toussaint is always worth a read.

PDF: www.user.tu-berlin.de/mtoussai/24-...

If you're doing RL in sim, why not use the sim to its full potential? Reset to any state! (gym.Env.reset() is not all we need.)

PDF: arxiv.org/abs/2404.15417

A highly original combination of learning + planning that is still underrated (despite winning a CoRL award!).

PDF: proceedings.mlr.press/v87/stein18a...