Prev. PhD @Brown, @Google, @GoPro. Crêpe lover.

📍 Boston | 🔗 thomasfel.me

Continuing our interpretation of DINOv2, the second part of our study concerns the *geometry of concepts* and the synthesis of our findings toward a new representational *phenomenology*:

the Minkowski Representation Hypothesis

Our latest Deeper Learning blog post is an #interpretability deep dive into one of today’s leading vision foundation models: DINOv2.

📖Read now: bit.ly/4nNfq8D

Stay tuned — Part 2 coming soon.

#AI #VLMs #DINOv2

In new work yesterday, @arnabsensharma.bsky.social et al. identify a data type for *predicates*.

bsky.app/profile/arn...

Topics of interest include pragmatics, metacognition, reasoning, & interpretability (in humans and AI).

Check out JHU's mentoring program (due 11/15) for help with your SoP 👇

Our PhD students also run an application mentoring program for prospective students. Mentoring requests due November 15.

tinyurl.com/2nrn4jf9

Algorithmic Primitives and Compositional Geometry of Reasoning in Language Models

www.arxiv.org/pdf/2510.15987

🧵1/n

"From Prediction to Understanding: Will AI Foundation Models Transform Brain Science?"

AI foundation models are coming to neuroscience—if scaling laws hold, predictive power will be unprecedented.

But is that enough?

Thread 🧵👇

𝗔𝗻 𝗶𝗻𝘁𝗲𝗿𝗽𝗿𝗲𝘁𝗮𝗯𝗶𝗹𝗶𝘁𝘆 𝗱𝗲𝗲𝗽 𝗱𝗶𝘃𝗲 𝗶𝗻𝘁𝗼 𝗗𝗜𝗡𝗢𝘃𝟮, one of vision’s most important foundation models.

Today is Part I. Buckle up, we're exploring some of its most charming features. :)

TL;DR: train a text2image model from scratch on ImageNet only and beat SDXL.

Paper, code, data available! Reproducible science FTW!

🧵👇

📜 arxiv.org/abs/2502.21318

💻 github.com/lucasdegeorg...

💽 huggingface.co/arijitghosh/...

(And if you’re at COLM, come hear about it on Tuesday, in sessions Spotlight 2 & Poster 2!)

How do #LLMs’ inner features change as they train? Using #crosscoders + a new causal metric, we map when features appear, strengthen, or fade across checkpoints—opening a new lens on training dynamics beyond loss curves & benchmarks.

#interpretability

2 papers find:

There are phase transitions where features emerge and stay throughout learning

🤖📈🧠

alphaxiv.org/pdf/2509.17196

@amuuueller.bsky.social @abosselut.bsky.social

alphaxiv.org/abs/2509.05291

From observed phenomena in representations (conditional orthogonality) we derive a natural instantiation.

And it turns out to be an old friend: Matching Pursuit!

📄 arxiv.org/abs/2506.03093

See you in San Diego,

@neuripsconf.bsky.social

🎉

#interpretability

www.biorxiv.org/content/10.1...

How do #dopamine neurons perform the key calculations in reinforcement #learning?

Read on to find out more! 🧵

with @chloesu07.bsky.social, @thomasfel.bsky.social, @shamkakade.bsky.social and Stephanie Gil

arxiv.org/abs/2504.11695

SAEs reveal that VLM embedding spaces aren’t just "image vs. text" cones.

They contain stable conceptual directions, some forming surprising bridges across modalities.

arxiv.org/abs/2504.11695

Demo 👉 vlm-concept-visualization.com

How can we interpret the algorithms and representations underlying complex behavior in deep learning models?

🌐 coginterp.github.io/neurips2025/

1/4

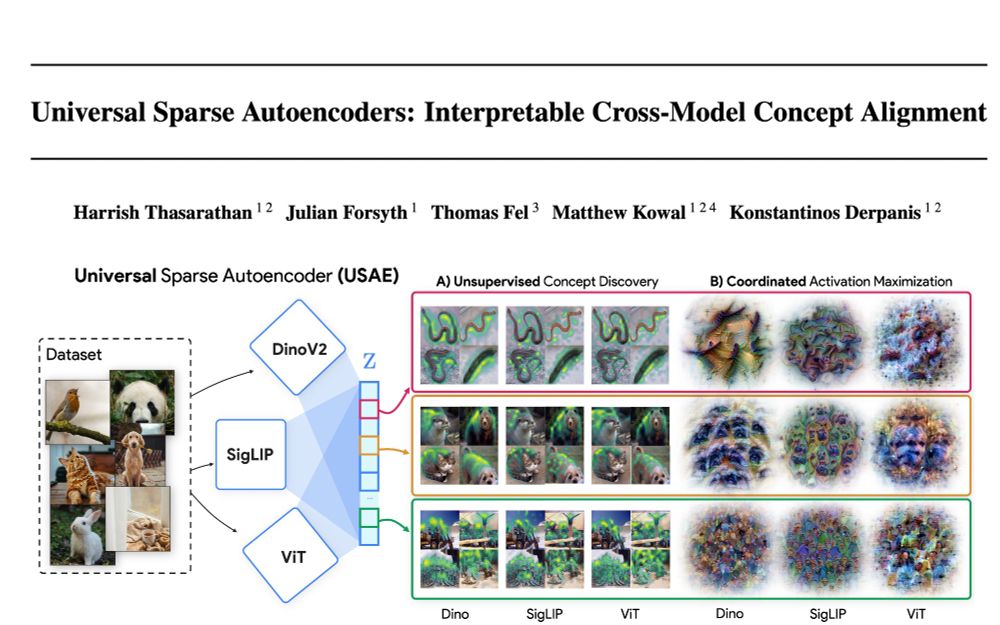

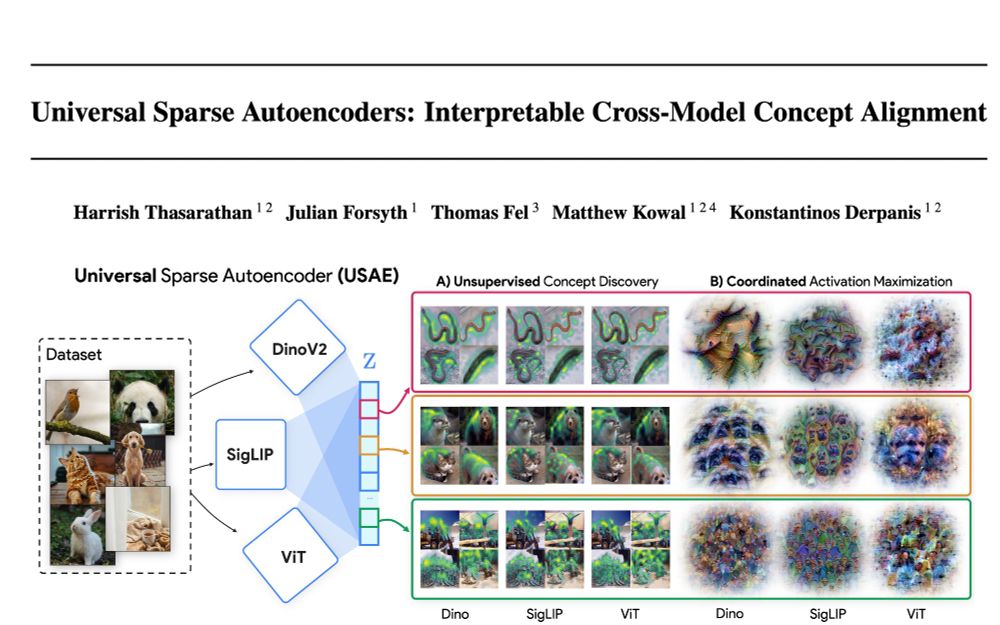

My first major conference paper with my wonderful collaborators and friends @matthewkowal.bsky.social @thomasfel.bsky.social

@Julian_Forsyth

@csprofkgd.bsky.social

Working with y'all is the best 🥹

Preprint ⬇️!!

arxiv.org/abs/2502.03714

(1/9)

HUGE shoutout to Harry (1st PhD paper, in 1st year), Julian (1st ever, done as an undergrad), Thomas and Matt!

@hthasarathan.bsky.social @thomasfel.bsky.social @matthewkowal.bsky.social

Hot off the arXiv! 🦬 "Appa: Bending Weather Dynamics with Latent Diffusion Models for Global Data Assimilation" 🌍 Appa is our novel 1.5B-parameter probabilistic weather model that unifies reanalysis, filtering, and forecasting in a single framework. A thread 🧵
