Tejas Srinivasan

@tejassrinivasan.bsky.social

CS PhD student at USC. Former research intern at AI2 Mosaic. Interested in human-AI interaction and language grounding.

Pinned

People are increasingly relying on AI assistance, but *how* they use AI advice is influenced by their trust in the AI, which the AI is typically blind to. What if they weren’t?

We show that adapting AI assistants' behavior to user trust mitigates under- and over-reliance!

arxiv.org/abs/2502.13321

🚨Reminder: Submissions for the ORIGen workshop at COLM are due today!!! 🚨

CfP: origen-workshop.github.io/submissions/

OpenReview submission page: openreview.net/group?id=col...

LLMs are all around us, but how can we foster reliable and accountable interactions with them??

To discuss these problems, we will host the first ORIGen workshop at @colmweb.org! Submissions welcome from NLP, HCI, CogSci, and anything human-centered, due June 20 :)

origen-workshop.github.io

ORIGen 2025

Workshop on Optimal Reliance and Accountability in Interactions with Generative LMs

origen-workshop.github.io

June 27, 2025 at 7:54 PM

Reposted by Tejas Srinivasan

This month, @jessezhang.bsky.social completed his PhD defense and signed to start a postdoc with @abhishekunique7.bsky.social at UW! Keep an eye on his journey :) www.jessezhang.net

I'm sad to lose one of my sinistral students but glad to produce another Dr. Jesse 😛

May 28, 2025 at 5:04 PM

LLMs are all around us, but how can we foster reliable and accountable interactions with them??

To discuss these problems, we will host the first ORIGen workshop at @colmweb.org! Submissions welcome from NLP, HCI, CogSci, and anything human-centered, due June 20 :)

origen-workshop.github.io

ORIGen 2025

Workshop on Optimal Reliance and Accountability in Interactions with Generative LMs

origen-workshop.github.io

May 16, 2025 at 3:35 PM

This! So much this!!!

AI can do so much more. Instead of seeing aging as a problem to sweep under the rug, we should be designing AI to facilitate meaningful connections for all.

May 1, 2025 at 4:29 PM

Reposted by Tejas Srinivasan

Nothing says “I love you” like outsourcing your parents’ phone calls to a chatbot. 🙃 Social isolation in aging is real. Connection isn’t something you can automate.

Why does everyone think we can just throw a chatbot at every problem?

www.404media.co/i-tested-the...

I Tested The AI That Calls Your Elderly Parents If You Can't Be Bothered

inTouch says on its website "Busy life? You can’t call your parent every day—but we can." My own mum said she would feel terrible if her child used it.

www.404media.co

April 29, 2025 at 7:42 PM

Reposted by Tejas Srinivasan

Arresting and threatening to deport students because of their participation in political protest is the kind of action one ordinarily associates with the world’s most repressive regimes. It’s genuinely shocking that this appears to be what’s going on right here. 1/

March 9, 2025 at 11:55 PM

Reposted by Tejas Srinivasan

I worry that concerns with "superintelligence" are being blurred with concerns around *ceding human control*.

A "SuperDumb" system can create mutually assured destruction. What it takes is allowing AI systems to execute code autonomously in military operations.

AI real talk. We (humanity) are moving full speed ahead at building AI agents for war that can create a runaway missile crisis of mutually assured destruction globally.

Is the option of not allowing AI agents to deploy missiles already off the table, or is that still up for discussion?

Scale AI announces multimillion-dollar defense deal, a major step in U.S. military automation

Spearheaded by the Defense Innovation Unit, the Thunderforge program will work with Anduril, Microsoft and others to develop and deploy AI agents.

www.cnbc.com

March 6, 2025 at 7:46 PM

Reposted by Tejas Srinivasan

"The first guest on Gavin Newsom's podcast was Charlie Kirk" is more than enough for me to say "absolutely not" to any suggestion Newsom play any role in the future of the Democratic Party. People like him are the past, the failures, the ones who got us here.

Gavin Newsom would have put down the Bell Riots with tanks and napalm I can tell you that much

March 6, 2025 at 2:24 AM

People are increasingly relying on AI assistance, but *how* they use AI advice is influenced by their trust in the AI, which the AI is typically blind to. What if they weren’t?

We show that adapting AI assistants' behavior to user trust mitigates under- and over-reliance!

arxiv.org/abs/2502.13321

February 27, 2025 at 5:56 PM

Reposted by Tejas Srinivasan

Stand by this: www.politico.com/newsletters/...

February 19, 2025 at 4:42 PM

Reposted by Tejas Srinivasan

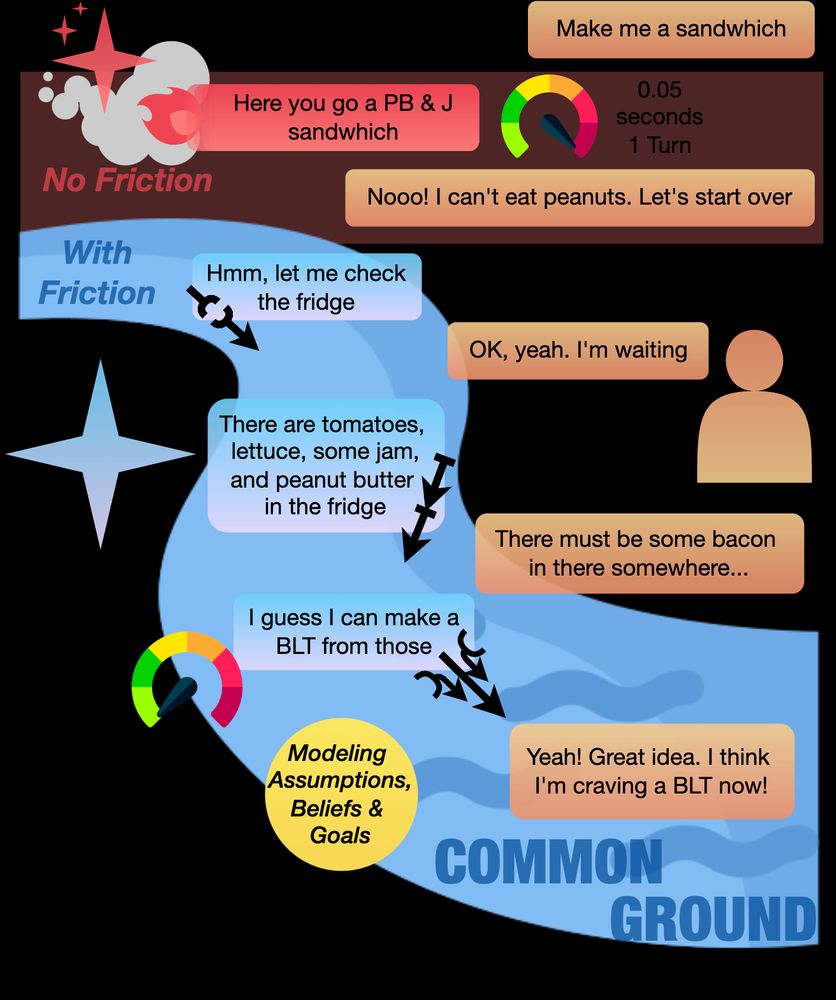

‼️ Ever wish LLMs would just... slow down for a second?

In our latest work, "Better Slow than Sorry: Introducing Positive Friction for Reliable Dialogue Systems", we delve into how strategic delays can enhance dialogue systems.

Paper Website: merterm.github.io/positive-fri...

February 8, 2025 at 10:42 PM

Reposted by Tejas Srinivasan

“Toward the end of the November dinner, Trump raised the matter of the lawsuit, the people said. The president signaled that the litigation had to be resolved before Zuckerberg could be “brought into the tent,” one of the people said.”

They’re in the tent now. Cowards.

Meta has agreed to pay Trump $25 million in damages to settle a lawsuit alleging that removing Trump from the platform was illegal. Message was that the money needed to be paid before Meta could be "in the tent." Never got around to filing an amended complaint. www.wsj.com/us-news/law/...

Exclusive | Trump Signs Agreement Calling for Meta to Pay $25 Million to Settle Suit

The president had sued the social-media company after his accounts were suspended.

www.wsj.com

January 29, 2025 at 9:59 PM

Reposted by Tejas Srinivasan

What the hell are we doing?

December 4, 2024 at 2:23 PM
