Stéphane Thiell

@sthiell.bsky.social


I do HPC storage at Stanford and always monitor channel 16 ⛵


Stéphane Thiell

@sthiell.bsky.social

· Mar 15

Reposted by Stéphane Thiell

kilian

@kiliantw.bsky.social

· Feb 8

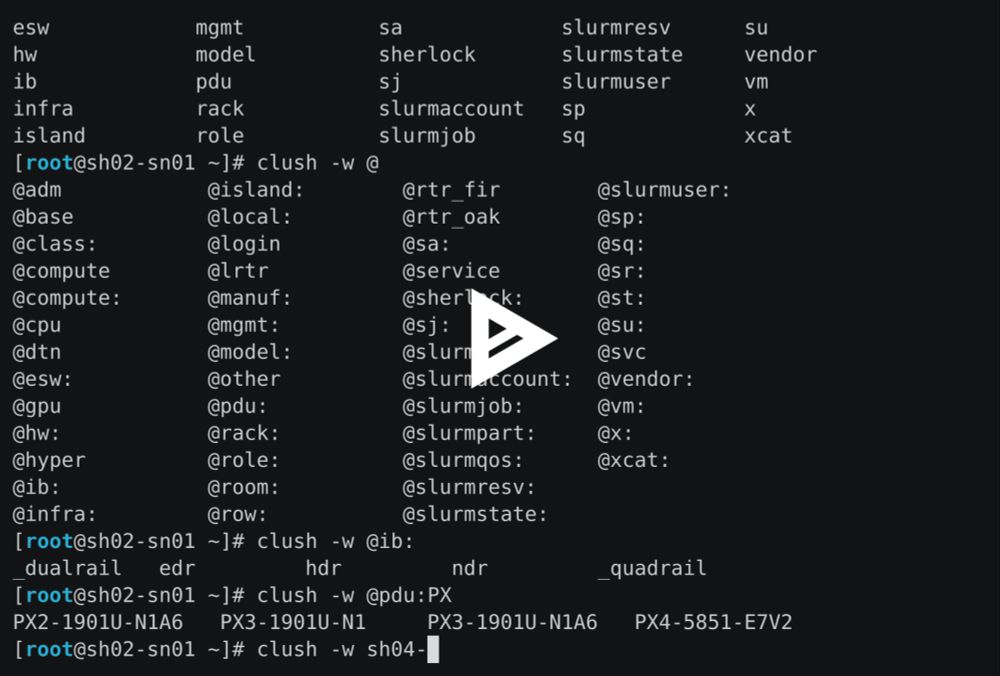

Sherlock goes full flash - Sherlock

What could be more frustrating than anxiously waiting for your computing job to finish? Slow I/O that makes it take even longer is certainly high on the list. But not anymore! Fir, Sherlock’s scratch file system, has just undergone a major

news.sherlock.stanford.edu

Stéphane Thiell

@sthiell.bsky.social

· Oct 28