Nick Stracke

@rmsnorm.bsky.social

Two great works on how we can manipulate style for generative modeling by PiMa!

I’m thrilled to share that I’ll present two first-authored papers at #ICCV2025 🌺 in Honolulu together with @mgui7.bsky.social! 🏝️

(Thread 🧵👇)

October 18, 2025 at 8:37 AM

Reposted by Nick Stracke

🤔 What happens when you poke a scene — and your model has to predict how the world moves in response?

We built the Flow Poke Transformer (FPT) to model multi-modal scene dynamics from sparse interactions.

It learns to predict the 𝘥𝘪𝘴𝘵𝘳𝘪𝘣𝘶𝘵𝘪𝘰𝘯 of motion itself 🧵👇

October 15, 2025 at 1:56 AM

Reposted by Nick Stracke

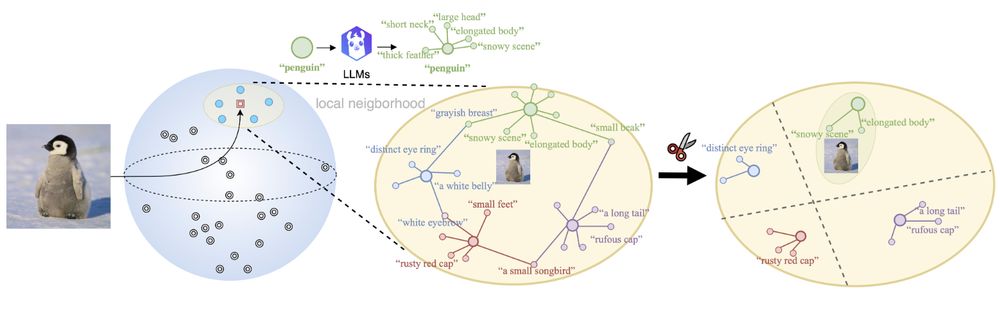

🤔 When combining vision-language models (VLMs) with large language models (LLMs), do VLMs benefit from additional genuine semantics or artificial augmentations of the text for downstream tasks?

🤨 Interested? Check out our latest work at #AAAI25:

💻 Code and 📝 Paper at: github.com/CompVis/DisCLIP

🧵👇

January 8, 2025 at 3:54 PM

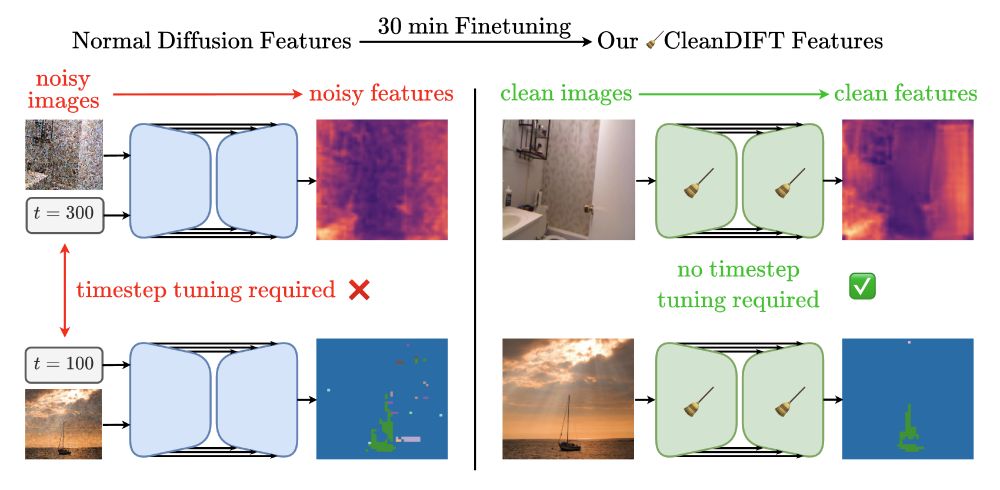

🤔 Why do we extract diffusion features from noisy images? Isn’t that destroying information?

Yes, it is - but we found a way to do better. 🚀

Here’s how we unlock better features, no noise, no hassle.

📝 Project Page: compvis.github.io/cleandift

💻 Code: github.com/CompVis/clea...

🧵👇

December 4, 2024 at 11:31 PM

Reposted by Nick Stracke

Hi, just sharing an updated version of the PyTorch 2 Internals slides: drive.google.com/file/d/18YZV.... Content: basics, JIT, Dynamo, Inductor, export path, and ExecuTorch. This is focused on internals, so you will need a bit of C/C++. I also show how you can export and run a model on a Pixel Watch.

November 19, 2024 at 11:05 AM

Reposted by Nick Stracke

While we're starting up over here, I suppose it's okay to reshare some old content, right?

Here's my lecture from the EEML 2024 summer school in Novi Sad🇷🇸, where I tried to give an intuitive introduction to diffusion models: youtu.be/9BHQvQlsVdE

Check out other lectures on their channel as well!

[EEML'24] Sander Dieleman - Generative modelling through iterative refinement

YouTube video by EEML Community

November 19, 2024 at 9:57 AM
