CLSP@JHU

Shit's become moral support.

(Is it wrong that I respect O1 for this?)

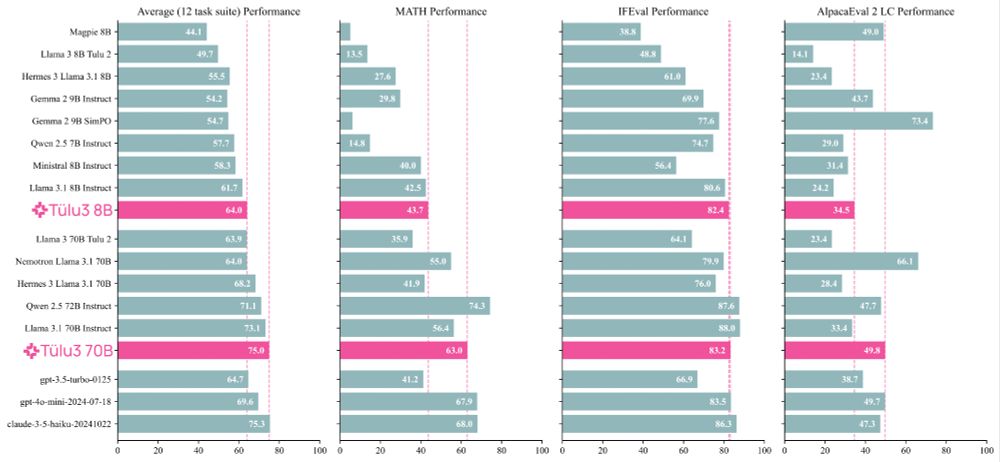

We invented new methods for fine-tuning language models with RL and built upon best practices to scale synthetic instruction and preference data.

Demo, GitHub, paper, and models 👇