The result of months of work with the goal of advancing Multilingual LLM evaluation.

Built together with the community and amazing collaborators at Cohere4AI, MILA, MIT, and many more.

Check out this lunar rover transforming into a cinematic moonscape with Earth hanging majestically in the sky! 🚙🌎 #AIart #DigitalArt

Try it out: huggingface.co/spaces/Yuans...

I put together a database of known public technical writeups with summaries of the key technical features.

Here's a recap; find the text-readable version here: huggingface.co/posts/merve/...

https://buff.ly/3Zjmako

Our Community Computer Vision Course Repo just reached 500 stars on GitHub: github.com/johko/comput... 🤩

I'm really proud of all the amazing content people from the community have contributed here and that they still keep on adding very cool and helpful material 💪

Kudos to @fffiloni.bsky.social

Try it out: huggingface.co/spaces/fffil...

1️⃣ Understanding tool calling with Llama 3.2 🔧

2️⃣ Using Text Generation Inference (TGI) with Llama models 🦙

(links in the next post)

• Tiny 1.7B LLM running at 88 tokens / second ⚡

• Powered by MLC/WebLLM on WebGPU 🔥

• JSON Structured Generation entirely in the browser 🤏

It's super interesting to see the reasoning steps, and with really impressive results too. Feel free to try it out here: huggingface.co/chat/models/...

I'd love to get your feedback on it!

Powered by MLC Web-LLM & XGrammar ⚡

Define a JSON schema, input free text, and get structured data right in your browser - profit!!
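To make the schema-in, structured-data-out idea concrete, here is a minimal Python sketch of the contract. It is not the WebLLM or XGrammar API: the schema, the field names, and the `conforms` helper are all made up for illustration, and it only checks a finished string against a flat schema, whereas grammar-constrained generation enforces the schema while the model decodes.

```python
import json

# Hypothetical schema for illustration; not taken from the original post.
SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "age": {"type": "integer"},
    },
    "required": ["name", "age"],
}

# Maps the JSON Schema type names used above to Python types.
_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def conforms(raw: str, schema: dict) -> bool:
    """Return True if `raw` parses as a JSON object matching the flat schema."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    # Every required key must be present.
    if any(key not in data for key in schema.get("required", [])):
        return False
    for key, value in data.items():
        spec = schema["properties"].get(key)
        if spec is None:
            return False  # unknown field
        # bool is a subclass of int in Python, so reject it for non-boolean fields.
        if isinstance(value, bool) and spec["type"] != "boolean":
            return False
        if not isinstance(value, _TYPES[spec["type"]]):
            return False
    return True
```

A model output such as `{"name": "Ada", "age": 36}` passes this check, while free text or an object with a missing or mistyped field does not.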

I am no longer willing to engage in this conversation.

1/ What happened yesterday with my colleague's dataset was inappropriate and unethical. It has been taken down, and all the data has been deleted. I am truly sorry if this has made Bluesky users feel unsafe; that was never the goal.

blog.neurips.cc/2024/11/27/a...

So people bullied him & posted death threats.

He took it down.

Nice one, folks.

I'll keep hoping for a collaborative and kind space where empathy rules rather than polarization and violence❤️

clearsky.app/osanseviero....

There was a mistake, a quick follow-up to mitigate it, and an apology. I worked with Daniel for years, and he is one of the people most concerned with the ethical implications of AI. Some replies are at a Reddit-toxic level. We need empathy.

📊 1M public posts from Bluesky's firehose API

🔍 Includes text, metadata, and language predictions

🔬 Perfect to experiment with using ML for Bluesky 🤗

huggingface.co/datasets/blu...

US: apply.workable.com/huggingface/...

EMEA: apply.workable.com/huggingface/...

If you want to learn more about it and how to use these models, check out the freshly released book "Hands-On Generative AI", written with @pcuenq.hf.co @apolinario.bsky.social and Jonathan

www.oreilly.com/library/view...

Simply the best fully open models yet.

Really proud of the work & the amazing team at

@ai2.bsky.social

It's a great, small, and fully open VLM that I'm really excited about for fine-tuning and on-device use cases 💻

It also comes with 0-day MLX support via mlx-vlm; here it is running at > 80 tok/s on my M1 Max 🤯

> Multilingual - English, Chinese, Korean & Japanese

> Cross platform inference w/ llama.cpp

> Trained on 5 Billion audio tokens

> Qwen 2.5 0.5B LLM backbone

> Trained via HF GPU grants

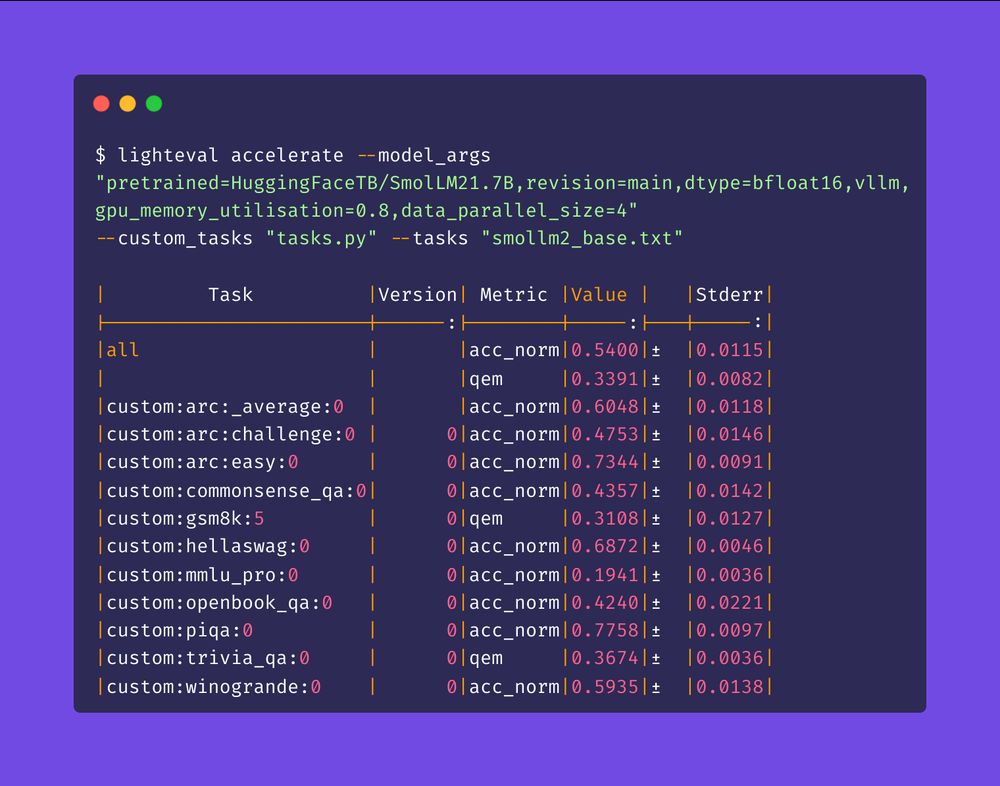

* any dataset on the 🤗 Hub can become an eval task in a few lines of code: customize the prompt, metrics, parsing, few-shots, everything!

* model- and data-parallel inference

* auto batching with the new vLLM backend
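As a rough picture of what the first bullet means by customizing the prompt, parsing, and metrics, here is a small self-contained Python sketch. It does not use any real evaluation library: `EvalTask`, `evaluate`, the in-memory rows, and the stub model are all hypothetical stand-ins for a Hub dataset and an actual model backend.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalTask:
    """Hypothetical task definition: how to prompt, parse, and score one row."""
    prompt_fn: Callable[[dict], str]        # dataset row -> prompt text
    parse_fn: Callable[[str], str]          # raw model output -> prediction
    metric_fn: Callable[[str, str], float]  # (prediction, gold) -> score


def evaluate(task: EvalTask, rows: list, model: Callable[[str], str]) -> float:
    """Run the model on every row and return the mean score (0.0 if no rows)."""
    scores = []
    for row in rows:
        prediction = task.parse_fn(model(task.prompt_fn(row)))
        scores.append(task.metric_fn(prediction, row["answer"]))
    return sum(scores) / len(scores) if scores else 0.0


# Toy rows standing in for a dataset; the column names are assumptions.
rows = [
    {"question": "2 + 2", "answer": "4"},
    {"question": "3 + 5", "answer": "8"},
]

task = EvalTask(
    prompt_fn=lambda row: f"Q: {row['question']}\nA:",
    parse_fn=lambda text: text.strip().split()[0],   # keep the first token
    metric_fn=lambda pred, gold: float(pred == gold),  # exact match
)


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call; always gives the same answer."""
    return " 4 is the answer"
```

Calling `evaluate(task, rows, stub_model)` scores the stub against both rows with exact match; swapping in a different `prompt_fn` or `metric_fn` changes the task without touching the loop.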

- Building custom feeds using ML

- Creating dashboards for data exploration

- Developing custom models for Bluesky

To gather @bsky.app resources on @huggingface.bsky.social, I've established a community org 🤗 huggingface.co/bluesky-comm...
