Maxwell Ramstead

@mjdramstead.bsky.social

1.3K followers

150 following

120 posts

Cofounder @noumenal-labs.bsky.social. Honorary Fellow at the UCL Queen Square Institute of Neurology. Free energy principle, active inference, Bayesian mechanics, artificial intelligence, phenomenology

Posts

Maxwell Ramstead

@mjdramstead.bsky.social

· Sep 15

Dynamic Markov Blanket Detection for Macroscopic Physics Discovery

The free energy principle (FEP), along with the associated constructs of Markov blankets and ontological potentials, has recently been presented as the core component of a generalized modeling metho...

arxiv.org

Reposted by Maxwell Ramstead

Takuya Isomura

@takuyaisomura.bsky.social

· Aug 11

Predicting individual learning trajectories in zebrafish via the free-energy principle

The free-energy principle has been proposed as a unified theory of brain function, and recent evidence from in vitro experiments supports its validity. However, its empirical application to in vivo ne...

doi.org

Reposted by Maxwell Ramstead

Jen

@ladyjenpool.bsky.social

· Jun 22

Reposted by Maxwell Ramstead

Fernando Rosas

@frosas.bsky.social

· Apr 23

Shannon invariants: A scalable approach to information decomposition

Distributed systems, such as biological and artificial neural networks, process information via complex interactions engaging multiple subsystems, resulting in high-order patterns with distinct proper...

arxiv.org

Reposted by Maxwell Ramstead

Tiago Peixoto

@tiago.skewed.de

· Apr 5

Reposted by Maxwell Ramstead

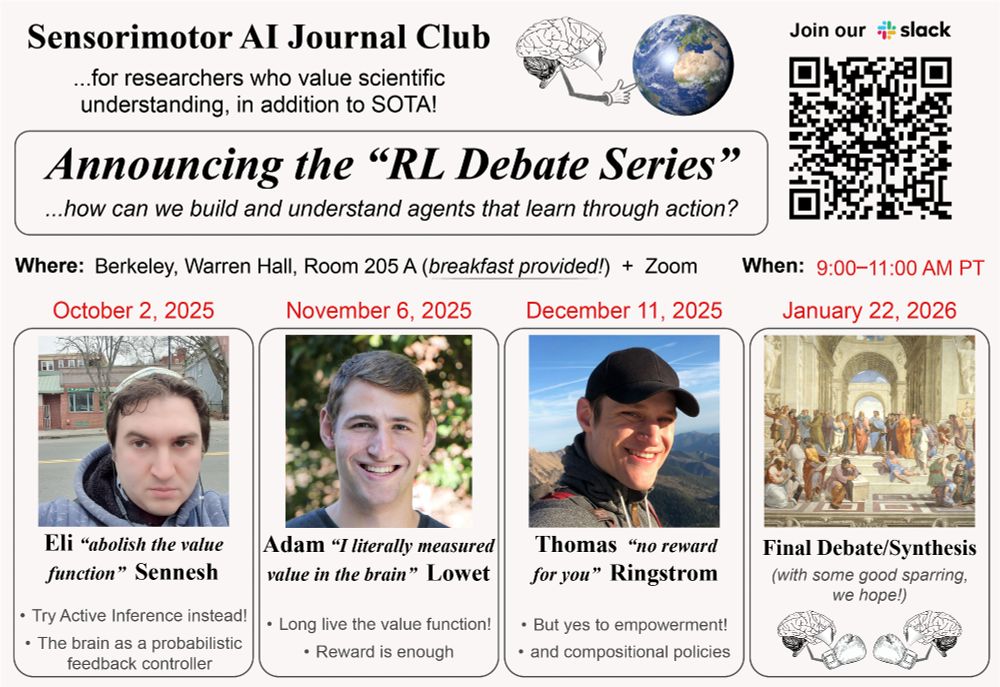

Ryan Smith

@rssmith.bsky.social

· Mar 27

A Systematic Empirical Comparison of Active Inference and Reinforcement Learning Models in Accounting for Decision-Making Under Uncertainty

Reinforcement Learning (RL) and Active Inference (AInf) are related computational frameworks for modeling learning and choice under uncertainty. However, differ...

papers.ssrn.com

Reposted by Maxwell Ramstead

John Scalzi

@scalzi.com

· Mar 20

NEW: LibGen contains millions of pirated books and research papers, built over nearly two decades. From court documents, we know that Meta torrented a version of it to build its AI. Today, @theatlantic.com presents an analysis of the data set by @alexreisner.bsky.social. Search through it yourself:

The Unbelievable Scale of AI’s Pirated-Books Problem

Meta pirated millions of books to train its AI. Search through them here.

www.theatlantic.com

Maxwell Ramstead

@mjdramstead.bsky.social

· Mar 18

Maxwell Ramstead

@mjdramstead.bsky.social

· Mar 17