Daniel Lowd

@lowd.bsky.social

4.1K followers

490 following

400 posts

CS Prof at the University of Oregon, studying adversarial machine learning, data poisoning, interpretable AI, probabilistic and relational models, and more. Avid unicyclist and occasional singer-songwriter. He/him

This is a song inspired by the first story I ever sold — “Forget Me Not” — which is about a man addicted to a memory drug. I love how jazzy and catchy it turned out.

youtu.be/w3ui-XMrFcQ?...

Forget Me Not

YouTube video by Mary E. Lowd - Topic

Reposted by Daniel Lowd

Daniel Lowd

@lowd.bsky.social

· Sep 25

Reposted by Daniel Lowd

Ryan Cordell

@ryancordell.org

· Sep 10

Daniel Lowd

@lowd.bsky.social

· Apr 23

Daniel Lowd

@lowd.bsky.social

· Apr 9

Daniel Lowd

@lowd.bsky.social

· Apr 9

Reposted by Daniel Lowd

Daniel Lowd

@lowd.bsky.social

· Mar 15

Reposted by Daniel Lowd

Kate Starbird

@katestarbird.bsky.social

· Feb 20

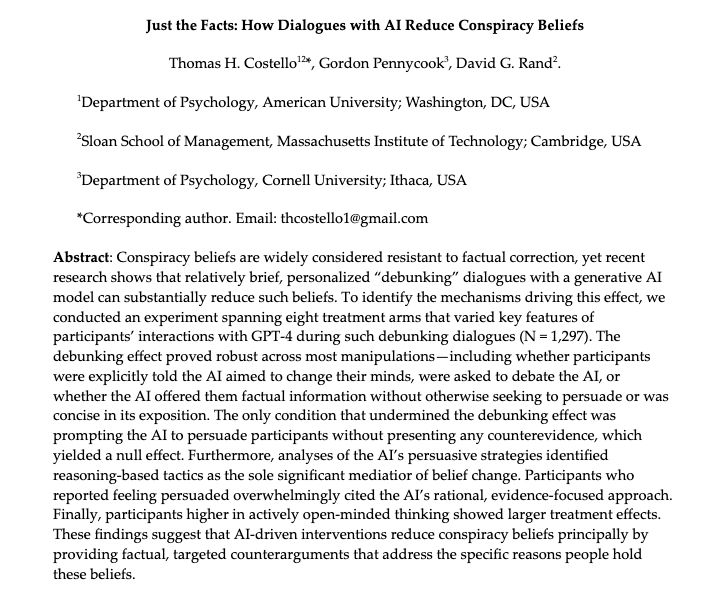

Last year, we published a paper showing that AI models can "debunk" conspiracy theories via personalized conversations. That paper raised a major question: WHY are the human<>AI convos so effective? In a new working paper, we have some answers.

TLDR: facts

osf.io/preprints/ps...

Reposted by Daniel Lowd

Loris D'Antoni

@lorisdanto.bsky.social

· Jan 23

Daniel Lowd

@lowd.bsky.social

· Jan 21

Reposted by Daniel Lowd

Simon Willison

@simonwillison.net

· Jan 19

Daniel Lowd

@lowd.bsky.social

· Jan 18

Daniel Lowd

@lowd.bsky.social

· Jan 17

Daniel Lowd

@lowd.bsky.social

· Jan 14

Daniel Lowd

@lowd.bsky.social

· Jan 14

Daniel Lowd

@lowd.bsky.social

· Jan 13

🧪 NYU researchers show AI models can be easily poisoned with medical misinformation, increasing risks of false outputs. Their study also suggests strategies to intercept and mitigate harmful content. 🩺💻 #MLSky

Vaccine misinformation can easily poison AI – but there's a fix

Adding just a little medical misinformation to an AI model’s training data increases the chances that chatbots will spew harmful false content about vaccines and other topics

www.newscientist.com