📅 Deadline: 12/22/25

🔬 Accessibility & Learning, plus Sustainability & Social Justice

🧑‍🏫 Associate/Full Prof*

🔗 umd.wd1.myworkdayjobs.com/en-US/UMCP/j...

*Assistant-level candidates: apply to departments, mentioning AIM in a cover letter

There are a few ways to accomplish this task. Which work best? Our new EMNLP paper has some answers🧵

arxiv.org/pdf/2507.00828

LLMs often produce inconsistent explanations (62–86%), hurting faithfulness and trust in explainable AI.

We introduce PEX consistency, a measure for explanation consistency,

and show that optimizing it via DPO improves faithfulness by up to 9.7%.

Here’s one answer from researchers across NLP/MT, Translation Studies, and HCI.

"An Interdisciplinary Approach to Human-Centered Machine Translation"

arxiv.org/abs/2506.13468

![QANTA Logo: Question Answering is not a Trivial Activity

[Humans and computers competing on a buzzer]](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:fl42ibvzjfunee4t4zqvxurf/bafkreigcvckbbculrr5g3emigdt67avtojtcf24x6tjdfk6uwbqny6pilu@jpeg)

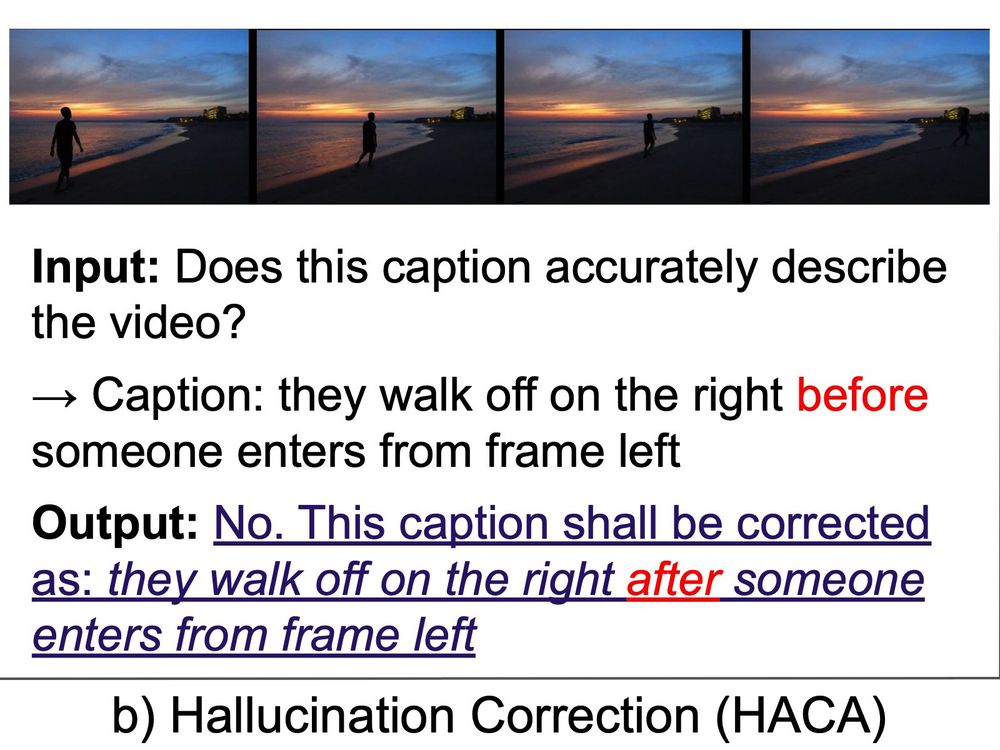

Excited to share our accepted paper at ACL: Can Hallucination Correction Improve Video-language Alignment?

Link: arxiv.org/abs/2502.15079

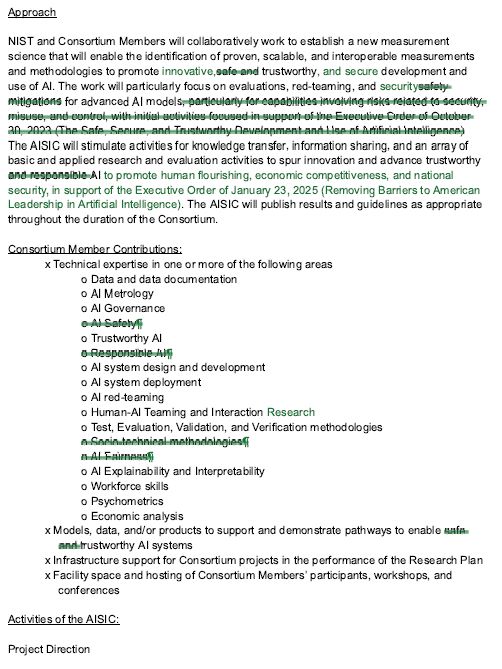

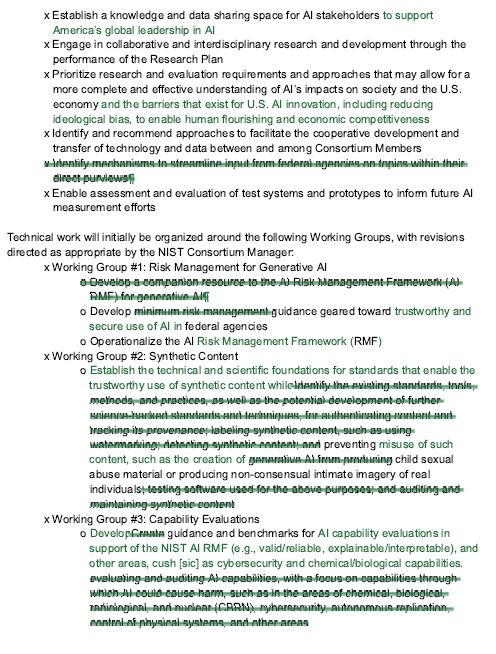

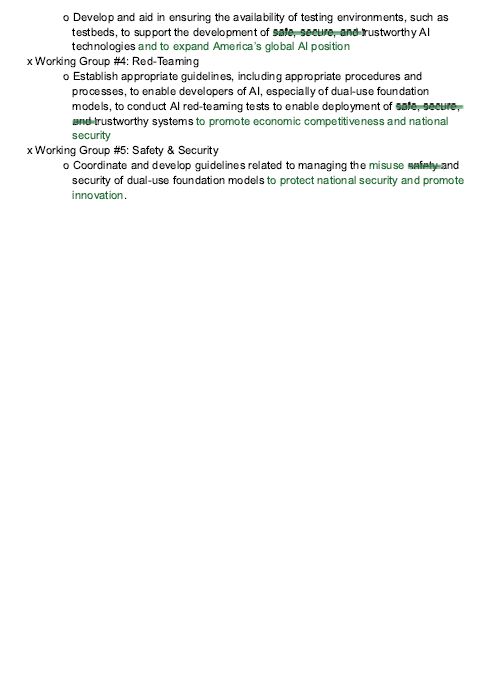

Out: safety, responsibility, sociotechnical, fairness, working w fed agencies, authenticating content, watermarking, RN of CBRN, autonomous replication, ctrl of physical systems

>

Just read this great work by Goyal et al. arxiv.org/abs/2411.11437

I'm optimizing for high coverage and low redundancy—assigning reviewers based on relevant topics or affinity scores alone feels off. Seniority and diversity matter!
