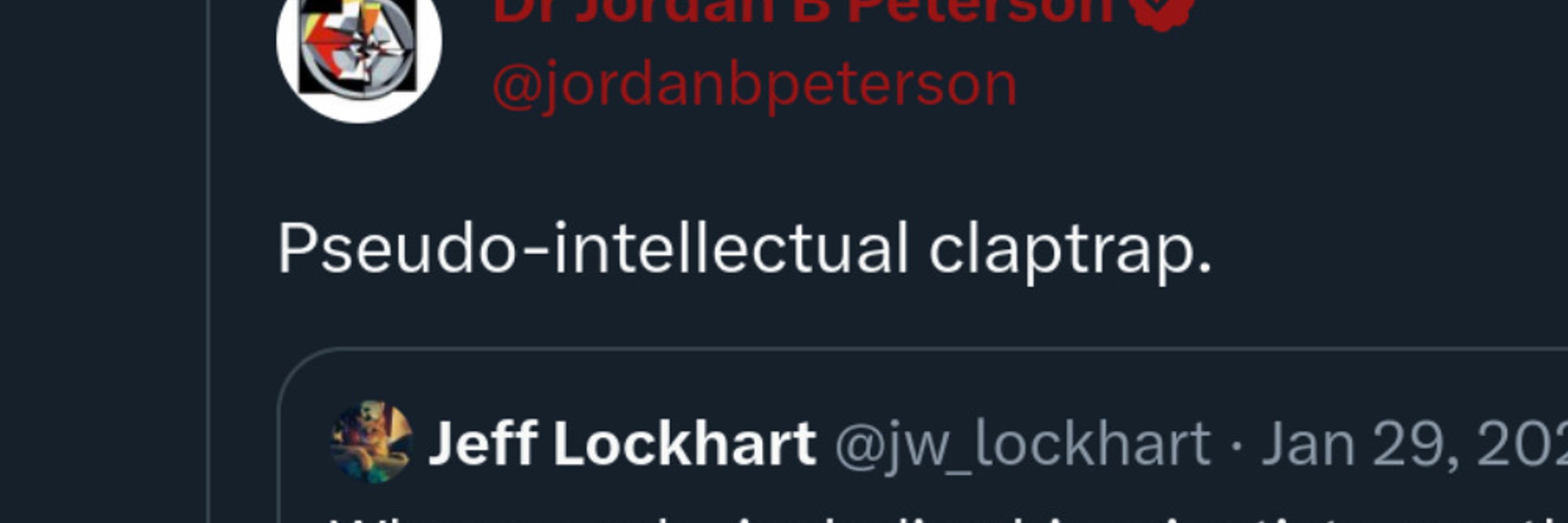

Jeff Lockhart

@jwlockhart.bsky.social

2.3K followers

630 following

530 posts

Cat person. Sometimes sociologist of science, sex, & other stuff.
