🗓️ Fri, June 13, 10:30 AM–12:30 PM

📍 ExHall D, Poster #34

🔗 justachetan.github.io/hnf-derivati...

👉 cvpr.thecvf.com/virtual/2025...

Details ⬇️ (1/n)

Please reach out if you would like to chat about neural fields, dynamic scenes, video understanding, or just generally about gaming, musicals, or ☕️

I will also be presenting our poster ⬇️ (Come visit!)

Caveats:

-*-*-*-*

> These are my opinions, based on my experiences; they are not secret tricks or guarantees

> They are general guidelines, not meant to cover every idiosyncrasy and special case

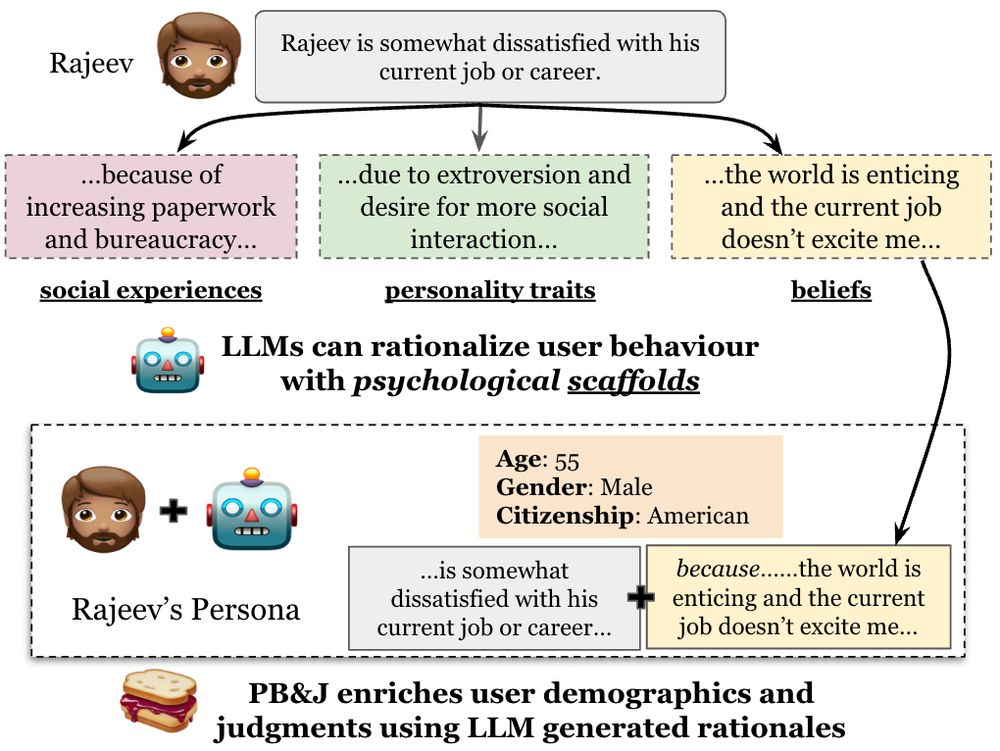

📝Excited to share our new work: Improving LLM Personas via Rationalization with Psychological Scaffolds

🔗 arxiv.org/abs/2504.17993

🧵 (1/n)

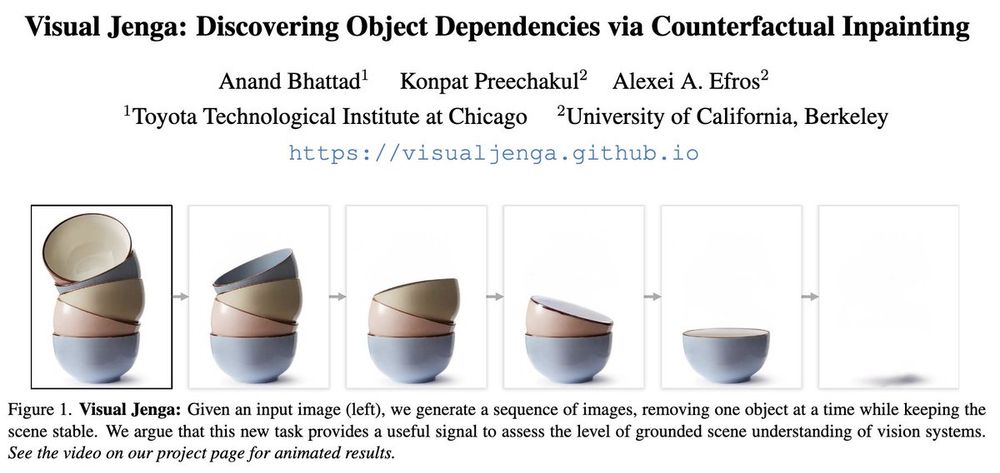

Models today can label pixels and detect objects with high accuracy. But does that mean they truly understand scenes?

Super excited to share our new paper and a new task in computer vision: Visual Jenga!

📄 arxiv.org/abs/2503.21770

🔗 visualjenga.github.io

Accurate, fast, & robust structure + camera estimation from casual monocular videos of dynamic scenes!

MegaSaM outputs camera parameters and consistent video depth, scaling to long videos with unconstrained camera paths and complex scene dynamics!