AI chatbots can shift voter attitudes on candidates & policies, often by 10+pp

🔹Exps in US, Canada, Poland & UK

🔹More “facts”→more persuasion (not psych tricks)

🔹Increasing persuasiveness reduces "fact" accuracy

🔹Right-leaning bots=more inaccurate

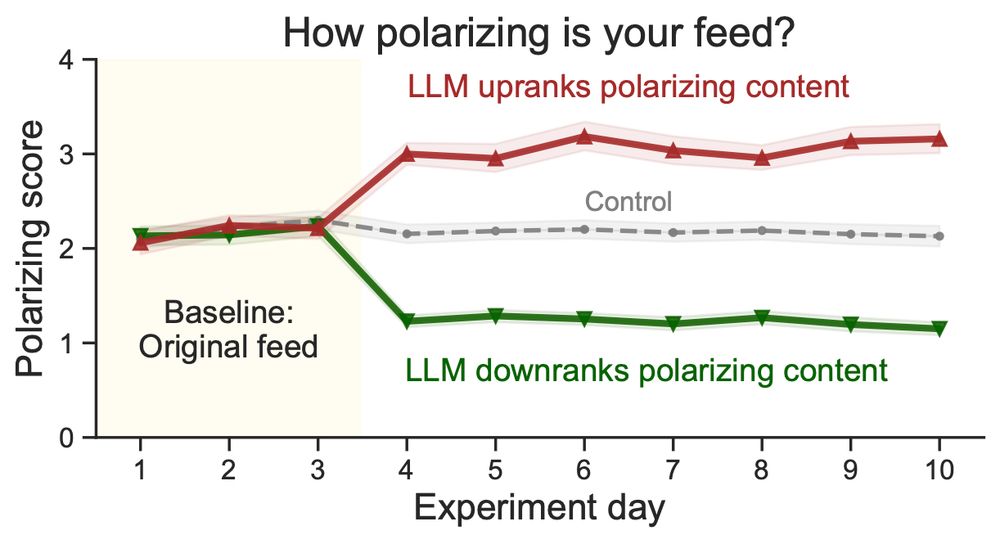

We add an LLM-powered reranking of highly polarizing political content into N=1256 participants' feeds. Downranking cools tensions with the opposite party—but upranking inflames them.

In a platform-independent field experiment, we show that reranking content expressing antidemocratic attitudes and partisan animosity in social media feeds alters affective polarization.

🧵

Link: doi.org/10.1126/scie...

And a very thoughtful perspective by @jennyallen.bsky.social and @jatucker.bsky.social: doi.org/10.1126/scie...

/1

www.science.org/doi/10.1126/...

We ran a field experiment on X/Twitter (N=1,256) using LLMs to rerank content in real-time, adjusting exposure to polarizing posts. Result: Algorithmic ranking impacts feelings toward the political outgroup! 🧵⬇️