Interested in Computer Vision, Geometry, and learning both at the same time

https://www.jgaubil.com/

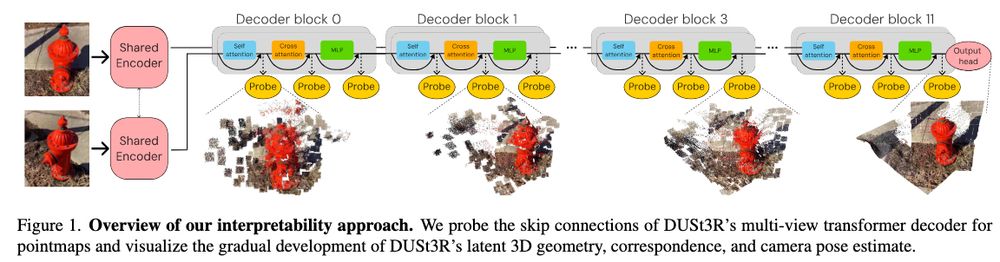

We share findings on the iterative nature of reconstruction, the roles of cross- and self-attention, and the emergence of correspondences across the network [1/8] ⬇️

Michal Stary, Julien Gaubil, Ayush Tewari, Vincent Sitzmann

arxiv.org/abs/2510.24907

Trending on www.scholar-inbox.com

We use Dust3r as a black box. This work looks under the hood at what is going on. The internal representations seem to "iteratively" refine towards the final answer. Quite similar to what goes on in point cloud net

Check out our new work: MILo: Mesh-In-the-Loop Gaussian Splatting!

🎉Accepted to SIGGRAPH Asia 2025 (TOG)

MILo is a novel differentiable framework that extracts meshes directly from Gaussian parameters during training.

🧵👇

Sign up now to be randomly matched with peers for a SIGGRAPH conference coffee!

Are you a researcher of an underrepresented gender registered for SIGGRAPH? Do you want an opportunity to network with your peers? Learn more and sign up here:

www.wigraph.org/events/2025-...

🍵MAtCha reconstructs sharp, accurate, and scalable meshes of both foreground AND background from just a few unposed images (e.g., 3 to 10 images)...

...While also working with dense-view datasets (hundreds of images)!
