Inception Labs

@inceptionlabs.bsky.social

150 followers

1 following

7 posts

Pioneering a new generation of LLMs.

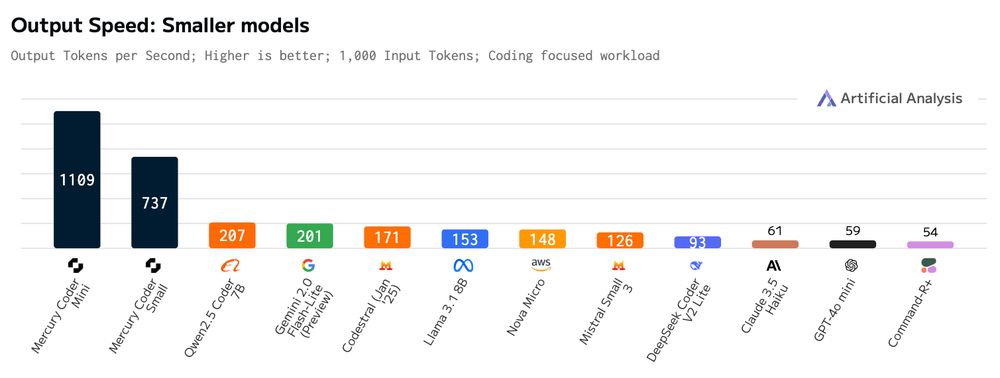

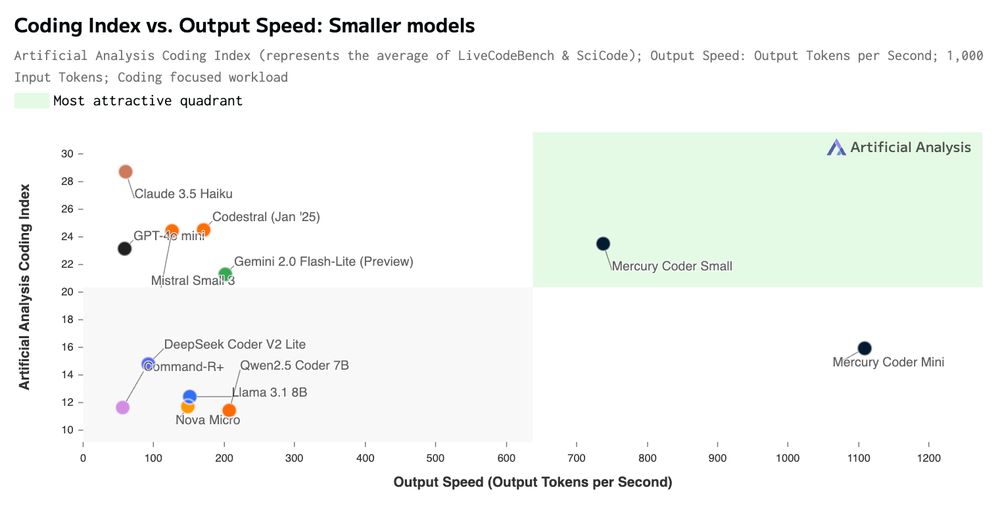

· Feb 26

· Feb 25