Interests: Bioinformatics, Genomics, LLMs, Python, Rust, NLP.

https://imaurer.com/

https://github.com/imaurer/

Then you can grind and keep eking out gains.

This has add’l benefits:

- improvement on that task

- greater vision of what else you can do

- higher expectations on what good results are

- better first drafts the next time.

For instance, I do something like this:

- analyze

- draft

- evaluate

- final

And then provide exemplars that contain errors, to show self-correction.

But I haven’t done extensive testing. Just seems like a good idea.
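A minimal sketch of that stage layout, assuming each stage goes in its own tagged section of the prompt. The tag format, the `build_prompt` helper, and the gene-symbol exemplar are all hypothetical and only illustrate the idea; none of it has been tested against a model.

```python
# Hypothetical sketch: the four stages come from the post above; the tag
# format, function name, and example task are assumptions for illustration.
STAGES = ("analyze", "draft", "evaluate", "final")


def build_prompt(task: str, exemplars: list[dict[str, str]]) -> str:
    """Assemble a prompt that asks for each stage in its own tagged section."""
    lines = ["Work through the task in four stages, each in its own tagged section:"]
    lines += [f"<{stage}>...</{stage}>" for stage in STAGES]
    for i, ex in enumerate(exemplars, start=1):
        lines.append("")
        lines.append(f"Example {i}:")
        lines.append(f"Task: {ex['task']}")
        for stage in STAGES:
            lines.append(f"<{stage}>{ex[stage]}</{stage}>")
    lines.append("")
    lines.append(f"Task: {task}")
    return "\n".join(lines)


# The draft is wrong on purpose so the evaluate stage can demonstrate
# catching the mistake before the final answer.
exemplar = {
    "task": "Extract the gene symbol from: 'Mutations in BRCA1 raise cancer risk.'",
    "analyze": "The sentence names one gene and one disease.",
    "draft": "cancer",
    "evaluate": "'cancer' is a disease, not a gene symbol; the gene is BRCA1.",
    "final": "BRCA1",
}

prompt = build_prompt(
    "Extract the gene symbol from: 'TP53 is often mutated in tumors.'",
    [exemplar],
)
print(prompt)
```

Putting the error in the draft and the fix in the evaluate step is what shows the self-correction; an exemplar with a clean draft would only show the format.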

ArXiv Paper:

arxiv.org/pdf/2411.03538

Repo:

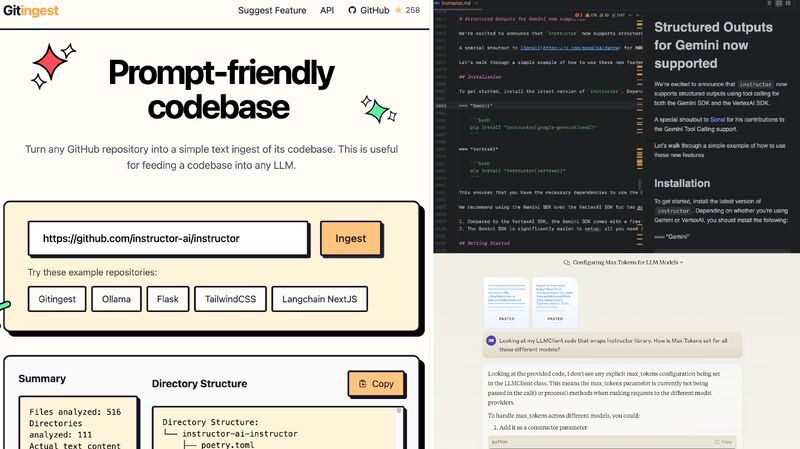

github.com/imaurer/awes...

Builds upon GLiNER, which does zero-shot Named Entity Recognition (NER).

Repo:

github.com/jackboyla/GL...

Model:

huggingface.co/jackboyla/gl...

h/t @pacoid.bsky.social
