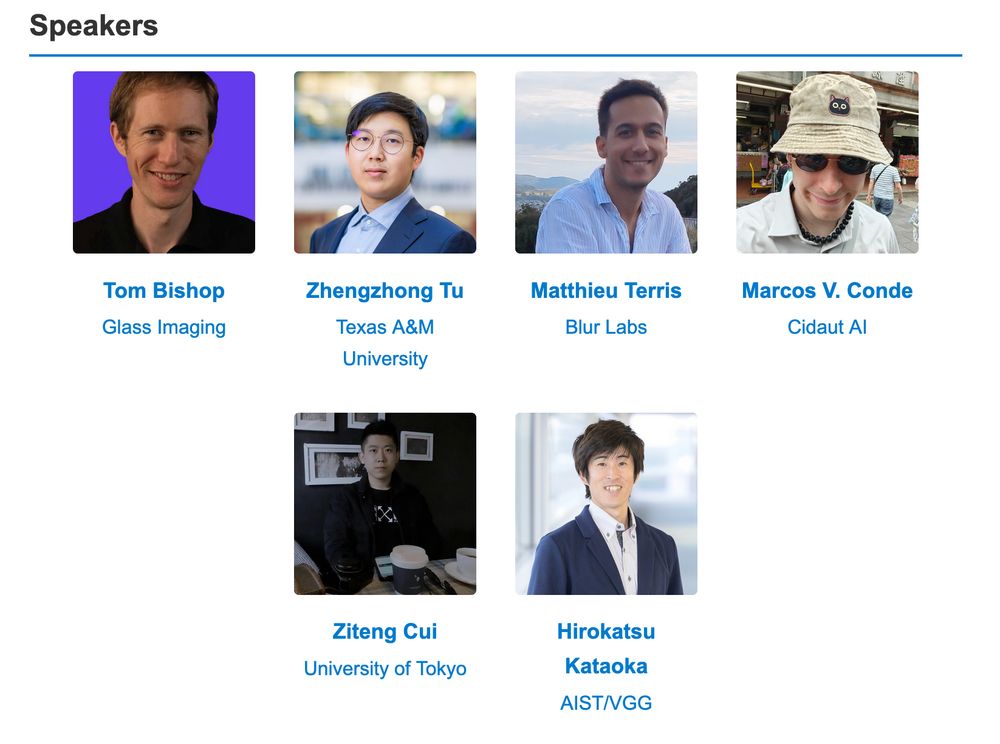

Hirokatsu Kataoka | 片岡裕雄

@hirokatukataoka.bsky.social

130 followers | 150 following | 50 posts

Chief Scientist @ AIST | Academic Visitor @ Oxford VGG | PI @ cvpaper.challenge | 3D ResNet (Top 0.5% in 5-yr CVPR) | FDSL (ACCV20 Award/BMVC23 Award Finalist)

Reposted by Hirokatsu Kataoka | 片岡裕雄