@leonardoai_. (now at @canva) trying to feel the magic.

www.ethansmith2000.com

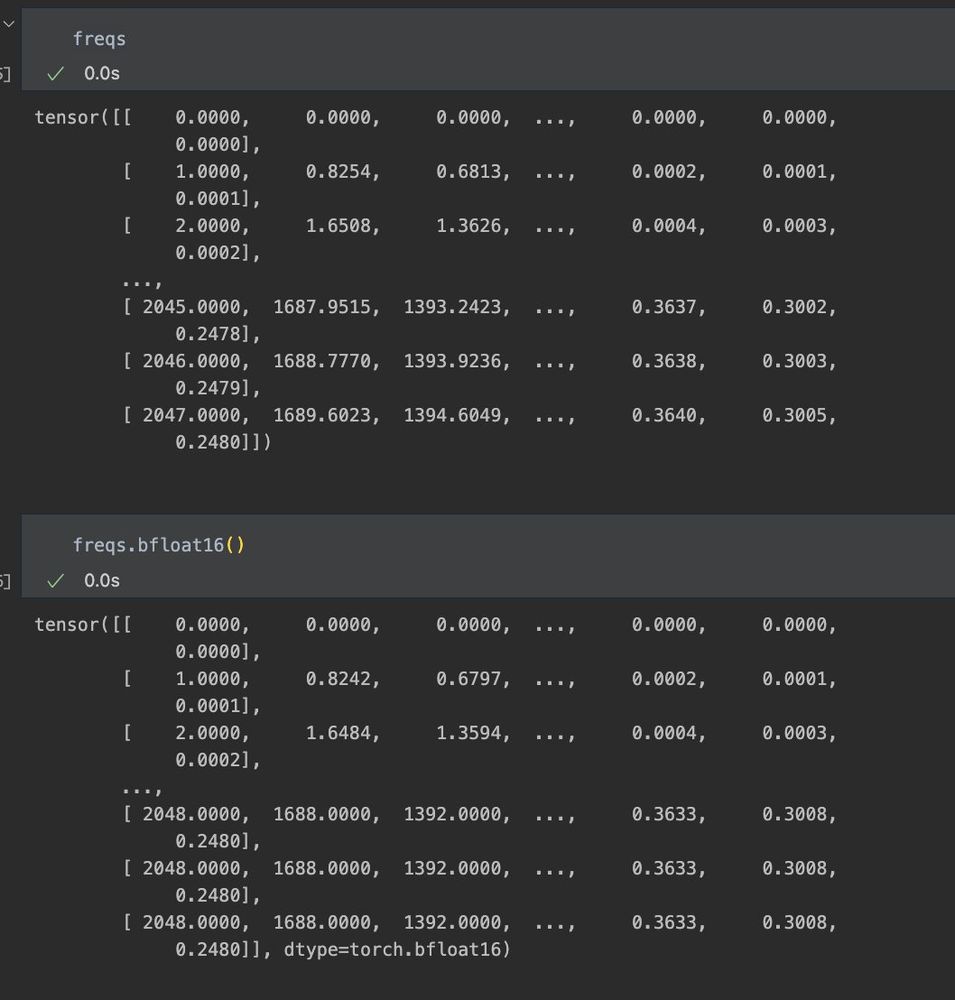

For a reasonable N of 2048, these are the computed frequencies before applying cos(x) and sin(x): fp32 above, bf16 below.

Given how short the period of simple trig functions is, this difference is catastrophic for large values.
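A minimal sketch of the effect. It assumes standard RoPE angles θ = p · base^(−2i/d), with base 10000 and head dim 64 (both assumptions), and takes N as the number of positions. It emulates bf16 in pure Python by rounding float32 bits to their top 16 (round-to-nearest-even). bf16 keeps only 8 significant bits, so between 1024 and 2048 representable values are 8 apart. For the fastest band (inverse frequency 1) the angle is just the position, so rounding can shift it by several radians, well beyond the π/2 quarter period of sin and cos.

```python
import math
import struct

def to_bf16(x: float) -> float:
    """Round x to the nearest bfloat16 value (round-to-nearest-even).

    bfloat16 is the top 16 bits of an IEEE float32: 1 sign bit, 8 exponent
    bits and 7 explicit mantissa bits. Intended for finite inputs only.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Add just under half an ulp, plus 1 when the kept LSB is odd, so that
    # truncating the low 16 bits rounds to nearest with ties to even.
    bits += 0x7FFF + ((bits >> 16) & 1)
    bits &= 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def rope_angles(n_positions: int, dim: int = 64, base: float = 10000.0):
    """Assumed standard RoPE angles: theta[p][i] = p * base ** (-2 * i / dim)."""
    inv_freq = [base ** (-2 * i / dim) for i in range(dim // 2)]
    return [[p * f for f in inv_freq] for p in range(n_positions)]

N = 2048
angles = rope_angles(N)

# Band i = 0 has inverse frequency 1, so its angle is the position itself.
fastest = [row[0] for row in angles]
errors = [abs(a - to_bf16(a)) for a in fastest]
print("max bf16 angle error (rad):", max(errors))

# The last position: 2047 is not representable in bf16 and rounds to 2048.
p = N - 1
print("cos in fp32:", math.cos(fastest[p]))
print("cos in bf16:", math.cos(to_bf16(fastest[p])))
```

Slower bands have smaller angles and so smaller absolute errors. The damage concentrates where the angle grows large, which is exactly where long contexts live.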

Large companies are training models, gating them, and making you pay per use, with no transparency on biases, model fairness, or societal/environmental impact.

dev-discuss.pytorch.org/t/fsdp-cudac...

TL;DR: by default, memory belongs to the stream it was allocated on. To share it with another stream:

- `Tensor.record_stream` -> automatic, but can be suboptimal and nondeterministic

- `Stream.wait_stream` / `Stream.wait_event` -> manual, but gives precise control
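A minimal PyTorch sketch of both options, assuming a CUDA device is available. The producer/consumer split and the function names are hypothetical. `Tensor.record_stream`, `Stream.wait_stream`, `torch.cuda.stream` and `torch.cuda.current_stream` are standard PyTorch APIs. In both cases a tensor is allocated on a side stream and then read on the main stream.

```python
import torch

def share_with_record_stream(n: int = 1 << 20) -> torch.Tensor:
    main = torch.cuda.current_stream()
    side = torch.cuda.Stream()
    side.wait_stream(main)                      # side starts after earlier main-stream work
    with torch.cuda.stream(side):
        y = torch.ones(n, device="cuda") * 2    # y's memory is owned by `side`
    main.wait_stream(side)                      # don't read y before side has written it
    out = y + 1                                 # y is consumed on the main stream
    # Automatic: tell the caching allocator that `main` also uses y. When y is
    # freed, the allocator records an event and only reuses the block once that
    # event has completed, so when reuse happens depends on when it checks.
    y.record_stream(main)
    del y
    return out

def share_with_wait_stream(n: int = 1 << 20) -> torch.Tensor:
    main = torch.cuda.current_stream()
    side = torch.cuda.Stream()
    side.wait_stream(main)
    with torch.cuda.stream(side):
        y = torch.ones(n, device="cuda") * 2
    main.wait_stream(side)
    out = y + 1
    # Manual: make the owning stream wait for the consumer before y's block
    # goes back to side's pool. Any later kernel that reuses the block on
    # `side` is then ordered after main has finished reading y.
    side.wait_stream(main)
    del y
    return out

if torch.cuda.is_available():
    a = share_with_record_stream(16)
    b = share_with_wait_stream(16)
    torch.cuda.synchronize()
    print(a[:4], b[:4])
```

The manual version never depends on when the allocator polls events, which is where the determinism comes from. The cost is that you must place the waits yourself.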

Happy to have helped provide the compute for this and hoping to support more awesome research like this!

Previous record: 5.03 minutes

Changelog:

- FlexAttention blocksize warmup

- hyperparameter tweaks
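The changelog does not spell out the schedule. Assuming "blocksize warmup" means that the attention window, counted in FlexAttention blocks, grows over training, a minimal sketch with a hypothetical linear ramp follows. `window_blocks`, `sliding_window_causal`, the 128-token block size, and the 1 → 14 block range are all illustrative assumptions, not the record's actual settings. In FlexAttention, the mask predicate would be written as a `mask_mod(b, h, q_idx, kv_idx)` over tensors rather than Python ints.

```python
def window_blocks(step: int, total_steps: int, min_blocks: int = 1, max_blocks: int = 14) -> int:
    """Hypothetical linear warmup: how many attention blocks a query may see."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return min_blocks + int(frac * (max_blocks - min_blocks))

def sliding_window_causal(q_idx: int, kv_idx: int, window_tokens: int) -> bool:
    """Causal mask restricted to the most recent `window_tokens` keys."""
    return kv_idx <= q_idx and q_idx - kv_idx < window_tokens

BLOCK_SIZE = 128  # assumed FlexAttention block size, in tokens
total = 1000
for step in (0, 250, 500, 750, 1000):
    blocks = window_blocks(step, total)
    print(step, blocks, blocks * BLOCK_SIZE)
```

Short windows early on make each step cheaper, and the model sees the full window by the end of training.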

@ethansmith2000.com for generously providing H100s to support this research to enable this release. Y'all rock, thanks so much! <3

It was a little annoying, as both SOAP and PSGD maintain preconditioners as lists of varying length, which fail to be pickled. To fix this, I hardcoded a maximum of 4 (based on conv layers being 4D tensors).

github.com/ethansmith20...
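A minimal sketch of the fixed-slot idea. The post does not say which serializer rejected the lists, so this shows only the general layout. Instead of a list whose length depends on the parameter's ndim, the state always has four named slots, and unused slots hold an empty placeholder. `init_fixed_preconds` and `active_preconds` are hypothetical names. NumPy arrays stand in for the preconditioner matrices, zero-initialized purely for illustration; SOAP and PSGD initialize their factors differently.

```python
import pickle
import numpy as np

MAX_DIMS = 4  # conv weights are at most 4-D: (out_ch, in_ch, kh, kw)

def init_fixed_preconds(shape):
    """Hypothetical layout: one (d x d) matrix per tensor dim, padded to MAX_DIMS slots."""
    if len(shape) > MAX_DIMS:
        raise ValueError(f"expected at most {MAX_DIMS} dims, got {len(shape)}")
    state = {"ndim": len(shape)}
    for i in range(MAX_DIMS):
        d = shape[i] if i < len(shape) else 0
        # Unused slots become an empty (0, 0) placeholder, so every state has the same keys.
        state[f"precond_{i}"] = np.zeros((d, d), dtype=np.float32)
    return state

def active_preconds(state):
    """Recover the variable-length list the optimizer actually iterates over."""
    return [state[f"precond_{i}"] for i in range(state["ndim"])]

conv_state = init_fixed_preconds((64, 32, 3, 3))
bias_state = init_fixed_preconds((64,))
restored = pickle.loads(pickle.dumps(bias_state))
print(sorted(restored), [p.shape for p in active_preconds(restored)])
```

Every parameter's state now has an identical structure regardless of its shape, at the cost of a few empty placeholder arrays.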

If ML experimentation software had been as widely accessible back then as it is now, I bet generative diffusion models would have been discovered a while ago.

GTC is about guiding spaceships from your walls to their portals by drawing paths and destroying obstacles.

meta.com/experiences/...

Let's talk about the features... 🧵

#gamedev #vr #xr #MetaQuest