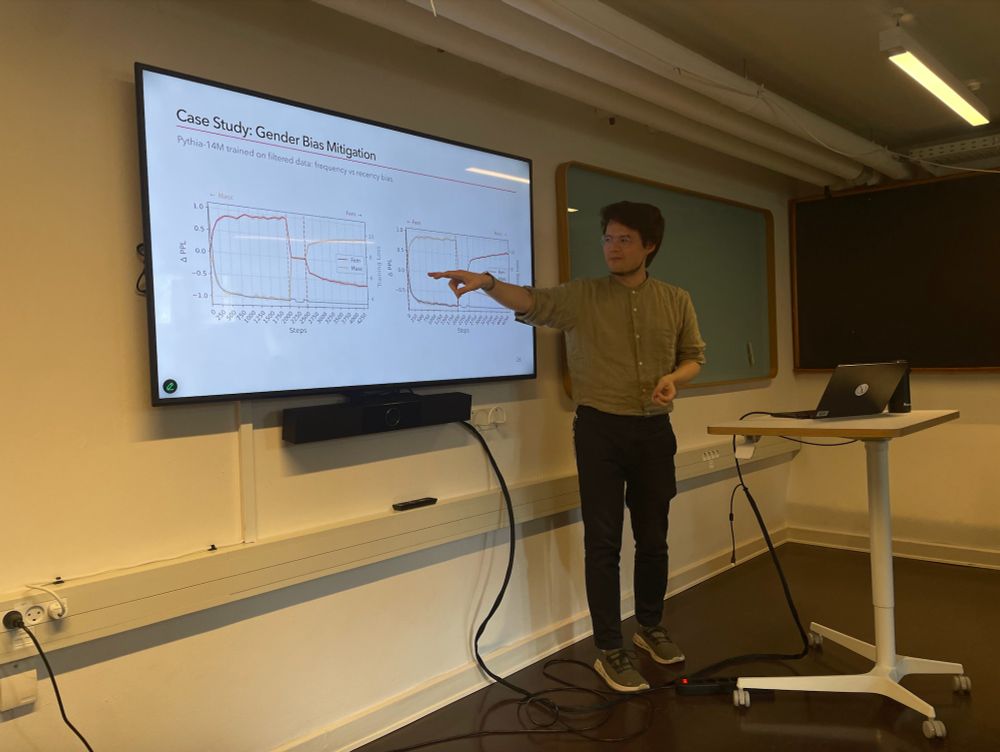

I'm excited to share our preprint answering these questions:

"Epistemic Diversity and Knowledge Collapse in Large Language Models"

📄Paper: arxiv.org/pdf/2510.04226

💻Code: github.com/dwright37/ll...

1/10

💻 The code is now a Python package! Installation instructions here: github.com/dwright37/ll...

🤗 All 1.6M model responses and 70M clustered claims are now available on HuggingFace! huggingface.co/datasets/dwr...

📄 Paper: arxiv.org/pdf/2510.04226

www.copenlu.com/news/8-paper...

@apepa.bsky.social @rnv.bsky.social @kirekara.bsky.social @shoejoe.bsky.social @dustinbwright.com @zainmujahid.me @lucasresck.bsky.social @iaugenstein.bsky.social

#NLProc #AI #EMNLP2025

⏰ When: Fri. Nov 7 14:00-15:30

🗺️ Where: Hall C

I'm unable to attend but @iaugenstein.bsky.social will present our work!

"Unstructured Evidence Attribution for Long Context Query Focused Summarization"

w/ @zainmujahid.me, Lu Wang, @iaugenstein.bsky.social, and @davidjurgens.bsky.social

You can read “Efficiency is Not Enough: A Critical Perspective on Environmentally Sustainable AI” now in CACM!!!

dl.acm.org/doi/10.1145/...

@dustinbwright.com @ic2s2.bsky.social #ic2s2

See the preprint of this work here: arxiv.org/abs/2409.08330

Check out the paper ojs.aaai.org/index.php/IC... and models huggingface.co/ariannap22

Feat. poster and research buddy @alessianetwork.bsky.social ♥️

If you want to apply to work with me and Johannes Bjerva at @aau.dk Copenhagen, I'll be at @ic2s2.bsky.social this week and @aclmeeting.bsky.social next week! DM me if you'd like to meet :)

cacm.acm.org/sustainabili...

🇩🇰 With international NLP experts from Columbia, UCLA, the University of Michigan, and more coming to Copenhagen to meet the Danish NLP community. 🇩🇰

📅 Poster submission deadline: June 16, 2025

🔗 Register: www.aicentre.dk/events/pre-a...

We show: fact checking w/ crowd workers is more efficient when using LLM summaries, and quality doesn't suffer.

arxiv.org/abs/2501.18265

@aicentre.dk

climateainordics.com/newsletter/2...

huggingface.co/datasets/dwr...

Use it as a training or a test set for long context query focused summarization! It includes evidence attribution of free-form text spans from the context, making summaries more transparent and reliable!

We propose *unstructured* evidence attribution for long context summarization and a synthetic dataset called SUnsET which can help models perform this task.

Paper link: arxiv.org/abs/2502.14409

Thread ⬇️

Come to poster 4003 in East Hall to learn about Bayesian model reduction for structured pruning!

I’m also on the faculty job market!

Come talk to me about neural network efficiency, reliable NLP, or open positions, and come to my spotlight poster on Friday from 11am to 2pm in East Exhibit Hall A–C, poster number 4003

I’m on the job market so message me if you’d like to meet up during the conference to chat about jobs, research, or to just hang out 😊

Next stop is #NeurIPS2024 where I'll present our ✨spotlight✨ paper on thresholdless Bayesian structured pruning!

Paper: arxiv.org/pdf/2406.01345
