Previously AI Research Engineer at Bending Spoons

While Phi-4 excels on benchmarks like math competitions, BALROG reveals that Phi-4 falls short as an agent. More research on how to improve agentic performance is needed.

This week's new entries on balrogai.com are:

Llama 3.3 70B Instruct 🫤

Claude 3.5 Haiku✨

Mistral-Nemo-it (12B) 🆗

GitHub: github.com/balrog-ai/BA...

storage.googleapis.com/deepmind-med...

(also soon to be up on arXiv, once it's been processed there)

Check out what he had to say about it here:

jack-clark.net

And check out BALROG's leaderboard on balrogai.com

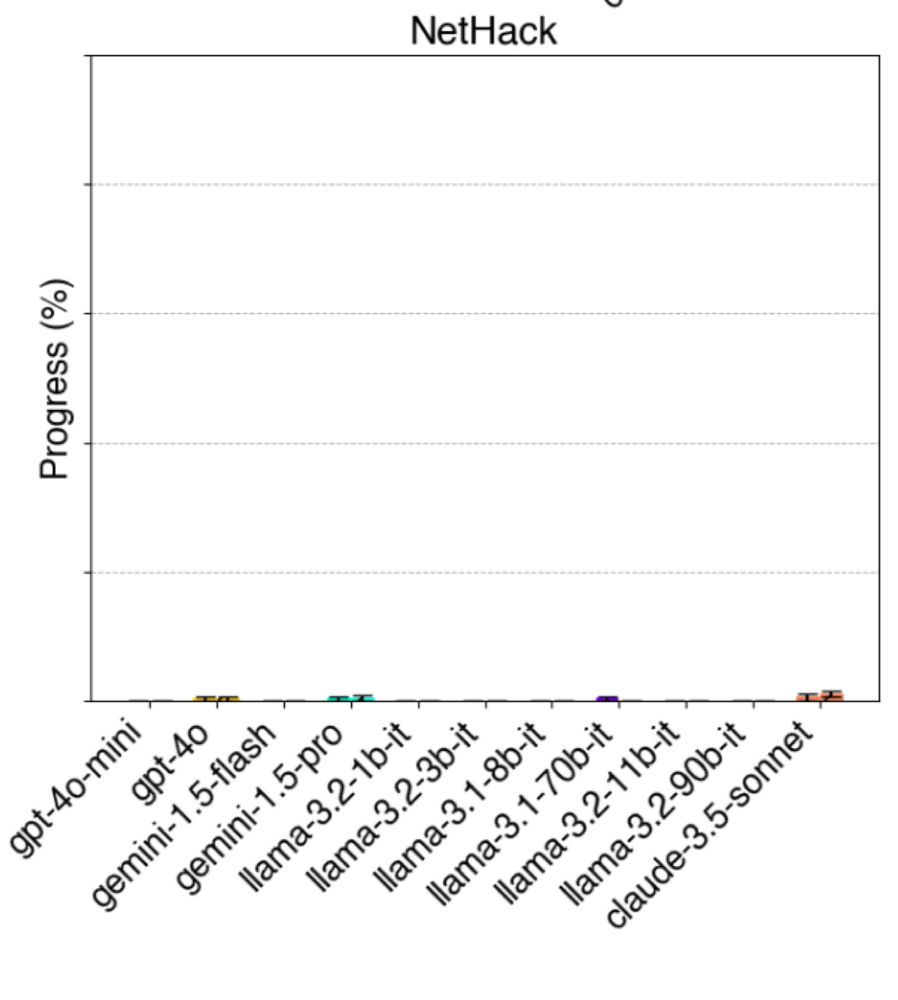

The hardest of all is NetHack. No AI is close, and I suspect that an AI that can fairly win/ascend would need to be AGI-ish. Paper: balrogai.com

... unless it's really good at reasoning and games!

Check out this new amazing benchmark BALROG 👾 from @dpaglieri.bsky.social and team 👇

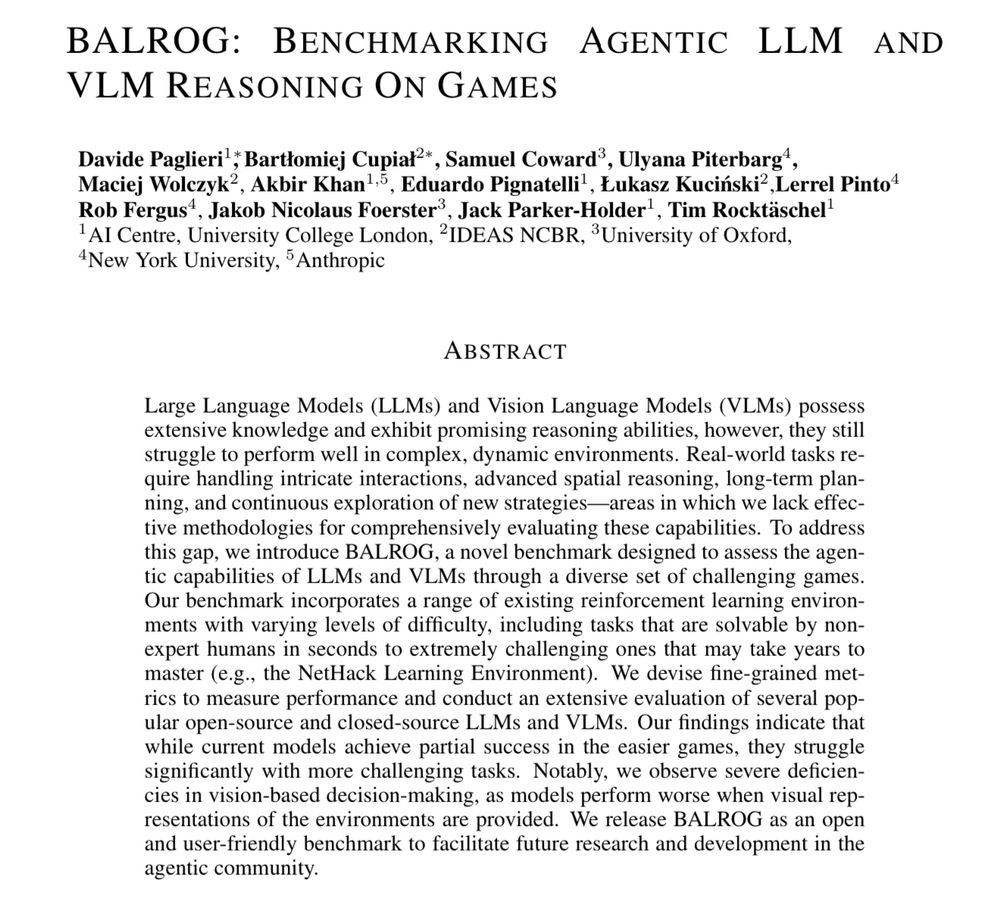

🔥 Introducing BALROG: a Benchmark for Agentic LLM and VLM Reasoning On Games!

BALROG is a challenging benchmark for LLM agentic capabilities, designed to stay relevant for years to come.

1/🧵
