David Heineman

@davidheineman.com

Pre-doc @ai2.bsky.social

davidheineman.com

davidheineman.com

Evaluating language models is tricky, how do we know if our results are real, or due to random chance?

We find an answer with two simple metrics: signal, a benchmark’s ability to separate models, and noise, a benchmark’s random variability between training steps 🧵

We find an answer with two simple metrics: signal, a benchmark’s ability to separate models, and noise, a benchmark’s random variability between training steps 🧵

August 19, 2025 at 4:46 PM

Evaluating language models is tricky, how do we know if our results are real, or due to random chance?

We find an answer with two simple metrics: signal, a benchmark’s ability to separate models, and noise, a benchmark’s random variability between training steps 🧵

We find an answer with two simple metrics: signal, a benchmark’s ability to separate models, and noise, a benchmark’s random variability between training steps 🧵

Reposted by David Heineman

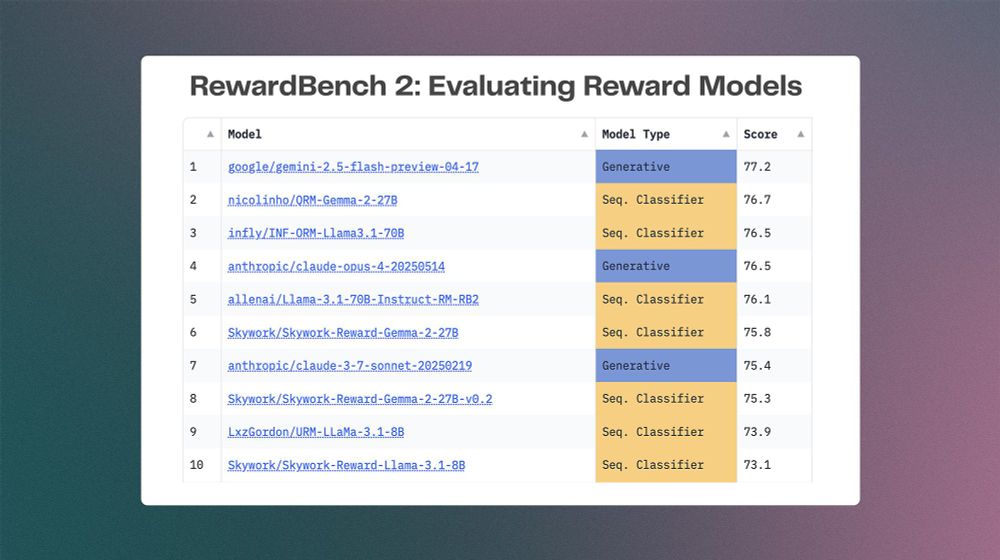

RewardBench 2 is here! We took a long time to learn from our first reward model evaluation tool to make one that is substantially harder and more correlated with both downstream RLHF and inference-time scaling.

June 2, 2025 at 4:31 PM

RewardBench 2 is here! We took a long time to learn from our first reward model evaluation tool to make one that is substantially harder and more correlated with both downstream RLHF and inference-time scaling.