ICML? Check out our NeurIPS Spotlight paper BetterBench! We outline best practices for benchmark design, implementation & reporting to help shift community norms. Be part of the change! 🙌

+ Add your benchmark to our database for visibility: betterbench.stanford.edu

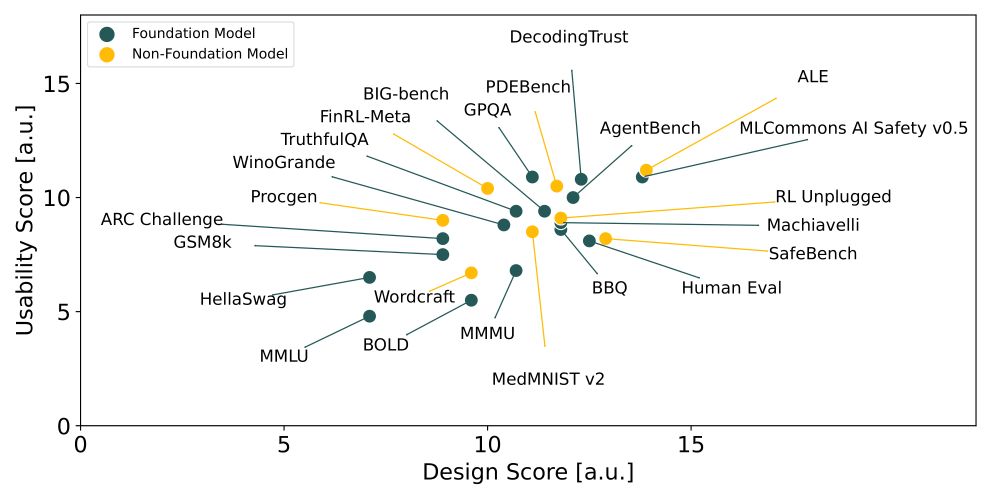

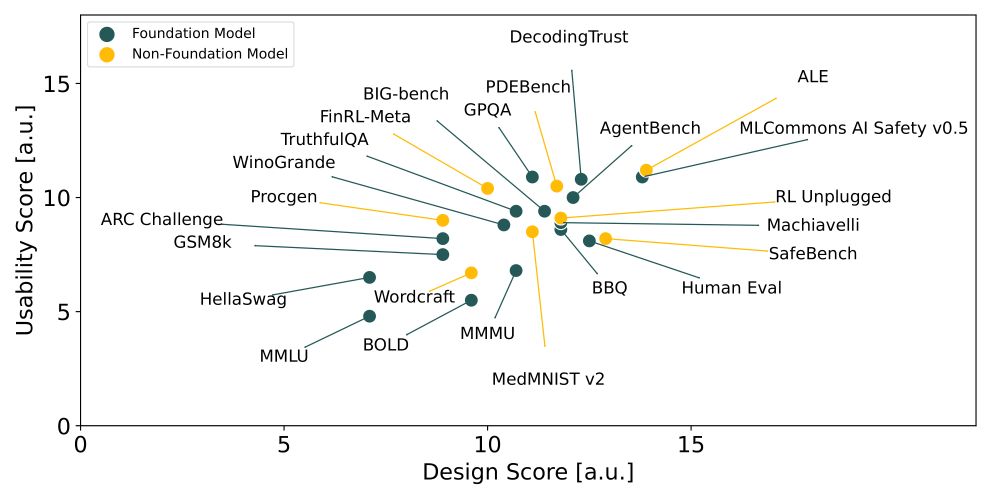

Did you know we lack standards for AI benchmarks, despite their role in tracking progress, comparing models, and shaping policy? 🤯 Enter BetterBench, our framework with 46 criteria to assess benchmark quality: betterbench.stanford.edu 1/x

www.cooperativeai.com/summer-schoo...

arxiv.org/abs/2405.06161

Would love to connect with folks and chat anything multi-agent, agentic AI, benchmarking, etc.

I am applying for fall ’25 PhDs. Ping me if you have advice or there may be a fit!

MALT is a multi-agent configuration that uses synthetic data generation and credit assignment strategies to post-train specialized models that solve problems together.

@techreviewjp.bsky.social

Article: bit.ly/3Zo1rgw

Paper: bit.ly/4eMSZfw

Website & Scores: betterbench.stanford.edu

Please share widely & join us in setting new standards for better AI benchmarking! ❤️
