arxiv.org/abs/1708.03735

drsciml.github.io/drsciml/

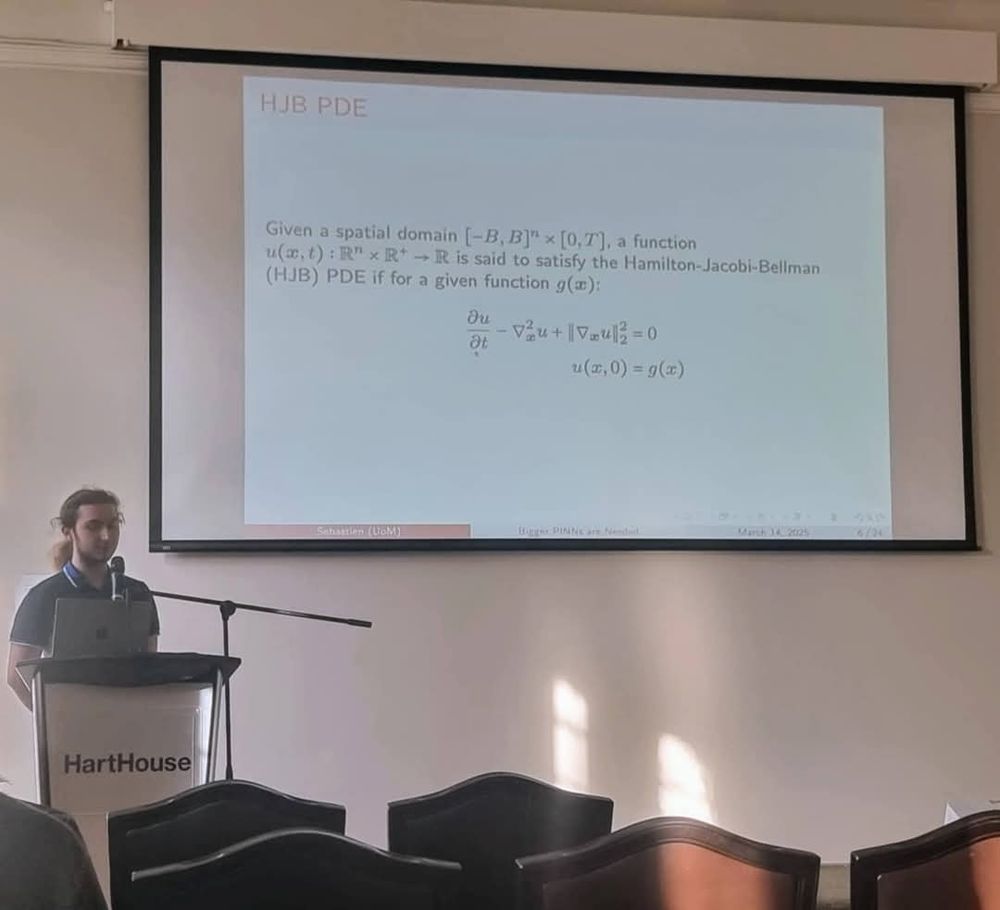

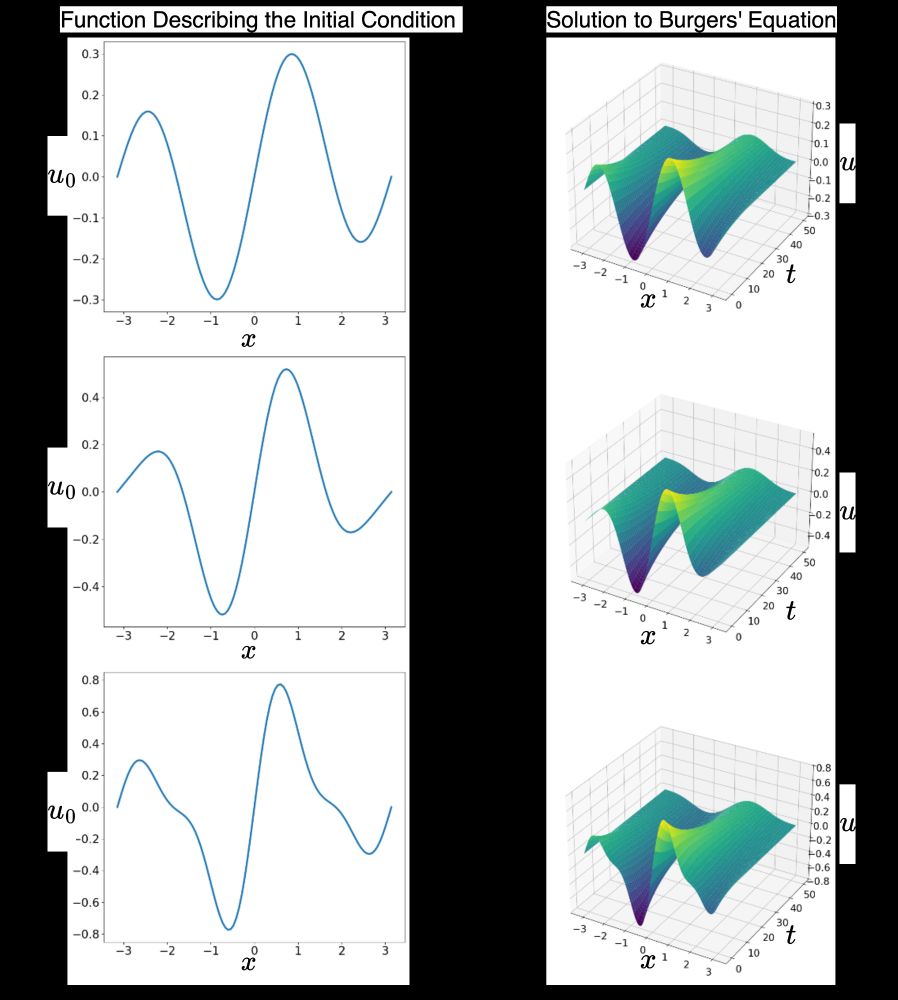

A talk at the "Bangalore Theory Seminars" of IISc, India's premier institute, where I explained our results on size lower bounds for neural models for solving PDEs. #SciML #AI4SCIENCE I cover work by one of my 1st-year PhD students, Sebastien.

youtu.be/CWvnhv1nMRY?...

stanford.io/2WJJJGN

www.acm.org/articles/acm...

In our heavily revised draft, my student @dkumar9.bsky.social gives the full proof that a form of noisy GD, Langevin Monte Carlo (#LMC), can learn arbitrary depth-2 nets.

arxiv.org/abs/2503.10428
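
For readers unfamiliar with the algorithm named above, here is a minimal sketch of a Langevin Monte Carlo update (a gradient step plus Gaussian noise scaled by `sqrt(2 * eta / beta)`) on a depth-2 net. It is illustrative only and not the setup analysed in the draft: the `tanh` activation, the squared loss with an L2 penalty, and the names and values of `width`, `eta`, `beta` and `lam` are all assumptions made for this example.

```python
import numpy as np

def net(W, a, X):
    """Depth-2 net f(x) = a^T tanh(W x), evaluated on the rows of X."""
    return np.tanh(X @ W.T) @ a

def loss_and_grads(W, a, X, y, lam):
    """L2-regularized half mean-squared error and its gradients (assumed loss)."""
    n = X.shape[0]
    H = np.tanh(X @ W.T)                      # hidden activations, shape (n, width)
    r = H @ a - y                             # residuals, shape (n,)
    loss = 0.5 * np.mean(r ** 2) + 0.5 * lam * (np.sum(W ** 2) + np.sum(a ** 2))
    grad_a = H.T @ r / n + lam * a
    G = (r[:, None] * a[None, :]) * (1.0 - H ** 2)   # chain rule through tanh
    grad_W = G.T @ X / n + lam * W
    return loss, grad_W, grad_a

def lmc(X, y, width=8, eta=1e-2, beta=1e4, lam=1e-3, steps=2000, seed=0):
    """Run LMC: w <- w - eta * grad + sqrt(2 * eta / beta) * N(0, I)."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    W = rng.normal(scale=0.5, size=(width, d))
    a = rng.normal(scale=0.5, size=width)
    noise_scale = np.sqrt(2.0 * eta / beta)   # beta is the inverse temperature
    losses = []
    for _ in range(steps):
        loss, gW, ga = loss_and_grads(W, a, X, y, lam)
        losses.append(loss)
        W = W - eta * gW + noise_scale * rng.normal(size=W.shape)
        a = a - eta * ga + noise_scale * rng.normal(size=a.shape)
    return W, a, losses

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.normal(size=(64, 3))
    # Synthetic labels from a small hypothetical "teacher" net.
    y = net(rng.normal(size=(4, 3)), rng.normal(size=4), X)
    W, a, losses = lmc(X, y)
    print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.4f}")
```

In this sketch a larger `beta` means less injected noise, so the iteration approaches plain gradient descent; a smaller `beta` makes the iterates explore more of the loss landscape.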

drsciml.github.io/drsciml/

Title: Immersion posterior: Meeting Frequentist Goals under Structural Restrictions

Time: Aug 5 16:00-17:00

Abstract: www.newton.ac.uk/seminar/45562/

Livestream: www.newton.ac.uk/news/watch-l...

drsciml.github.io/drsciml/

- We are hosting a 2-day international workshop on understanding scientific ML.

- We have leading experts from around the world giving talks.

- There might be ticketing. Watch this space!

- dl.acm.org/journal/topml

- jds.acm.org

- link.springer.com/journal/44439

- academic.oup.com/rssdat

- jmlr.org/tmlr/

- data.mlr.press

There is no reason why these can't replace everything the current conferences are doing, and most likely do it better.

openreview.net/pdf?id=ABT1X...

#optimization #deeplearningtheory

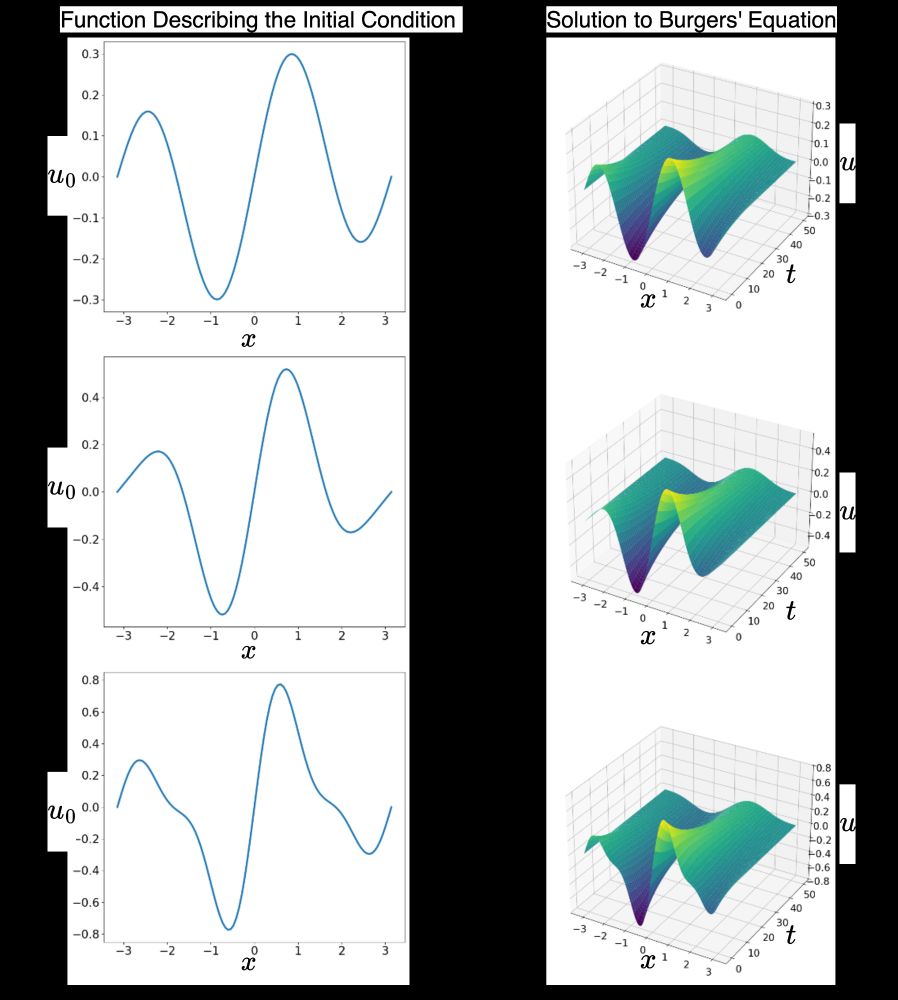

- and more specifically,

"Machine Learning in Function Spaces/Infinite Dimensions".

It's all about the 2 key inequalities on slides 27 and 33.

Both come via similar proofs.

github.com/Anirbit-AI/S...

We know of edge cases with simple PDEs where PINNs struggle, but those often aren't the cutting-edge use cases of PDEs.

arxiv.org/abs/2404.08624

In my recent work with @anirbit.bsky.social and Samyak Jha (arxiv.org/abs/2503.10428), we prove noisy gradient descent learns neural nets.