Mike Driscoll

@medriscoll.com

3.7K followers

270 following

160 posts

Founder @ RillData.com, building GenBI. Lover of fast, flexible, beautiful data tools. Lapsed computational biologist.

Posts

Media

Videos

Starter Packs

Mike Driscoll

@medriscoll.com

· May 27

Today we're launching DuckLake, an integrated data lake and catalog format powered by SQL. DuckLake unlocks next-generation data warehousing where compute is local, consistency central, and storage scales till infinity. ducklake is an open standard and we implemented it in the "ducklake" extension.

Mike Driscoll

@medriscoll.com

· Apr 15

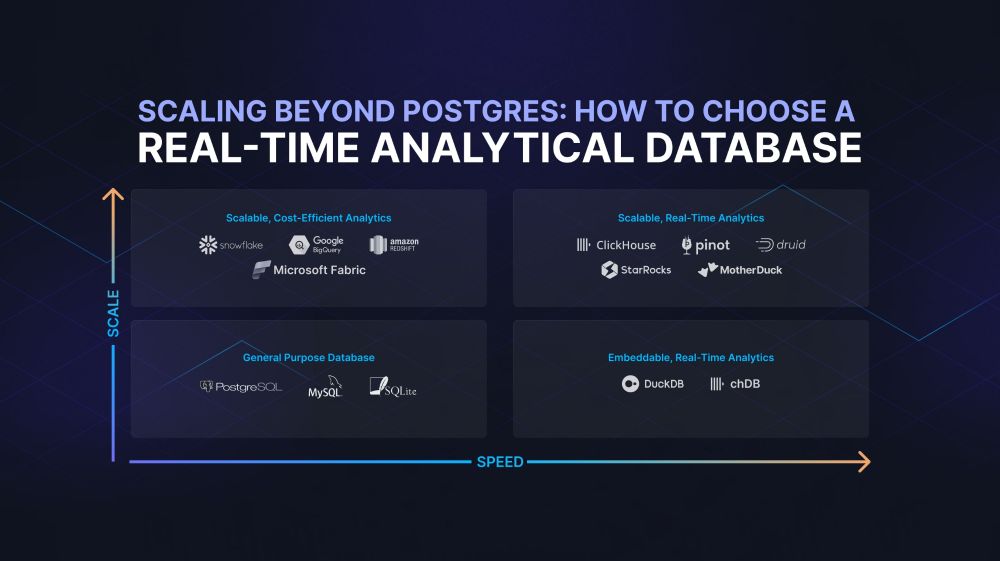

Rill | Scaling Beyond Postgres: How to Choose a Real-Time Analytical Database

This blog explores how real-time databases address critical analytical requirements. We highlight the differences between cloud data warehouses like Snowflake and BigQuery, legacy OLAP databases like ...

www.rilldata.com

Mike Driscoll

@medriscoll.com

· Apr 15

Mike Driscoll

@medriscoll.com

· Apr 15

Mike Driscoll

@medriscoll.com

· Apr 11

Mike Driscoll

@medriscoll.com

· Apr 11

Mike Driscoll

@medriscoll.com

· Apr 11

Mike Driscoll

@medriscoll.com

· Apr 11

Mike Driscoll

@medriscoll.com

· Apr 4

The past few Data Talks on the Rocks interviews have been with the creators of real-time analytical databases. For our 7th round, we decided to chat with Kishore Gopalakrishna, CEO of @startreedata.bsky.social and creator of the wildly popular database #ApachePinot.

www.rilldata.com/blog/kishore...

www.rilldata.com/blog/kishore...

Rill | Data Talks on the Rocks 7 - Kishore Gopalakrishna, StarTree

Mike Driscoll, CEO of Rill, is joined by Kishore Gopalakrishna, founder and CEO of StarTree. This video interview covers Pinot's architectural decisions and actual use cases from Uber, Stripe, and Wal...

www.rilldata.com

Mike Driscoll

@medriscoll.com

· Mar 15

Mike Driscoll

@medriscoll.com

· Mar 14

Mike Driscoll

@medriscoll.com

· Mar 14

Mike Driscoll

@medriscoll.com

· Mar 14

Mike Driscoll

@medriscoll.com

· Mar 14

Mike Driscoll

@medriscoll.com

· Mar 14