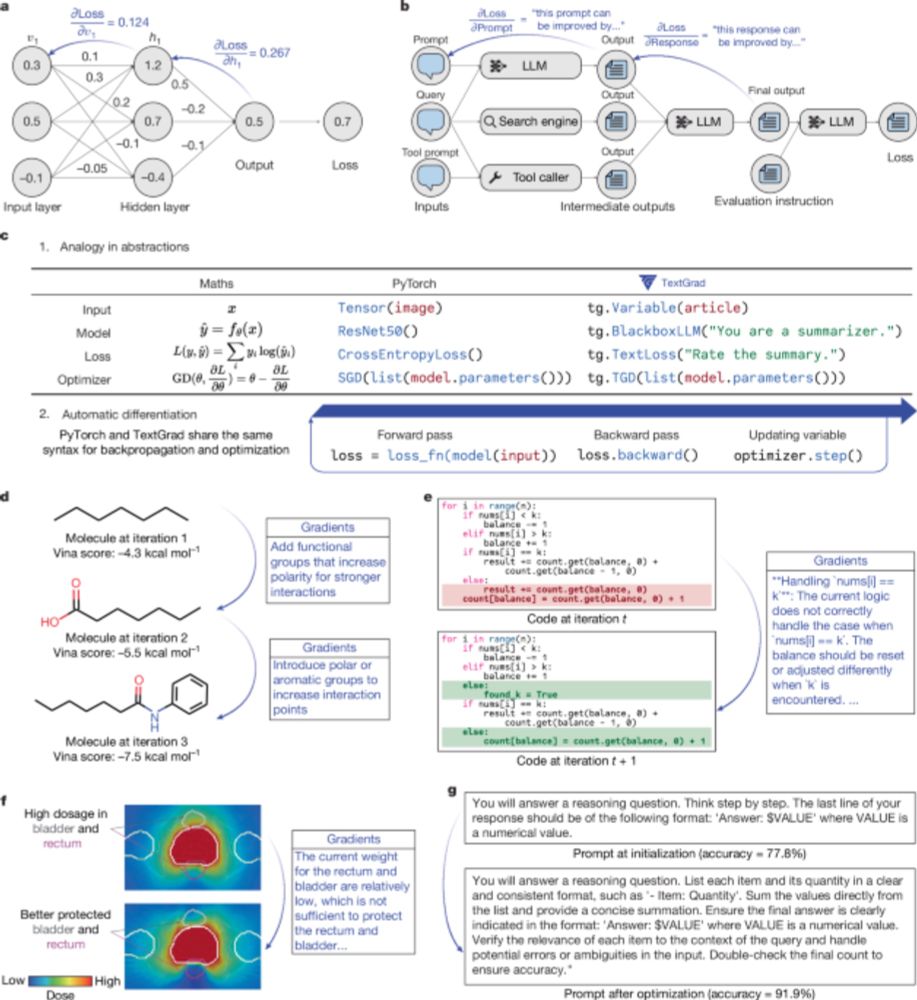

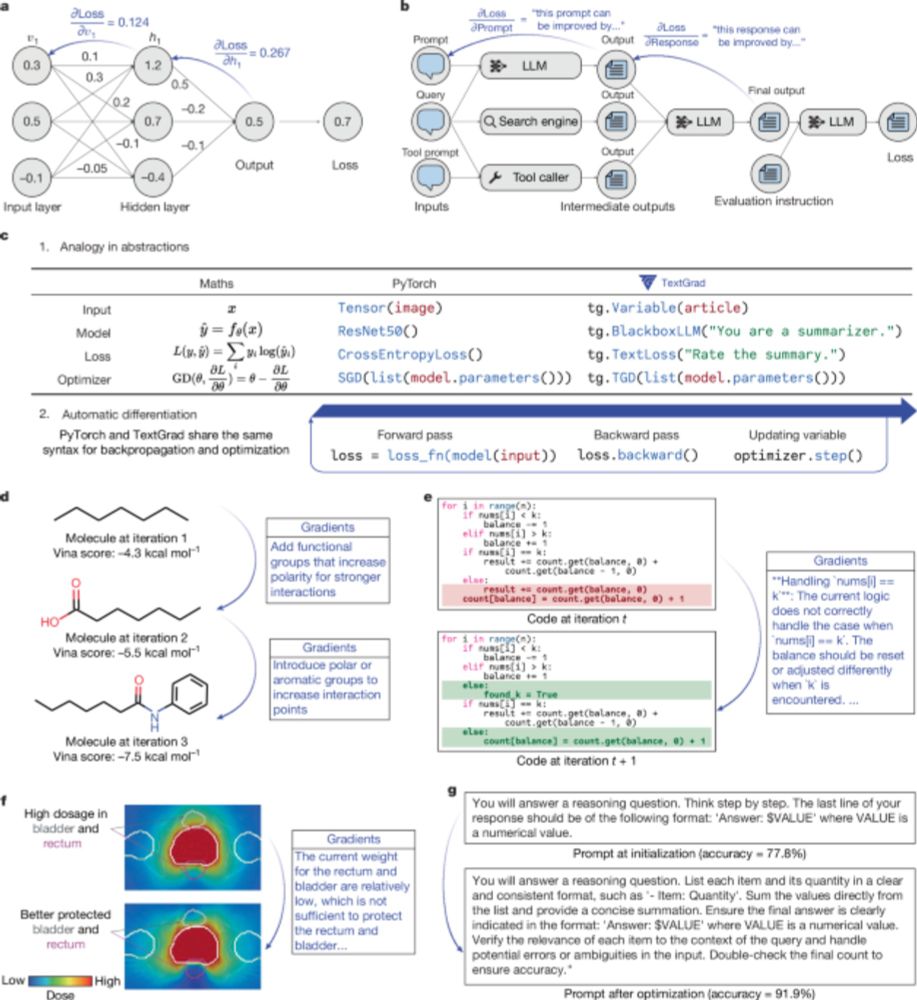

Scholars developed a versatile framework called TextGrad to improve complex AI systems by leveraging natural language feedback. @jameszou.bsky.social

Read more about this research supported by @stanfordhai.bsky.social in a new paper published in Nature: www.nature.com/articles/s41...

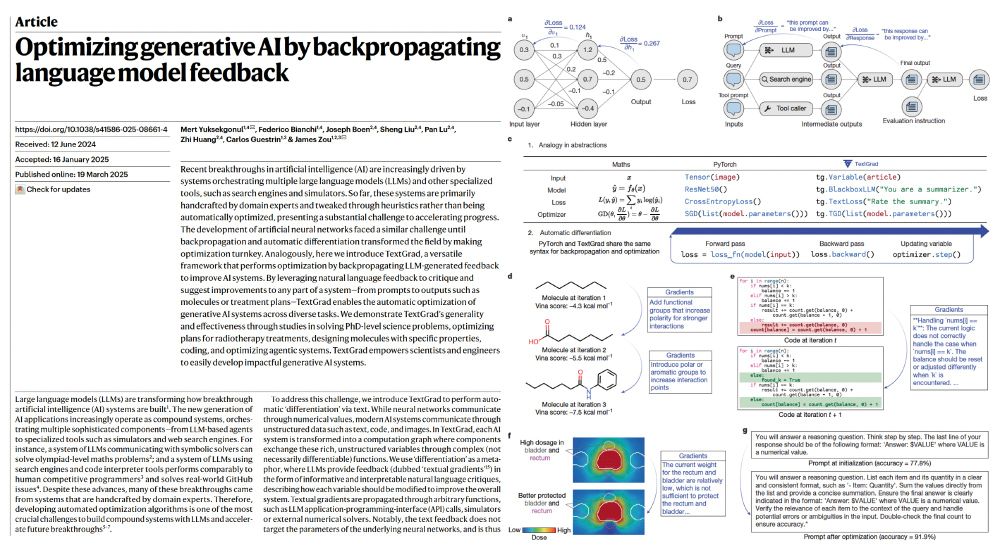

Optimizing generative AI by backpropagating language model feedback - Nature

Generative artificial intelligence (AI) systems can be optimized using TextGrad, a framework that performs optimization by backpropagating large-language-model-generated feedback; TextGrad enable...

www.nature.com

March 21, 2025 at 7:14 PM

Scholars developed a versatile framework called TextGrad to improve complex AI systems by leveraging natural language feedback. @jameszou.bsky.social

Read more about this research supported by @stanfordhai.bsky.social in a new paper published in Nature: www.nature.com/articles/s41...

March 31, 2025 at 2:30 PM

It is a really interesting tool/video, especially considering the recent DeepSeek paper on Critique Tuning. I just don't understand why you aren't using TextGrad for the prompt optimization.

April 7, 2025 at 6:05 PM

Here's the non-paywall version of our #TextGrad paper rdcu.be/efRp4! 📜

April 2, 2025 at 4:01 PM

allocating conceptual tags for queries and knowledge sources, together with a hybrid retrieval mechanism from both relevant knowledge and patient. In addition, a Med-TextGrad module using multi-agent textual gradients is integrated to ensure that the [3/5 of https://arxiv.org/abs/2505.19538v1]

May 27, 2025 at 6:28 AM

Try AdalFlow, it combines DSPy and TextGrad... But it's a bit more complex to set up.

November 21, 2024 at 9:33 PM

[2024/06/17 ~ 06/23] Top ML Papers of the Week

(by 9bow)

https://d.ptln.kr/4680

#paper #top-ml-papers-of-the-week #rag #claude #code-llm #llm-math #open-sora #tree-search #goldfish-loss #planrag #long-context-language-model #textgrad #deepseek-coder-v2

[2024/06/17 ~ 06/23] Top ML Papers of the Week

[2024/06/17 ~ 06/23] Top ML Papers of the Week PyTorchKR🔥🇰🇷 🤔💬 Looking at the papers selected this week, two major trends stand out. First, most of the papers focus on topics related to natural language processing (NLP). In particular, methods for improving the efficiency of long-context language models (LMs) and of information retrieval and question answering (QA) systems are emerging as major areas of interest. For example, papers such as 'Can Long-Context Language Models Subsume Retrieval, RAG, SQL, and More?' explore the potential of language models that understand long contexts, while 'PlanRAG' and 'From RAG to Rich Parameters' address improving information retrieval and question answering systems through new...

d.ptln.kr

June 24, 2024 at 1:43 AM

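TextGrad, which I've been curious about for a long time. The idea is excellent. It can be used right away with all sorts of models. How it works is easy to follow, too.

However, I still don't quite get the analogy with "differentiation". It feels like it clashes with my understanding of differentiation in mathematics and gets in the way of understanding. It's better to think of it as something entirely different.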

Mert Yuksekgonul, Federico Bianchi, Joseph Boen, Sheng Liu, Zhi Huang, Carlos Guestrin, James Zou

TextGrad: Automatic "Differentiation" via Text

https://arxiv.org/abs/2406.07496

July 19, 2024 at 7:37 PM

TextGrad: Optimize LLMs with Feedback 🚀

Transforms prompts for #LLM accuracy using backpropagated textual gradients

📈 Teacher-student model refines prompts

🔄 Backpropagates text-based optimization

🧠 Enhances GPQA, MMLU, business tasks

👉 Discuss in Discord: linktr.ee/qdrddr

#AI #RAG #MachineLearning

GitHub - zou-group/textgrad: TextGrad: Automatic "Differentiation" via Text -- using large language models to backpropagate textual gradients.

TextGrad: Automatic "Differentiation" via Text -- using large language models to backpropagate textual gradients. - zou-group/textgrad

github.com

February 24, 2025 at 6:59 PM

What's TextGrad?

It uses natural language feedback to help optimize AI systems, kinda like PyTorch but for language models. It draws inspiration from DSPy, another Stanford-developed framework for optimizing LLM-based systems. The basic claim is that it replaces manual prompt engineering with automatic optimization.

November 21, 2024 at 2:32 PM
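
To make the "like PyTorch but for language models" comparison a bit more concrete, here is a minimal toy sketch of the loop shape: evaluate in words, attach the critique to the variable, then rewrite the variable using that critique. Everything in it (`TextVariable`, `stub_critic`, `stub_editor`, `optimize`) is a hypothetical stand-in written for illustration. It is not TextGrad's API, and the two stubs are hard-coded rules where a real system would call an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TextVariable:
    """A piece of text being optimized, plus the feedback collected for it (hypothetical)."""
    value: str
    feedback: List[str] = field(default_factory=list)


def stub_critic(text: str) -> str:
    """Stand-in for an LLM evaluator: returns a critique, or "" when satisfied."""
    if "step by step" not in text:
        return "Ask the model to reason step by step."
    if "final answer" not in text:
        return "Ask for a clearly marked final answer."
    return ""


def stub_editor(text: str, critique: str) -> str:
    """Stand-in for an LLM rewriter: applies the critique to the text."""
    if "step by step" in critique:
        return text + " Think step by step."
    if "final answer" in critique:
        return text + " End with a clearly marked final answer."
    return text


def optimize(
    var: TextVariable,
    critic: Callable[[str], str],
    editor: Callable[[str, str], str],
    max_steps: int = 5,
) -> TextVariable:
    for _ in range(max_steps):
        critique = critic(var.value)              # "loss": evaluation expressed in words
        if not critique:
            break                                 # nothing left to criticize
        var.feedback.append(critique)             # "backward": attach the textual feedback
        var.value = editor(var.value, critique)   # "step": rewrite using the feedback
    return var


prompt = optimize(TextVariable("Solve the problem."), stub_critic, stub_editor)
print(prompt.value)
print(prompt.feedback)
```

The only numbers involved are the loop counter; the "gradient" is just a sentence saying what to change, which is why the quality of the critic matters so much.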

You heard about DSPy? Let's talk TextGrad. The upgraded version in AI optimization.

I've been diving into TextGrad lately, and I thought I'd share some insights. It's a Python framework from Stanford that's making waves in AI optimization. Here's the lowdown: 🧵

November 21, 2024 at 2:32 PM

💡The key idea of #textgrad is to optimize by backpropagating textual gradients produced by #LLM

Paper: www.nature.com/articles/s41...

Code: github.com/zou-group/te...

Amazing job by Mert Yuksekgonul leading this project w/ Fede Bianchi, Joseph Boen, Sheng Liu, Pan Lu, Carlos Guestrin, Zhi Huang

Optimizing generative AI by backpropagating language model feedback - Nature

Generative artificial intelligence (AI) systems can be optimized using TextGrad, a framework that performs optimization by backpropagating large-language-model-generated feedback; TextGrad enable...

www.nature.com

March 19, 2025 at 4:47 PM

It's great, but we find the setup to be great too.. :)

Will try textgrad alone as well to compare.

How to create good eval prompts is still a part I don't quite get.

November 23, 2024 at 11:41 AM

Mert Yuksekgonul, Federico Bianchi, Joseph Boen, Sheng Liu, Zhi Huang, Carlos Guestrin, James Zou

TextGrad: Automatic "Differentiation" via Text

https://arxiv.org/abs/2406.07496

June 12, 2024 at 2:02 PM

arXiv:2502.19980v1 Announce Type: new

Abstract: Recent studies highlight the promise of LLM-based prompt optimization, especially with TextGrad, which automates "differentiation" via texts and backpropagates textual [1/9 of https://arxiv.org/abs/2502.19980v1]

February 28, 2025 at 6:06 AM

TextGrad enables backpropagation of LLM-generated feedback, automating the optimization of generative AI systems. From solving PhD-level problems to designing molecules and refining treatment plans, it enhances AI performance across diverse tasks.

Nature: www.nature.com/articles/s41...

Optimizing generative AI by backpropagating language model feedback - Nature

Generative artificial intelligence (AI) systems can be optimized using TextGrad, a framework that performs optimization by backpropagating large-language-model-generated feedback; TextGrad enable...

www.nature.com

March 20, 2025 at 6:54 AM

Do u know about DSPy and TextGrad? They may be relevant.

July 16, 2025 at 4:29 PM

Why You Might Not Want to Use It:

└ Still new, so it might have some quirks

└ Could struggle with super complex AI systems

└ Depends on high-quality feedback to work well

What do you think?

Could TextGrad be useful in your work? Have you already used it or is it just a gimmick?

November 21, 2024 at 2:32 PM

3/5 Inference-time compute. With models topping out, this is the next frontier for improving AI performance. Good intro on the @huggingface blog:

huggingface.co/spaces/Hugg...

And there is a lot more we can do, e.g. prompt optimization (DSPy/ TextGrad), workflow and UI.

December 17, 2024 at 6:59 PM

So these days even a paper like this (TextGrad: Automatic "Differentiation" via Text, github.com/zou-group/te... ) can get into Nature, huh.

www.nature.com/articles/s41...

Optimizing generative AI by backpropagating language model feedback - Nature

Generative artificial intelligence (AI) systems can be optimized using TextGrad, a framework that performs optimization by backpropagating large-language-model-generated feedback; TextGrad enable...

www.nature.com

April 5, 2025 at 1:53 AM

I successfully optimised a context compression prompt with DSPy GEPA and TextGrad

github.com/Laurian/cont...

GitHub - Laurian/context-compression-experiments-2508: prompt engineering experiments with DSPy GEPA and TextGrad

prompt engineering experiments with DSPy GEPA and TextGrad - Laurian/context-compression-experiments-2508

github.com

September 3, 2025 at 12:46 PM

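The advantage of RAG over prompt gradient descent or LoRA is that it makes it easy to "partially" "forget" knowledge. I wonder whether TextGrad can achieve both composability and automatic optimization.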

April 21, 2025 at 4:13 AM

I had a lot of fun discussing #textgrad on the @nature.com podcast with @climateadam.bsky.social! It starts at around 12 minutes here www.nature.com/articles/d41...

Tiny satellite sets new record for secure quantum communication

Hear the biggest stories from the world of science | 19 March 2025

www.nature.com

March 21, 2025 at 2:20 PM

⚡️Really thrilled that #textgrad is published in @nature.com today!⚡️

We present a general method for genAI to self-improve via our new *calculus of text*.

We show how this optimizes agents🤖, molecules🧬, code🖥️, treatments💊, non-differentiable systems🤯 + more!

March 19, 2025 at 4:47 PM

My concern (beyond boilerplate reliability issues with LLM-generated material) is the metaphor of backpropagation. How does TextGrad actually translate natural language critique into concrete system adjustments across potentially non-differentiable components like prompts or external tool calls? 7/8

April 16, 2025 at 8:46 PM
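
One way to picture how a critique could travel across a non-differentiable step is to give each node in the pipeline a function that turns feedback on its output into feedback for its inputs. The sketch below does this for a two-step chain (prompt, then answer). It is a toy under stated assumptions: `Node`, `stub_model`, `stub_evaluate`, `backpropagate`, and `stub_update` are hypothetical names, none of them is TextGrad's API, and each stub is a hard-coded rule standing in for what would be an LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Node:
    """A text value in the computation graph (hypothetical, not TextGrad's class)."""
    name: str
    value: str
    parents: List["Node"] = field(default_factory=list)
    # Maps feedback on this node to feedback for one of its parents.
    backward_fn: Optional[Callable[[str, "Node"], str]] = None
    feedback: List[str] = field(default_factory=list)


def stub_model(prompt: Node) -> Node:
    """Stand-in for an LLM call: the output depends on the prompt text."""
    text = "42, because 6 * 7 = 42" if "explain" in prompt.value.lower() else "42"

    def to_parent(fb: str, parent: Node) -> str:
        # A real system would ask an LLM to do this translation.
        return f"The {parent.name} led to an output criticized as: {fb}"

    return Node("answer", text, parents=[prompt], backward_fn=to_parent)


def stub_evaluate(answer: Node) -> str:
    """Stand-in for an LLM judge: returns a critique, or "" when satisfied."""
    return "" if "because" in answer.value else "No explanation is given."


def backpropagate(node: Node, fb: str) -> None:
    """Record feedback on a node, then pass a translated version to its ancestors."""
    node.feedback.append(fb)
    for parent in node.parents:
        backpropagate(parent, node.backward_fn(fb, parent))


def stub_update(prompt: Node) -> None:
    """Stand-in for an LLM-driven rewrite of a leaf node, based on its feedback."""
    if any("No explanation" in fb for fb in prompt.feedback):
        prompt.value += " Explain your reasoning."
    prompt.feedback.clear()


prompt = Node("system prompt", "Answer the question.")
answer = stub_model(prompt)
critique = stub_evaluate(answer)
if critique:
    backpropagate(answer, critique)  # the critique reaches the prompt as text
    stub_update(prompt)              # the prompt is rewritten, not numerically adjusted
    answer = stub_model(prompt)      # rerun the pipeline with the new prompt

print(prompt.value)
print(answer.value)
```

Nothing here is differentiated in the calculus sense. The "chain rule" is just each step restating downstream criticism in terms of its own inputs, so the result can only be as reliable as those restatements.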
