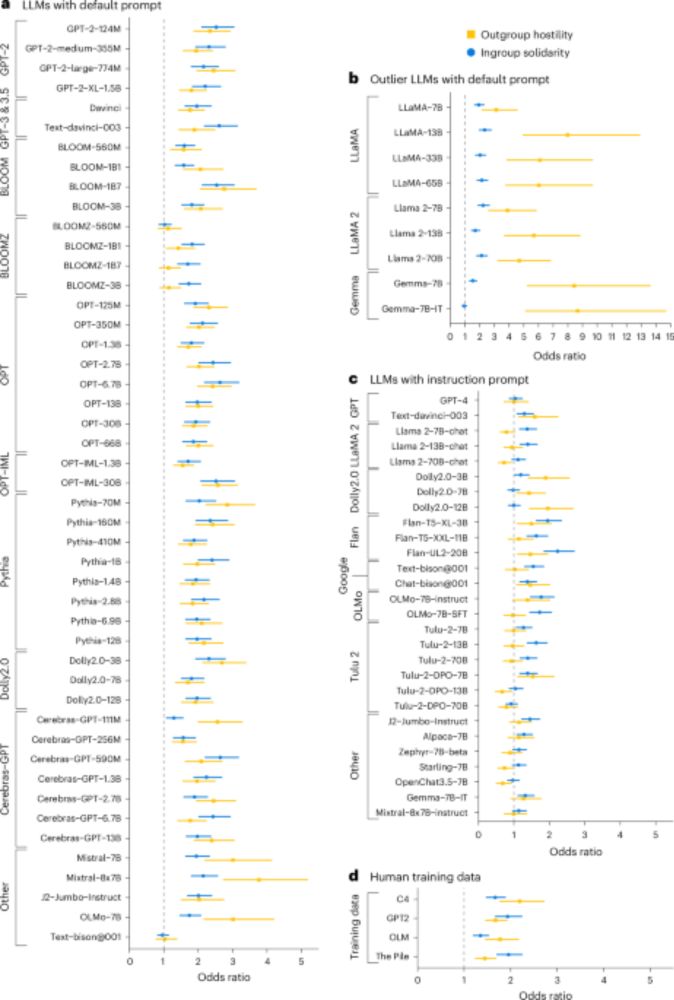

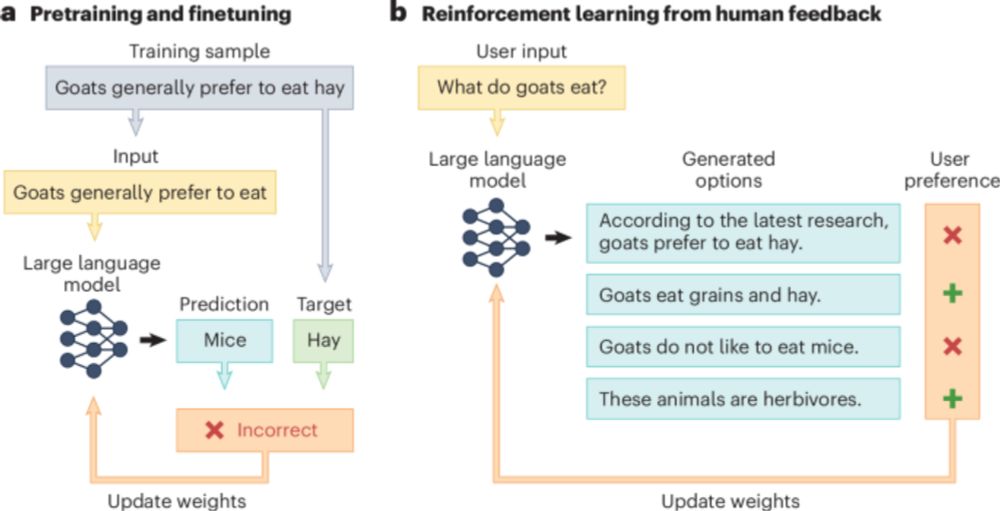

Do large language models (LLMs) exhibit social identity biases like humans?

(co-led by @tiancheng.bsky.social, together with @steverathje.bsky.social, Nigel Collier, @profsanderlinden.bsky.social, and Jon Roozenbeek)

1/

www.nature.com/articles/s43...

awards.womenofthefuture.co.uk/our-alumni-c...

"For eighteen years, the awards have shone a light on trailblazing women"

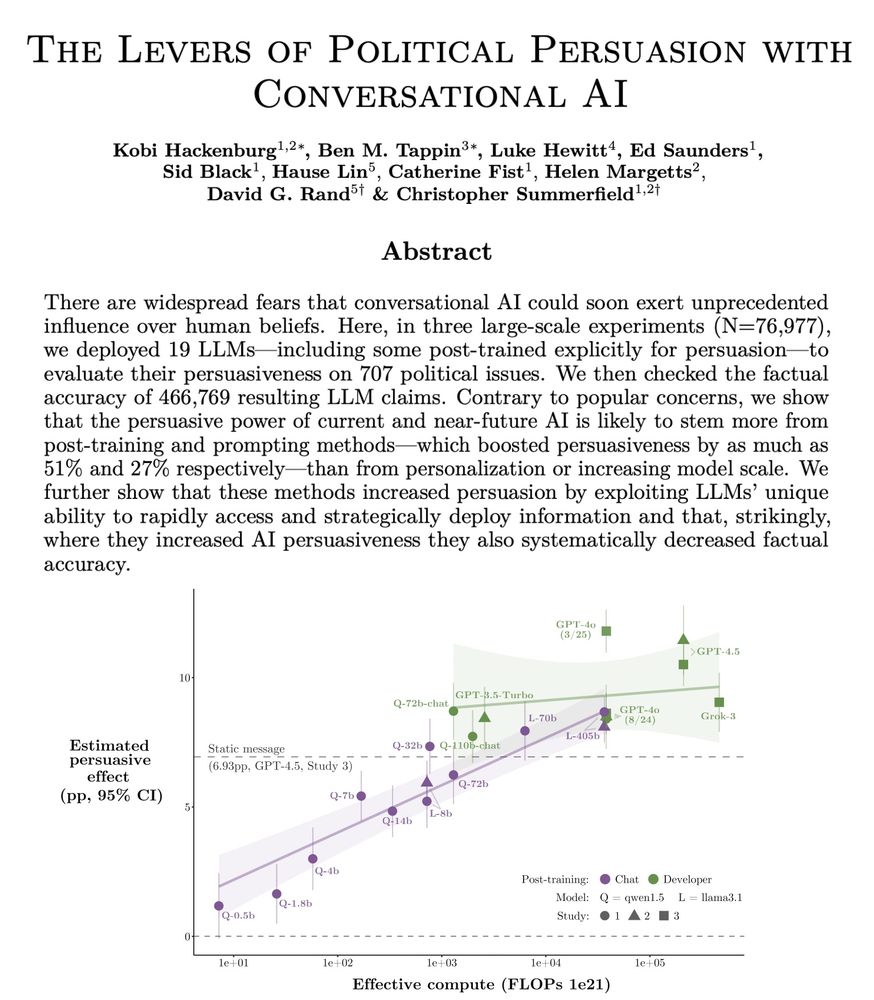

We examine “levers” of AI persuasion: model scale, post-training, prompting, personalization, & more!

🧵:

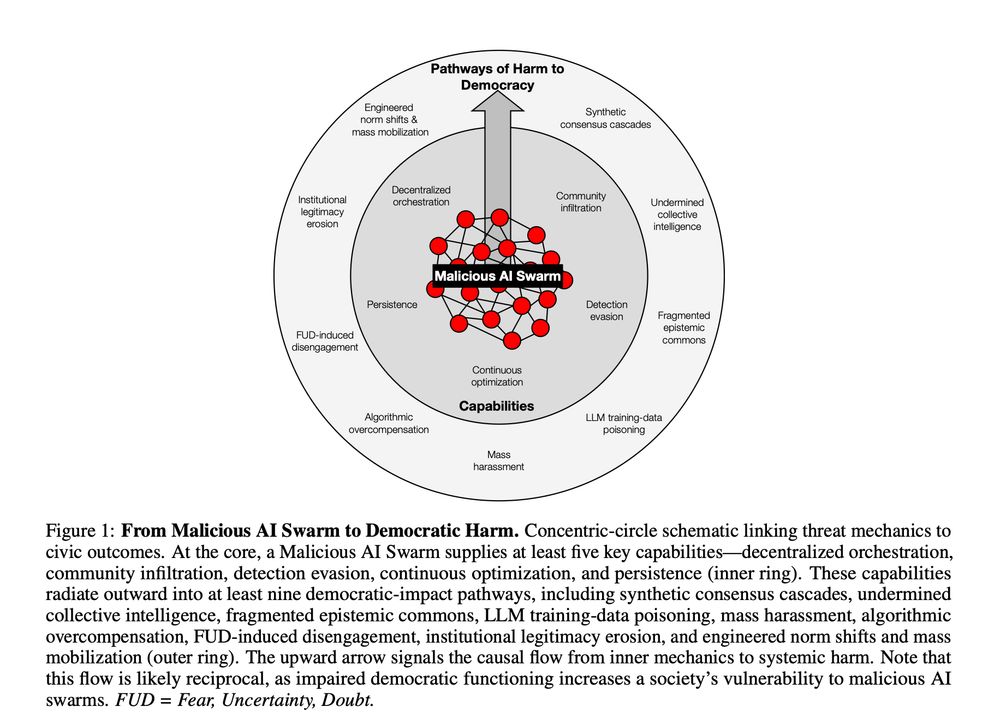

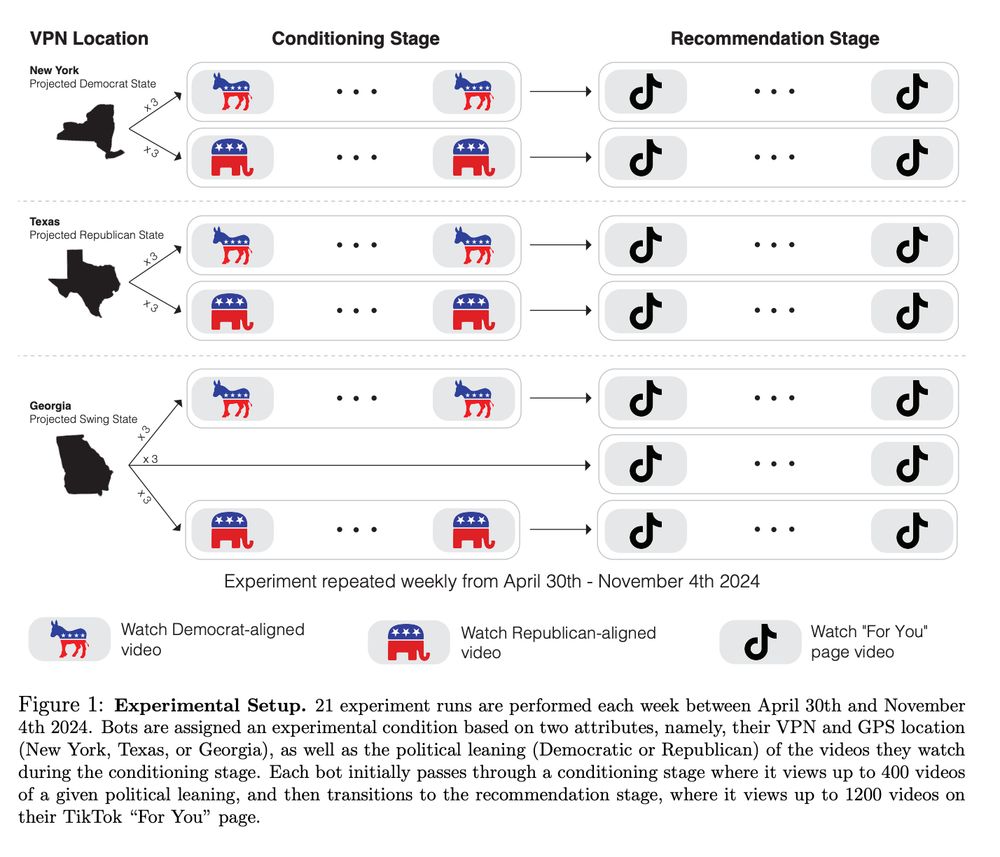

Our new paper explains how AI swarms can fabricate grassroots consensus, fragment shared reality, engage in mass harassment, interfere with elections, and erode institutional trust: osf.io/preprints/os...

One of these days, these bots are gonna walk all over you!

theconversation.com/what-is-ai-s...

How should we actually write rules for AI?

C3AI provides a way to:

1. Design effective constitutions using psychology and public input.

2. Evaluate how well fine-tuned models actually follow the rules.

www.sciencedirect.com/science/arti...

Led by the brill @yarakyrychenko.bsky.social & Fritz Götz

authors.elsevier.com/sd/article/S...

This is what we mean when we say Bluesky is open: your identity and followers belong to you. It took 30s to sign up for this new, independent app, and everything is there.

Can I interest you in some of the freshest thinking coming out of the academy today?

Try ePODstemology!

Our latest episode is Cambridge's Yara Kyrychenko on socially responsible AI.

@yarakyrychenko.bsky.social from Cambridge's Social Decision-Making Lab is on to talk about bots: how can they be a force for good? Tune in to find out.

www.buzzsprout.com/1763534/epis...

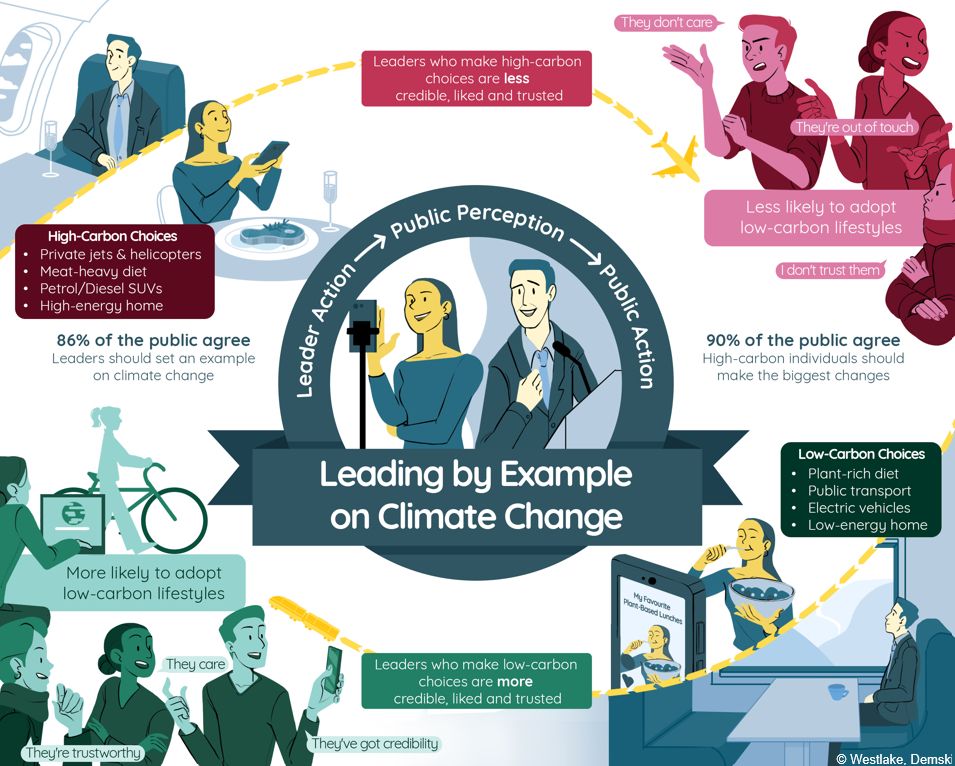

CCC says leading by example increases public buy-in and behaviour change @thecccuk.bsky.social

🚨So, we've made this new infographic showing how it works...

🧵

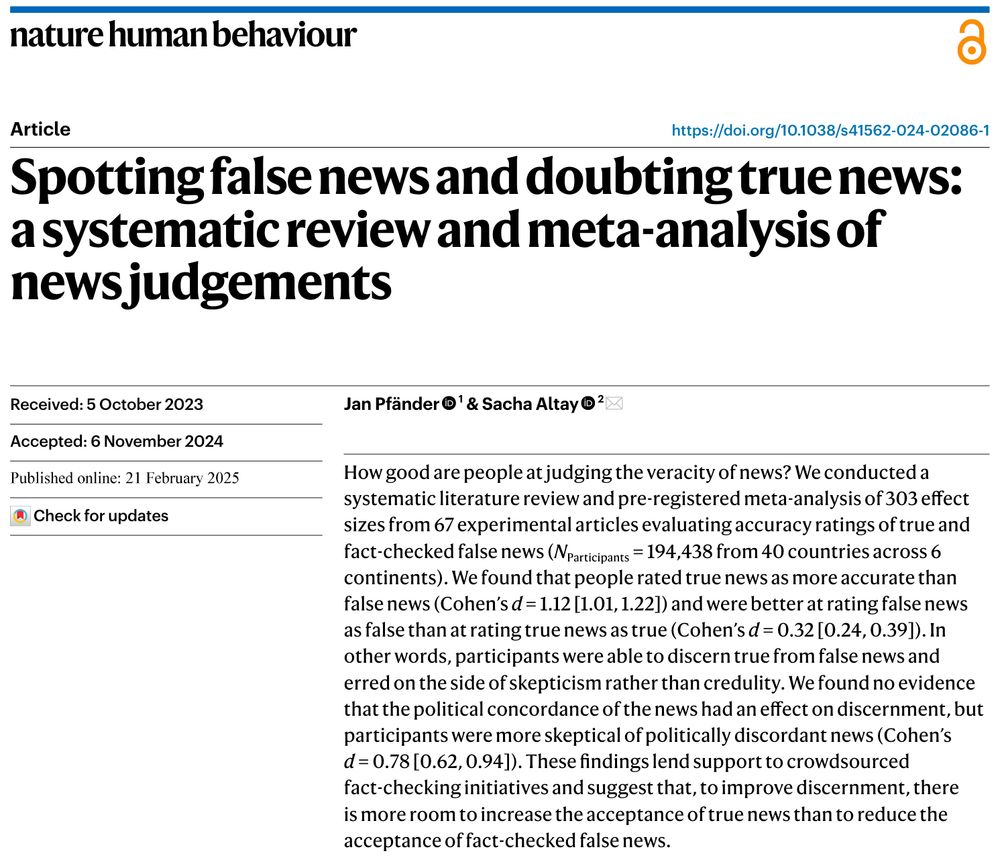

Can people tell true from false news?

Yes! Our meta-analysis shows that people rate true news as more accurate than false news (d = 1.12) and are better at spotting false news than at recognizing true news (d = 0.32).

www.nature.com/articles/s41...

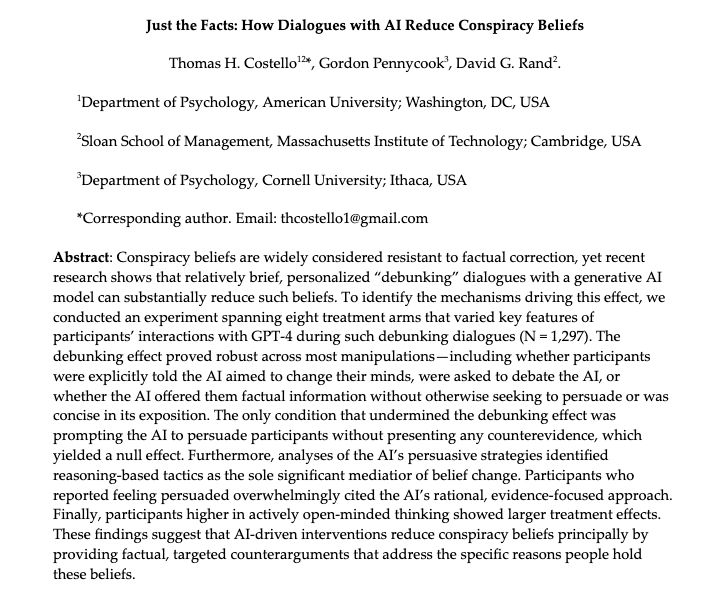

TLDR: facts

osf.io/preprints/ps...

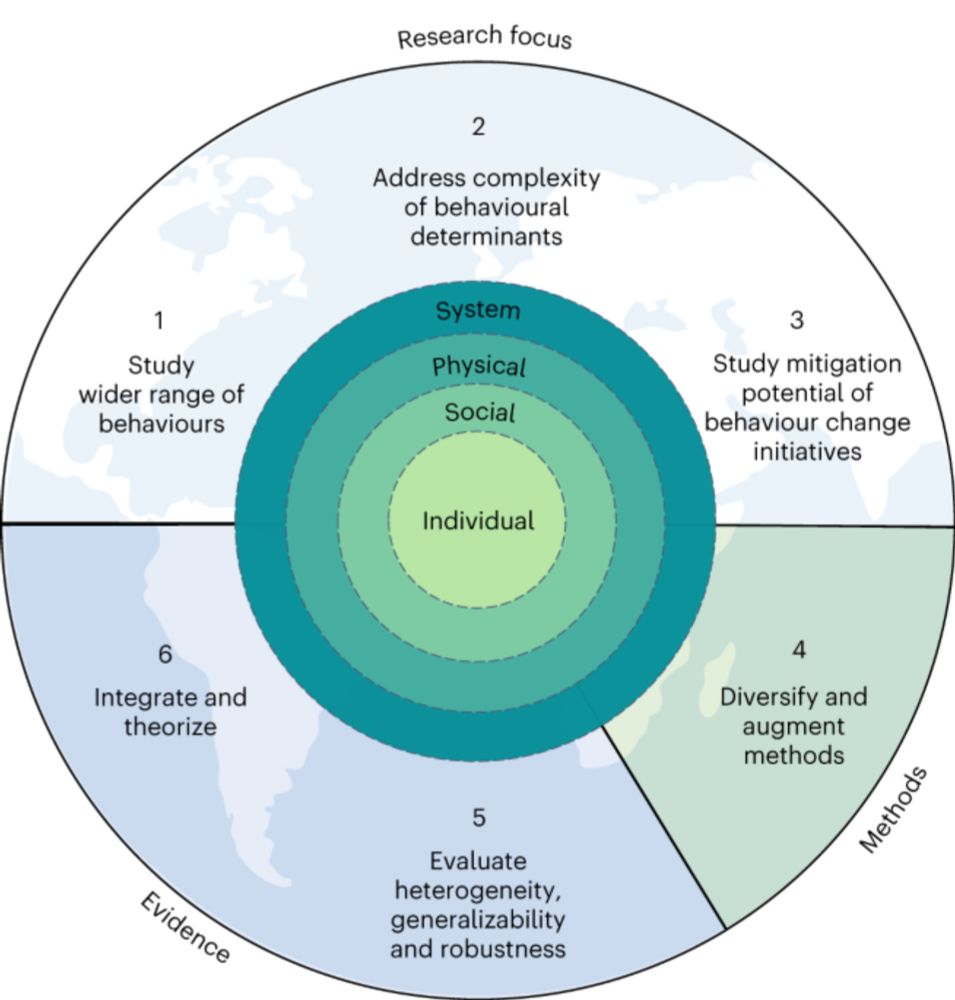

We offered some suggestions for this in our paper.

@cameronbrick.bsky.social @colognaviktoria.bsky.social

www.nature.com/articles/s41...

A few quotes…🧵

Please share widely.

Want more info? See here: www.linkedin.com/feed/update/...

(1) The prevalence of misinformation in society is substantial when properly defined.

(2) Misinformation causally impacts attitudes and behaviors.

psycnet.apa.org/fulltext/202...

🔓https://rdcu.be/d5owe

Check out the amazing (original) paper here: www.nature.com/articles/s43...