If you're interested in learning more about 🤗 Transformers.js, I highly recommend checking it out!

👉 www.youtube.com/watch?v=KR61...

In-browser tool calling & MCP is finally here, allowing LLMs to interact with websites programmatically.

To show what's possible, I built a demo using Liquid AI's new LFM2 model, powered by 🤗 Transformers.js.
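
The post doesn't show how the demo wires tool calls to the page, so here is only a minimal sketch of the general pattern: the model emits a structured call, and the page looks up and runs a matching function. The JSON shape `{ name, arguments }`, the `tools` registry, and `runToolCall` are assumptions made for illustration, not the actual format used by LFM2 or Transformers.js.

```javascript
// Hypothetical registry of functions the page exposes to the model.
const tools = {
  get_page_title: () => "Demo page",
  add: ({ a, b }) => a + b,
};

// Parse one model-emitted tool call (assumed JSON shape) and run it.
function runToolCall(rawCall, registry = tools) {
  let call;
  try {
    call = JSON.parse(rawCall);
  } catch {
    return { ok: false, error: "tool call is not valid JSON" };
  }
  if (call === null || typeof call !== "object") {
    return { ok: false, error: "tool call must be a JSON object" };
  }
  // Only accept names the page registered itself, never inherited properties.
  const fn = Object.hasOwn(registry, call.name) ? registry[call.name] : undefined;
  if (typeof fn !== "function") {
    return { ok: false, error: `unknown tool: ${call.name}` };
  }
  return { ok: true, result: fn(call.arguments ?? {}) };
}

console.log(runToolCall('{"name":"add","arguments":{"a":2,"b":3}}'));
```

Returning an `{ ok, error }` object instead of throwing keeps the loop going when the model produces a malformed call, so the error text can be fed back to it as the tool result.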

🗣️ Transcribe videos, meeting notes, songs and more

🔐 Runs on-device, meaning no data is sent to a server

🌎 Multilingual (8 languages)

🤗 Completely free (forever) & open source

It has an estimated ELO of ~1400... can you beat it? 👀

(runs on both mobile and desktop)

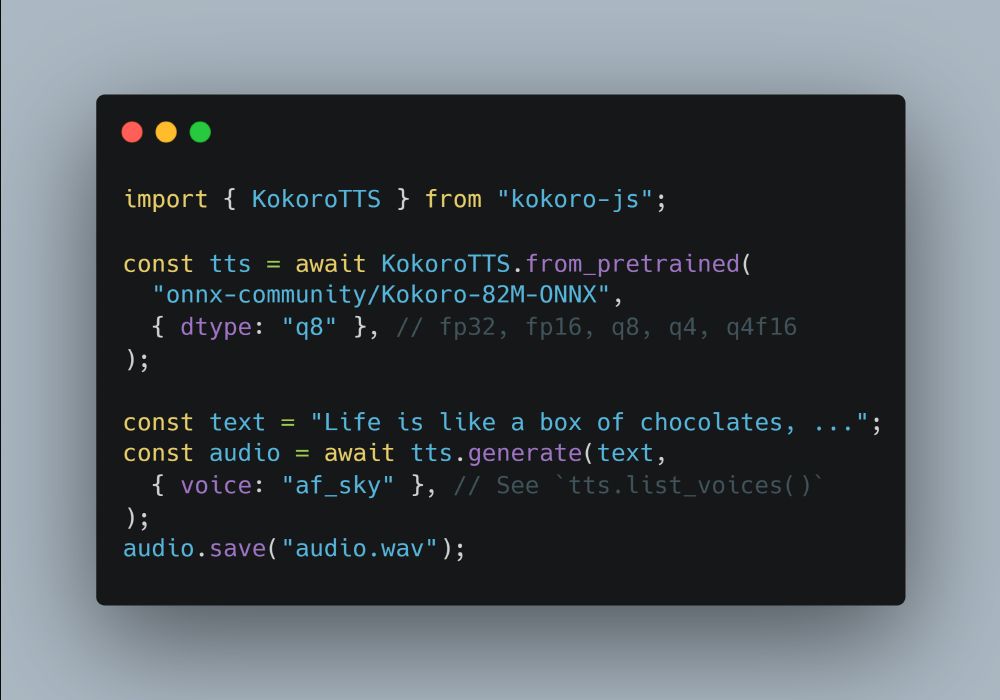

Generate 10 seconds of speech in ~1 second for $0.

What will you build? 🔥
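
To put the speed claim in the usual terms: speech synthesis throughput is often quoted as a real-time factor (compute time divided by audio duration). A tiny sketch using the figures from the post; the function name is made up here.

```javascript
// Real-time factor: seconds of compute per second of audio produced.
function realTimeFactor(computeSeconds, audioSeconds) {
  if (audioSeconds <= 0) throw new RangeError("audioSeconds must be positive");
  return computeSeconds / audioSeconds;
}

const rtf = realTimeFactor(1, 10); // ~1 s of compute for 10 s of speech, per the post
console.log(`RTF ${rtf}, i.e. about ${Math.round(1 / rtf)}x faster than real time`);
```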

Link to models/samples: huggingface.co/onnx-communi...

Huge kudos to the Kokoro TTS community, especially taylorchu for the ONNX exports and Hexgrad for the amazing project! None of this would be possible without you all! 🤗

Try it out yourself: huggingface.co/spaces/webml...

👉 npm i kokoro-js 👈

Link to demo (+ sample code) in 🧵

Here's MiniThinky-v2 (1B) running 100% locally in the browser at ~60 tps (no API calls)! I can't wait to see what you build with it!

Demo + source code in 🧵👇

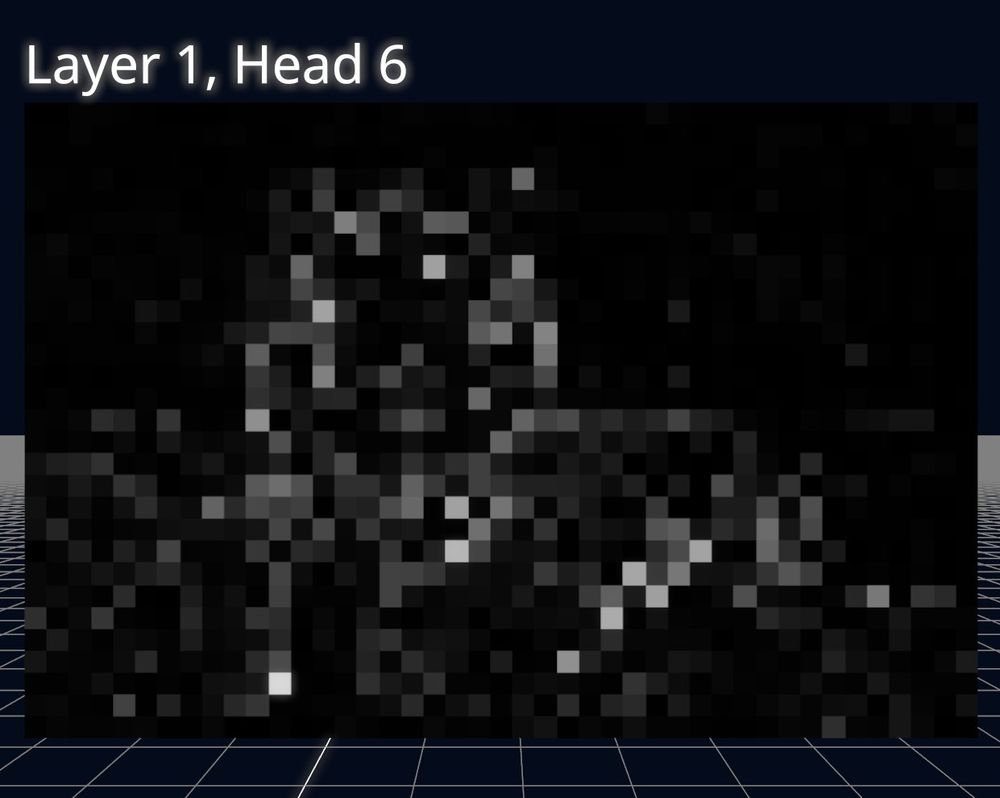

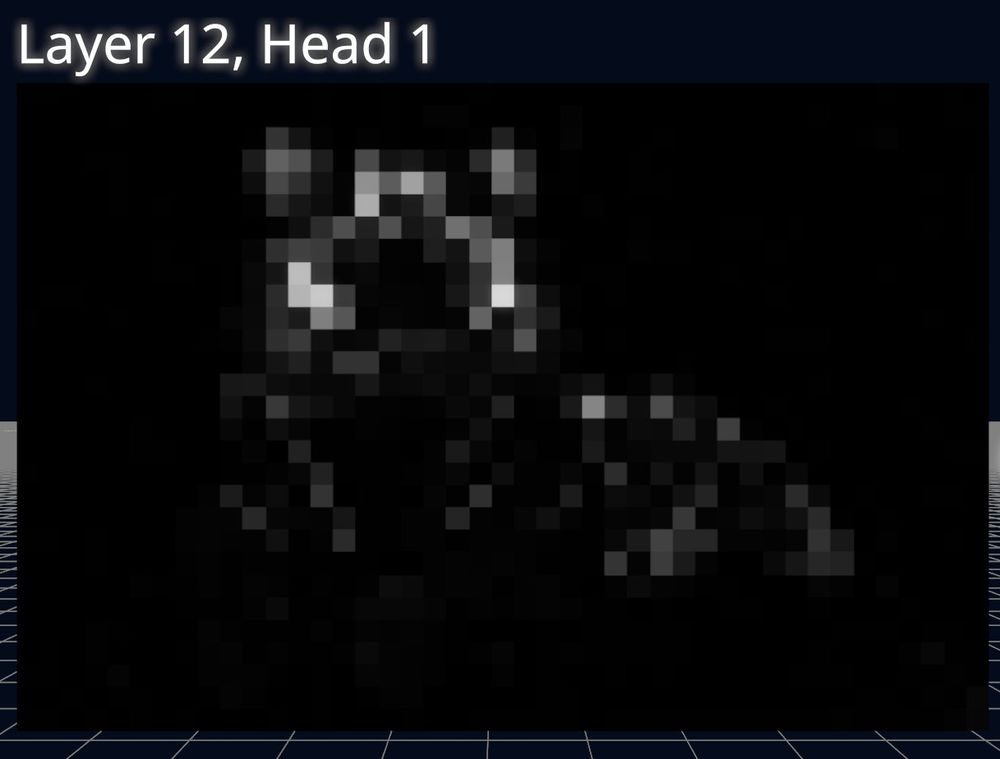

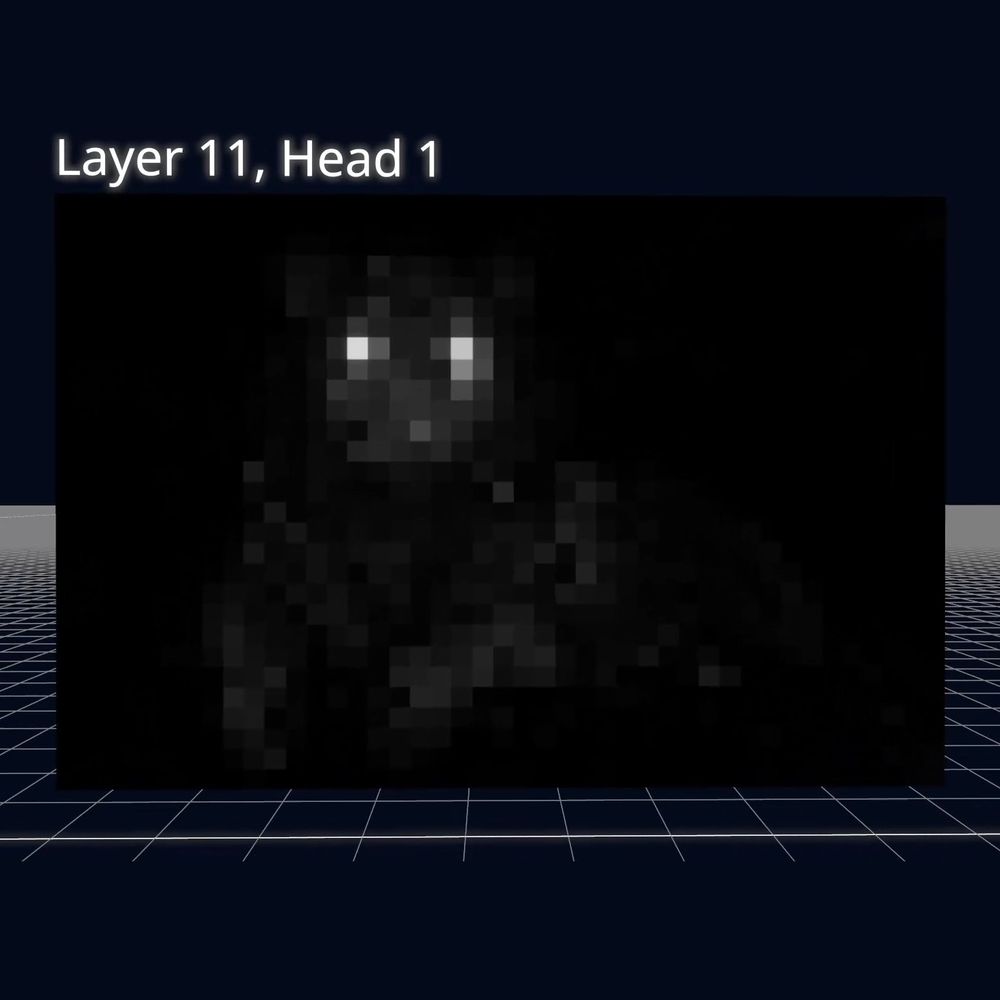

First layer (1) – noisy and diffuse, capturing broad general patterns.

Last layer (12) – focused and precise, highlighting specific features.
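
One hedged way to put a number on "diffuse" versus "focused" is the Shannon entropy of an attention row: a near-uniform row scores high, a peaked one scores low. The two rows below are made-up values for illustration, not outputs from the model in the demo.

```javascript
// Shannon entropy (in bits) of one attention row, i.e. a probability distribution.
function attentionEntropy(weights) {
  return -weights.reduce((sum, p) => (p > 0 ? sum + p * Math.log2(p) : sum), 0);
}

const diffuseRow = [0.25, 0.25, 0.25, 0.25]; // made-up "early layer" row
const focusedRow = [0.97, 0.01, 0.01, 0.01]; // made-up "late layer" row

console.log("diffuse:", attentionEntropy(diffuseRow).toFixed(3), "bits");
console.log("focused:", attentionEntropy(focusedRow).toFixed(3), "bits");
```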

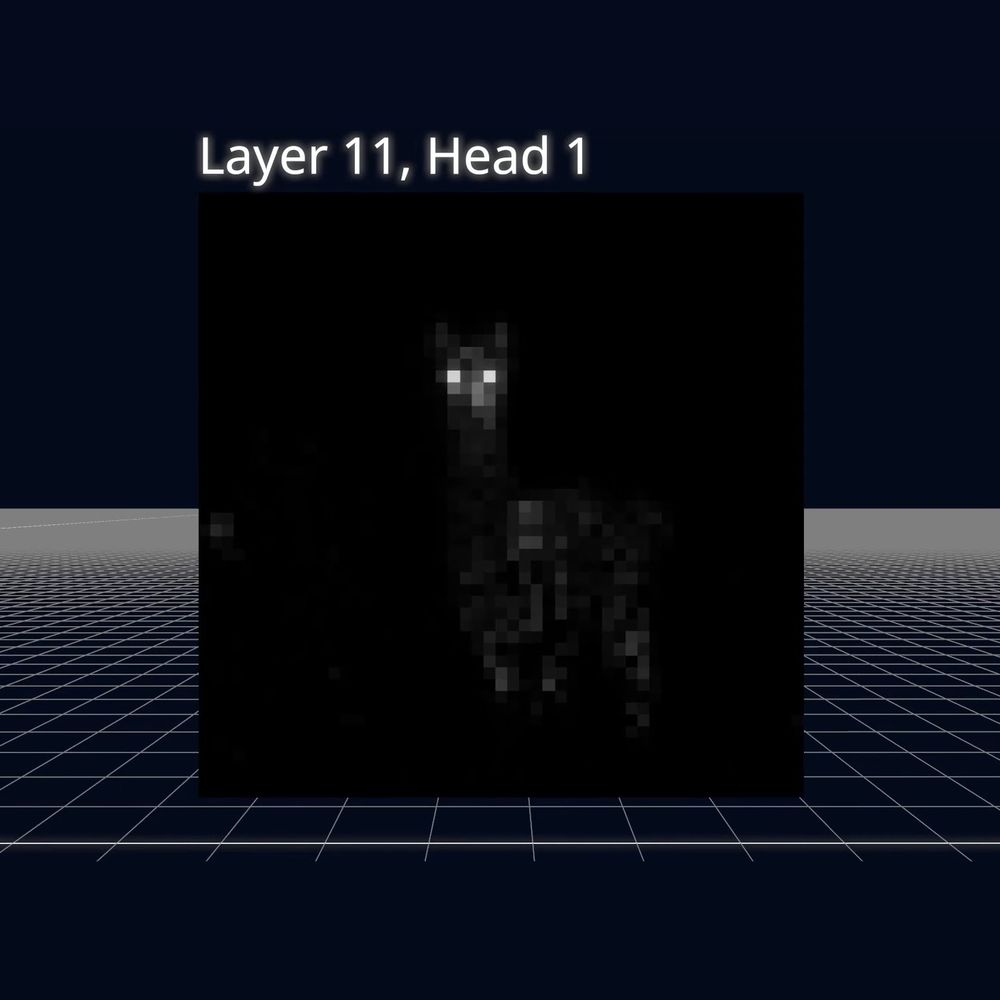

It's fascinating to see what each attention head learns to "focus on". For example, layer 11, head 1 seems to identify eyes. Spooky! 👀

This means you can analyze your own images for free: simply click the image to open the file dialog.

E.g., the model recognizes that long necks and fluffy ears are defining features of llamas! 🦙

I built a web app to interactively explore the self-attention maps produced by ViTs. This explains what the model is focusing on when making predictions, and provides insights into its inner workings! 🤯

Try it out yourself! 👇
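
For the mechanics behind an attention map: each row is a softmax over scaled query-key dot products. This is not the app's source, just a minimal single-head sketch with made-up 2-D vectors. Real ViTs use learned projections, many heads, and hundreds of patch tokens.

```javascript
// Numerically stable softmax over one row of scores.
function softmax(row) {
  const max = Math.max(...row);
  const exps = row.map((x) => Math.exp(x - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

// Attention weights for one head: softmax(Q K^T / sqrt(d)), computed row by row.
function attentionWeights(queries, keys) {
  const d = keys[0].length;
  return queries.map((q) =>
    softmax(keys.map((k) => q.reduce((s, qi, i) => s + qi * k[i], 0) / Math.sqrt(d)))
  );
}

// Three toy "patch" tokens; the values are made up for illustration.
const patches = [[1, 0], [0, 1], [1, 1]];
const attnMap = attentionWeights(patches, patches);
console.log(attnMap); // row i shows how strongly patch i attends to each patch
```

Reshaping one row of such a matrix back onto the image's patch grid is what produces the heatmap-style picture.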

🚀 Faster and more accurate than Whisper

🔒 Privacy-focused (no data leaves your device)

⚡️ WebGPU accelerated (w/ WASM fallback)

🔥 Powered by ONNX Runtime Web and Transformers.js

Demo + source code below! 👇
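
How the demo itself handles the fallback isn't shown in the post. A minimal sketch of the idea, assuming the standard `navigator.gpu.requestAdapter()` check from the WebGPU API: use WebGPU when an adapter is available, otherwise fall back to WASM. `pickDevice` is a made-up helper name; the strings it returns are chosen to match the `device` values Transformers.js accepts.

```javascript
// Resolve to "webgpu" when a GPU adapter is available, otherwise "wasm".
// `nav` defaults to the global navigator so the function also runs outside a browser.
async function pickDevice(nav = globalThis.navigator) {
  if (!nav || !nav.gpu) return "wasm"; // WebGPU API not exposed at all
  try {
    const adapter = await nav.gpu.requestAdapter(); // resolves to null if no suitable GPU
    return adapter ? "webgpu" : "wasm";
  } catch {
    return "wasm"; // treat any failure as "no WebGPU"
  }
}

pickDevice().then((device) => console.log(`Would run on: ${device}`));
```

Checking for an actual adapter matters because some browsers expose `navigator.gpu` yet return `null` on unsupported hardware.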

High-quality and natural speech generation that runs 100% locally in your browser, powered by OuteTTS and Transformers.js. 🤗 Try it out yourself!

Demo + source code below 👇

1. Janus from DeepSeek for unified multimodal understanding and generation (Text-to-Image and Image-Text-to-Text)

Demo (+ source code): hf.co/spaces/webml...

Powered by 🤗 Transformers.js and ONNX Runtime Web!

How many tokens/second do you get? Let me know! 👇
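
If you want to report a comparable number, here is a rough sketch of how to time it yourself. The `onToken` hook and the loop standing in for generation are hypothetical; in a real app you would call the hook from whatever streaming callback your setup provides.

```javascript
// Tokens per second from a token count and elapsed wall-clock milliseconds.
function tokensPerSecond(tokenCount, elapsedMs) {
  if (elapsedMs <= 0) throw new RangeError("elapsedMs must be positive");
  return tokenCount / (elapsedMs / 1000);
}

const startedAt = performance.now();
let generated = 0;
const onToken = () => { generated += 1; }; // hypothetical hook: call once per new token
for (let i = 0; i < 120; i++) onToken();   // stand-in for a real generation loop
const elapsedMs = Math.max(performance.now() - startedAt, 1e-3); // guard against a 0 ms reading
console.log(`${tokensPerSecond(generated, elapsedMs).toFixed(1)} tokens/s`);
```

Start the timer after the model has loaded (and ideally after the first token) so download and warm-up time don't drag the figure down.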

⚡ WebGPU support (up to 100x faster than WASM)

🔢 New quantization formats

🏛 121 supported architectures in total

🤖 Over 1200 pre-converted models

Get started with `npm i @huggingface/transformers`
