MVA/CentraleSupélec alumni

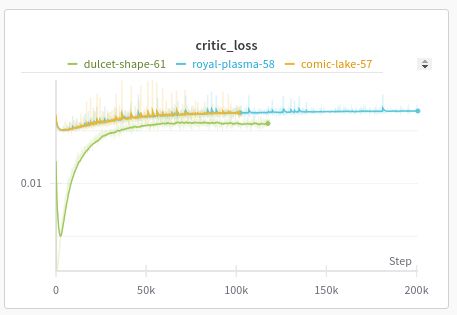

@jessefarebro.bsky.social & i fix this by introducing the Continuous ALE (CALE)!

read thread for details!

1/9

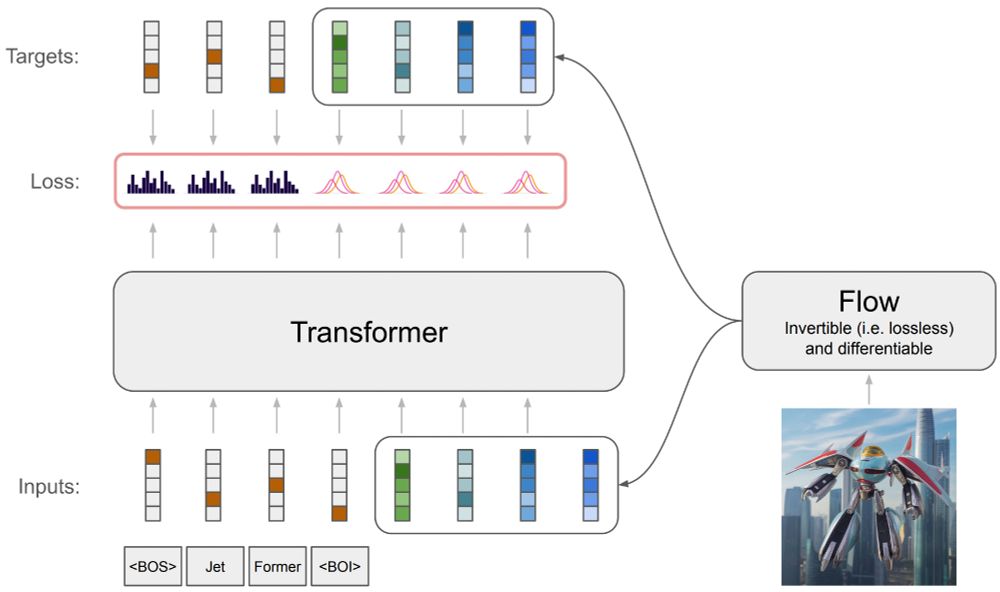

We have been pondering this during summer and developed a new model: JetFormer 🌊🤖

arxiv.org/abs/2411.19722

A thread 👇

1/

+ I fully agree with the conclusion: RL needs to become an *empirical science*, not a benchmark-maximizing rat race where methods get exponentially more complex to squeeze out a few more reward points

Finally in blog form, have a read!

psc-g.github.io/posts/resear...

Which doesn't mean that narrative isn't tremendously important.

There was a mistake, a quick follow-up to mitigate it, and an apology. I worked with Daniel for years, and he is one of the people most concerned with the ethical implications of AI. Some replies are at Reddit-toxic level. We need empathy.

📊 1M public posts from Bluesky's firehose API

🔍 Includes text, metadata, and language predictions

🔬 Perfect to experiment with using ML for Bluesky 🤗

huggingface.co/datasets/blu...

works for general robot policies too

I'm still in shock at the performance/price ratio. It stays cool and dead silent as well. ARM arch ftw

Also, is anyone using MLX a lot? Curious about its current state

Ultra high quality vibe/consensus check on many science fields, centralized.

arxiv.org/abs/2410.14606

arxiv.org/abs/2312.00598

1. Reward-less offline RL

2. Emergent vision from interaction (experience shapes perception, going beyond offline supervised/SSL)

1. High-dim planning

2. What are the minimal requirements for two agents to recognize each other as acting on behalf of reasons.

1. I came to hate my work and thinking, so I don't do it anymore.

2.

go.bsky.app/3WPHcHg