tim.darcet.fr

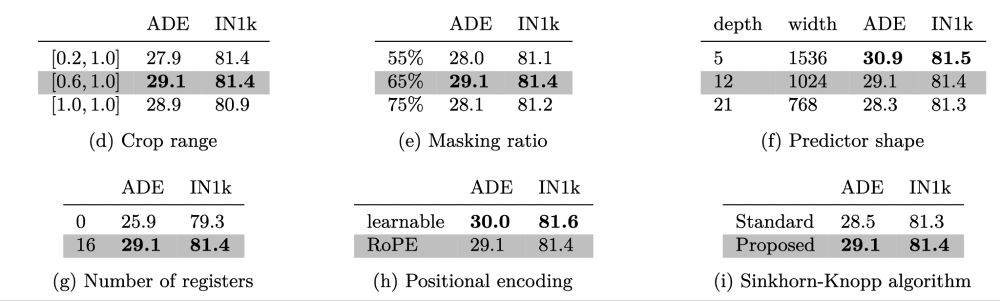

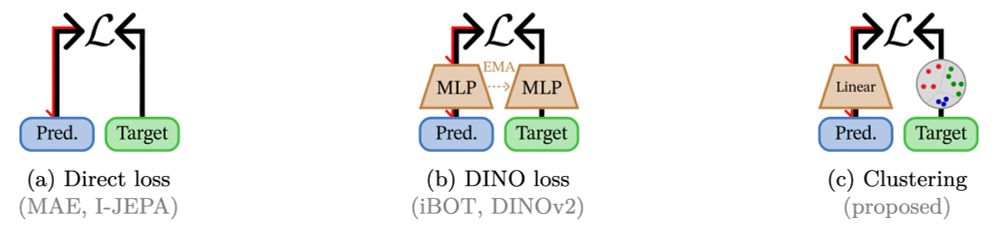

To prevent empty clusters, we use Sinkhorn-Knopp, as in SwAV.
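
A minimal Sinkhorn-Knopp sketch in PyTorch, modeled on the SwAV formulation. Scores are turned into soft cluster assignments, then rows and columns are alternately rescaled so that every cluster receives roughly the same share of the batch. The function name, `eps=0.05` and `n_iters=3` are our assumptions (SwAV-like defaults), not values taken from the CAPI code.

```python
import torch


@torch.no_grad()
def sinkhorn_knopp(scores: torch.Tensor, eps: float = 0.05, n_iters: int = 3) -> torch.Tensor:
    """SwAV-style Sinkhorn-Knopp (illustrative sketch).

    scores: (B, K) similarities between B samples and K cluster prototypes,
    assumed to be cosine similarities in [-1, 1] (large gaps / eps can underflow).
    Returns (B, K) soft assignments: each row sums to 1 and each cluster
    receives roughly B / K of the total mass, so no cluster is left empty.
    """
    # Subtracting the global max only rescales q, which the normalizations undo.
    q = torch.exp((scores - scores.max()) / eps).T  # (K, B)
    q /= q.sum()
    n_clusters, batch = q.shape
    for _ in range(n_iters):
        q /= q.sum(dim=1, keepdim=True)  # every cluster gets total mass ...
        q /= n_clusters                  # ... 1 / K
        q /= q.sum(dim=0, keepdim=True)  # every sample gets total mass ...
        q /= batch                       # ... 1 / B
    return (q * batch).T  # rows (samples) now sum to 1


torch.manual_seed(0)
scores = 0.02 * torch.randn(8, 4)
scores[:, 0] += 0.5  # every sample prefers cluster 0
print(scores.argmax(dim=1))               # all zeros: clusters 1-3 would stay empty
print(sinkhorn_knopp(scores).sum(dim=0))  # roughly 2.0 (= B / K) per cluster
```

With a plain argmax (or softmax) every sample lands in cluster 0; the alternating normalization spreads the mass over all clusters while still keeping each sample's assignment a distribution.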

The idea is to cluster the whole distribution of model outputs (not a single sample or batch) with an online process that is slightly updated after each iteration (like mini-batch k-means).
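
One simple way to picture an online clustering that is only nudged at each iteration is a mini-batch k-means-style update on the unit sphere. This is our illustration, not the CAPI update rule: the class name, the step size `lr` and the hard argmax assignment are assumptions, and the thread's version additionally balances the assignments with Sinkhorn-Knopp to avoid empty clusters.

```python
import torch
import torch.nn.functional as F


class OnlineKMeans:
    """Mini-batch k-means over a stream of features (hypothetical sketch).

    The centroids summarize everything seen so far: each call to `update`
    only moves them a small step (`lr`) toward the current batch."""

    def __init__(self, n_clusters: int, dim: int, lr: float = 0.1, seed: int = 0):
        g = torch.Generator().manual_seed(seed)
        self.centroids = F.normalize(torch.randn(n_clusters, dim, generator=g), dim=1)
        self.lr = lr

    @torch.no_grad()
    def assign(self, feats: torch.Tensor) -> torch.Tensor:
        # Hard assignment by cosine similarity to each centroid.
        sims = F.normalize(feats, dim=1) @ self.centroids.T
        return sims.argmax(dim=1)

    @torch.no_grad()
    def update(self, feats: torch.Tensor) -> torch.Tensor:
        feats = F.normalize(feats, dim=1)
        labels = self.assign(feats)
        for k in labels.unique():
            batch_mean = feats[labels == k].mean(dim=0)
            # Small step toward this batch's mean, then back onto the unit sphere.
            self.centroids[k] = F.normalize(
                (1 - self.lr) * self.centroids[k] + self.lr * batch_mean, dim=0
            )
        return labels


km = OnlineKMeans(n_clusters=4, dim=2)
batch = torch.tensor([[3.0, 0.0]]).repeat(16, 1)  # a toy "distribution" of outputs
for _ in range(300):
    labels = km.update(batch)
# The winning centroid drifts onto the data direction [1, 0]; the other three
# are never assigned anything, i.e. exactly the empty-cluster problem.
```

Nothing in this plain update prevents a centroid from never being chosen, which is why a balancing step such as Sinkhorn-Knopp is needed on top.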

With this third paper I’m wrapping up my PhD. An amazing journey, thanks to the excellent advisors and colleagues!

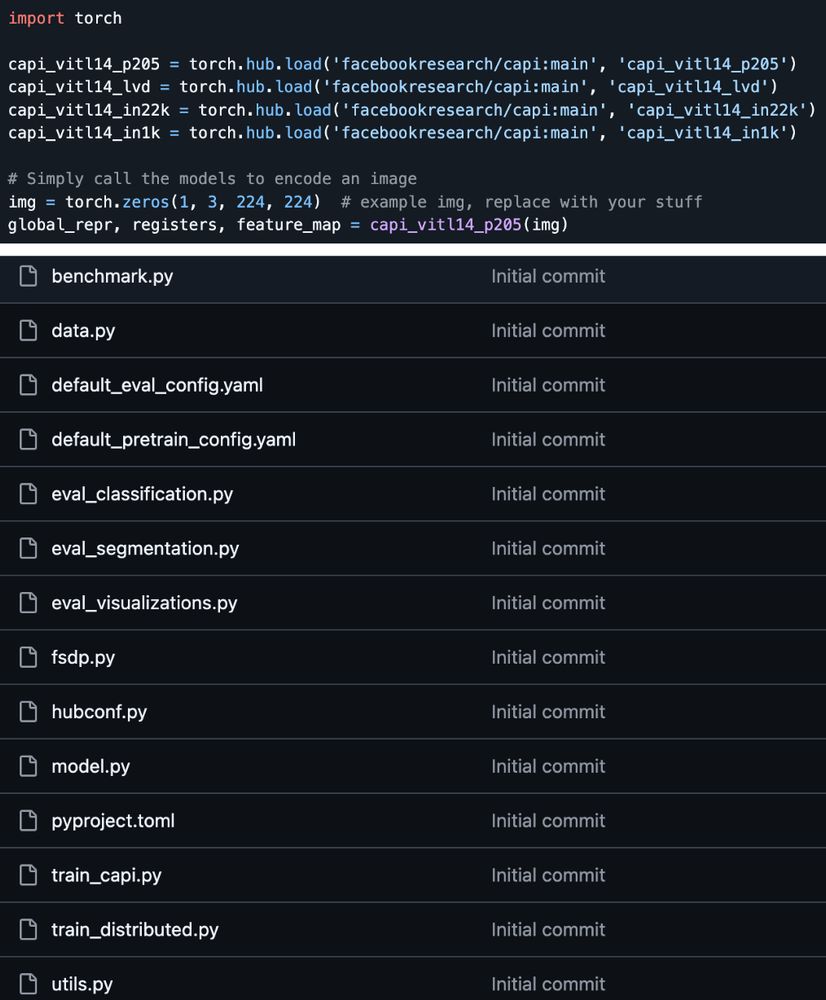

If you have torch, you can load the models in a single line, anywhere.

The repo is a flat folder of about 10 files, so it should be pretty readable.

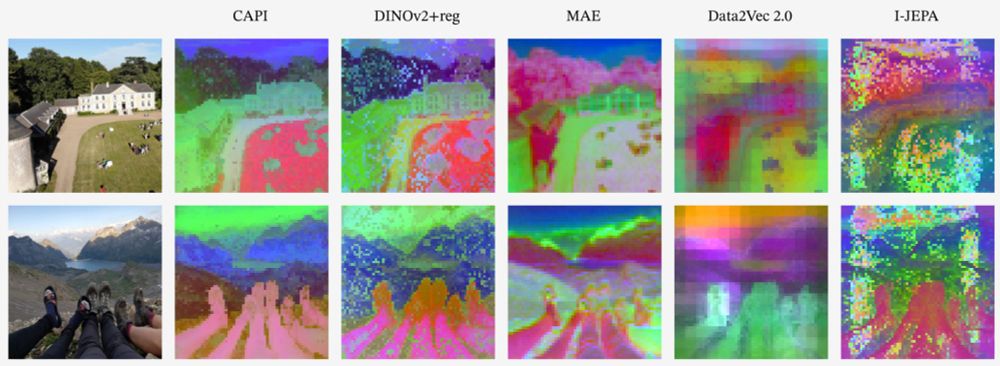

DINOv2+reg still has artifacts (despite my best efforts), while the MAE features are mostly color, not semantics (see the shadow in the first image, or the rightmost legs in the second).

CAPI has both semantic and smooth feature maps

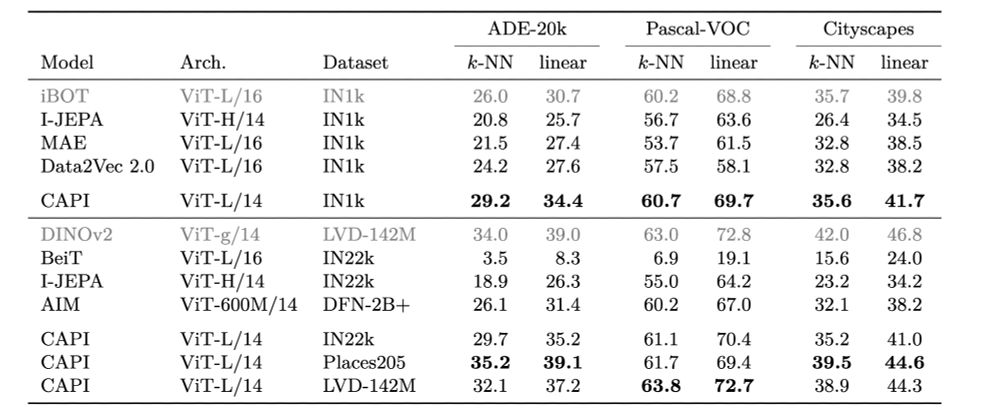

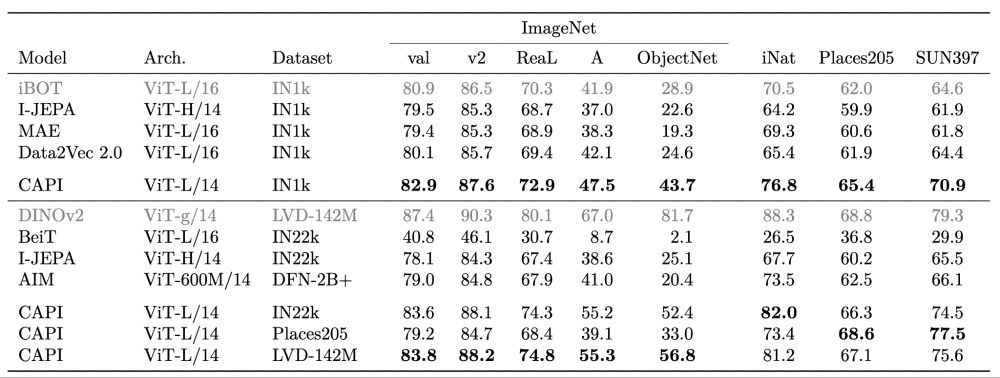

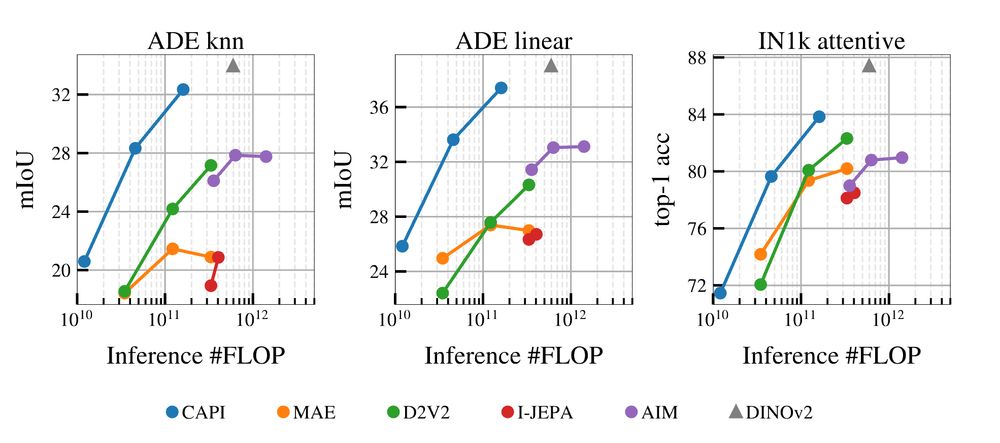

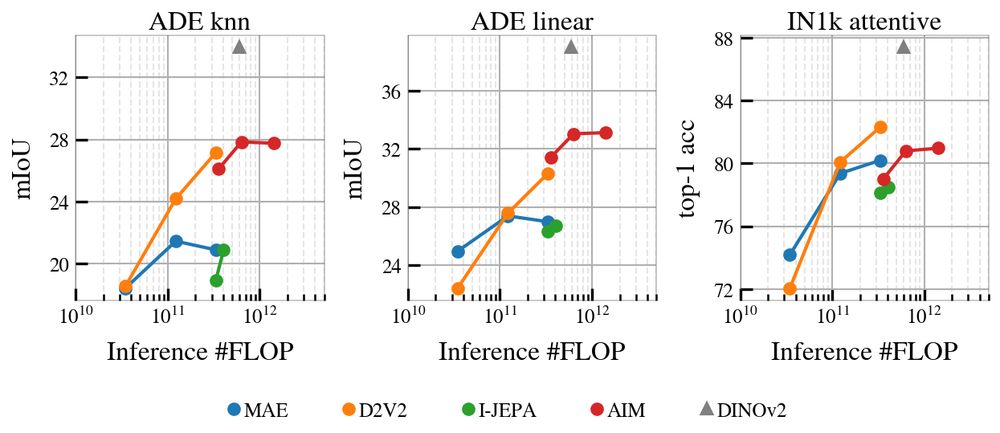

Compared to baselines, again, it’s quite good, e.g. +8 points over MAE trained on the same dataset.

In both cases, we significantly improve over previous models.

Training on Places205 is better for scene benchmarks (P205, SUN) but worse for object-centric ones.

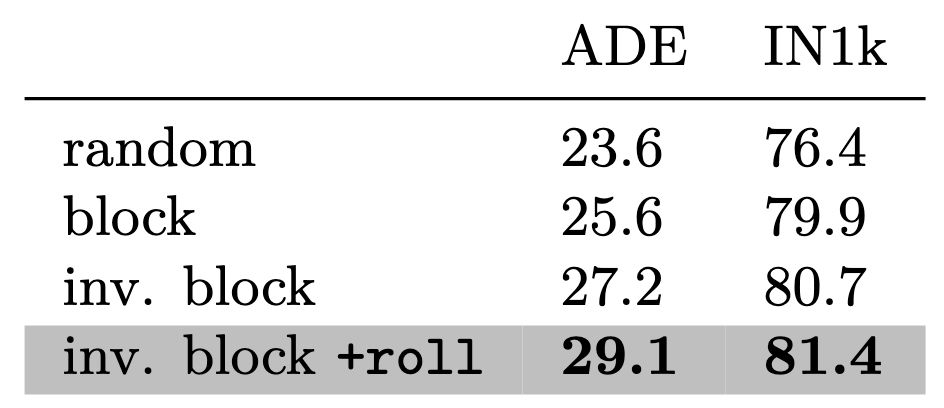

Masking strategies: “inverse block” > “block” > “random”

*But* with inverse block masking, you oversample the center of the image as the region to be masked out.

→ We propose a random circular shift of the mask (torch.roll). It prevents that and gives us a good boost.
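
A small experiment in PyTorch showing why a random `torch.roll` removes positional bias: any block sampler confined to the image bounds covers some positions more often than others, while a uniformly random circular shift makes every position equally likely to be masked without changing how many patches are masked. The sampler below is hypothetical (one visible `keep x keep` block, everything else masked, our reading of "inverse block"); the paper's sampler may size or place blocks differently, which changes which region is favored but not the effect of the roll.

```python
import torch


def inverse_block_mask(grid: int, keep: int, gen: torch.Generator) -> torch.Tensor:
    """Hypothetical sampler. Boolean (grid, grid) mask, True = masked:
    one keep x keep block of visible patches inside the bounds, rest masked."""
    top, left = torch.randint(0, grid - keep + 1, (2,), generator=gen).tolist()
    mask = torch.ones(grid, grid, dtype=torch.bool)
    mask[top:top + keep, left:left + keep] = False
    return mask


def roll_mask(mask: torch.Tensor, gen: torch.Generator) -> torch.Tensor:
    """Random circular shift: same mask shape, uniformly random offset (wraps around)."""
    h, w = mask.shape
    dy = int(torch.randint(0, h, (1,), generator=gen))
    dx = int(torch.randint(0, w, (1,), generator=gen))
    return torch.roll(mask, shifts=(dy, dx), dims=(0, 1))


def masking_frequency(grid=16, keep=6, n=5000, roll=False, seed=0):
    """Fraction of sampled masks in which each patch position is masked."""
    gen = torch.Generator().manual_seed(seed)
    counts = torch.zeros(grid, grid)
    for _ in range(n):
        mask = inverse_block_mask(grid, keep, gen)
        if roll:
            mask = roll_mask(mask, gen)
        counts += mask.float()
    return counts / n


plain, rolled = masking_frequency(), masking_frequency(roll=True)
print(plain.std().item(), rolled.std().item())  # spread of per-position masking rates
```

Both variants mask exactly the same number of patches per sample; only the rolled one has (up to sampling noise) the same masking rate at every position.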

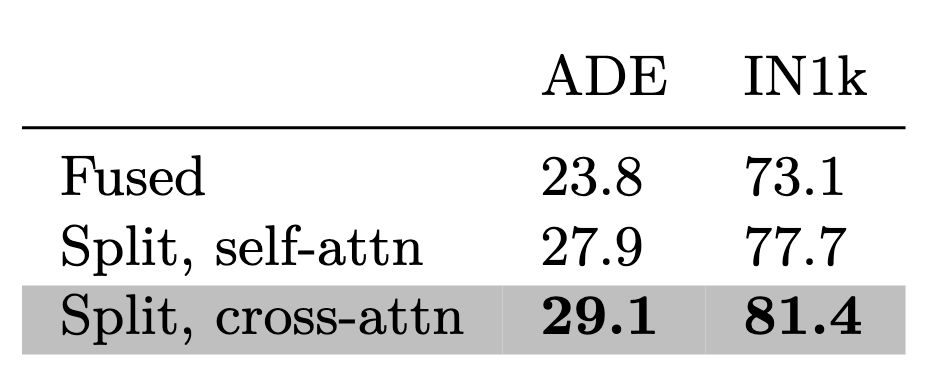

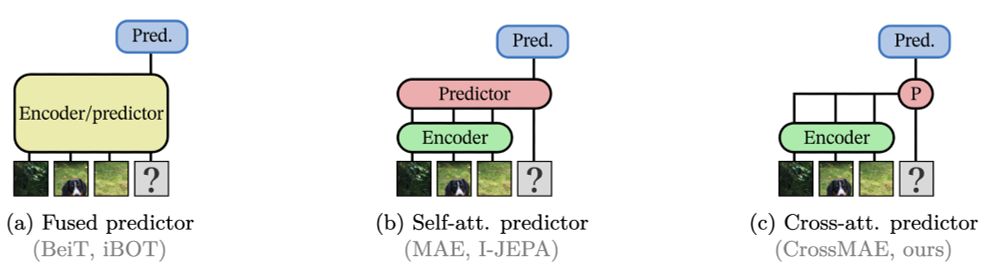

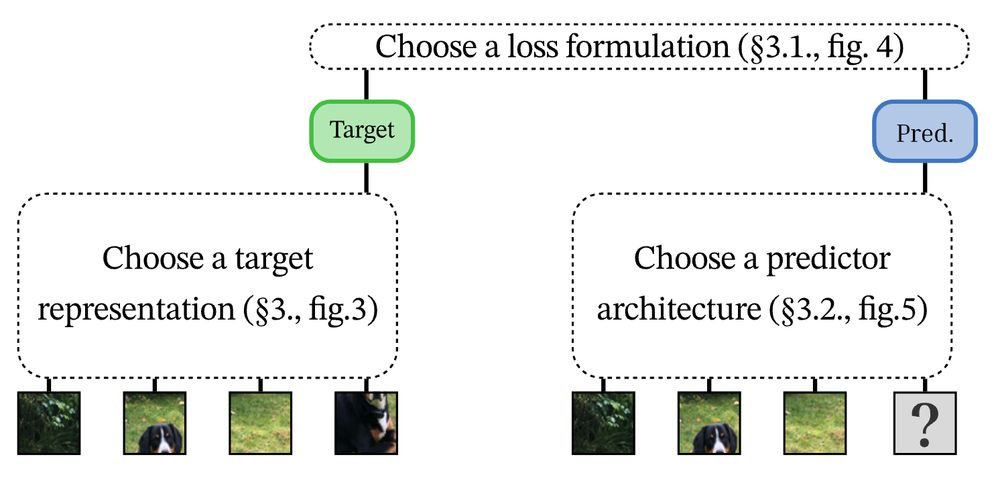

- fused (a): a single transformer with all tokens
- split (b): encoder without [MSK] tokens, predictor with all tokens
- cross (c): no patch tokens in the predictor, which cross-attends to them instead

Patch tokens are the encoder’s problem, [MSK] are the predictor’s problem. Tidy. Good.

So we use exactly that: the cross-attention predictor (c).
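
A sketch of the cross layout (c) in PyTorch: the predictor's only queries are [MSK] tokens (a shared learned vector plus a position embedding), and the encoder's patch tokens enter only as keys and values. Class names, layer sizes, pre-norm placement and the absence of self-attention between [MSK] tokens are our assumptions, not the CAPI architecture.

```python
import torch
import torch.nn as nn


class CrossBlock(nn.Module):
    """Pre-norm block: [MSK] queries cross-attend to patch tokens, then an MLP."""

    def __init__(self, dim: int, n_heads: int):
        super().__init__()
        self.norm_q = nn.LayerNorm(dim)
        self.norm_kv = nn.LayerNorm(dim)
        self.attn = nn.MultiheadAttention(dim, n_heads, batch_first=True)
        self.norm_mlp = nn.LayerNorm(dim)
        self.mlp = nn.Sequential(nn.Linear(dim, 4 * dim), nn.GELU(), nn.Linear(4 * dim, dim))

    def forward(self, queries: torch.Tensor, context: torch.Tensor) -> torch.Tensor:
        kv = self.norm_kv(context)
        attn_out, _ = self.attn(self.norm_q(queries), kv, kv, need_weights=False)
        queries = queries + attn_out
        return queries + self.mlp(self.norm_mlp(queries))


class CrossAttentionPredictor(nn.Module):
    """Hypothetical predictor: patch tokens are the encoder's problem,
    [MSK] tokens are the predictor's problem."""

    def __init__(self, dim: int, n_heads: int, n_positions: int, depth: int = 2):
        super().__init__()
        self.mask_token = nn.Parameter(torch.zeros(dim))
        self.pos_embed = nn.Parameter(0.02 * torch.randn(n_positions, dim))
        self.blocks = nn.ModuleList([CrossBlock(dim, n_heads) for _ in range(depth)])

    def forward(self, patch_tokens: torch.Tensor, masked_idx: torch.Tensor) -> torch.Tensor:
        # patch_tokens: (B, N_visible, D) from the encoder, no [MSK] inside.
        # masked_idx:   (B, N_masked) positions of the patches to predict.
        queries = self.mask_token + self.pos_embed[masked_idx]  # (B, N_masked, D)
        for block in self.blocks:
            queries = block(queries, patch_tokens)
        return queries


torch.manual_seed(0)
predictor = CrossAttentionPredictor(dim=32, n_heads=4, n_positions=16)
patch_tokens = torch.randn(2, 10, 32)  # encoder output, visible patches only
masked_idx = torch.stack([torch.randperm(16)[:6] for _ in range(2)])
preds = predictor(patch_tokens, masked_idx)  # (2, 6, 32): one vector per [MSK]
```

Since each [MSK] query only reads from the patch tokens, the attention cost scales with (#masked × #visible) instead of (#all tokens)², and the patch tokens are never rewritten by the predictor.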

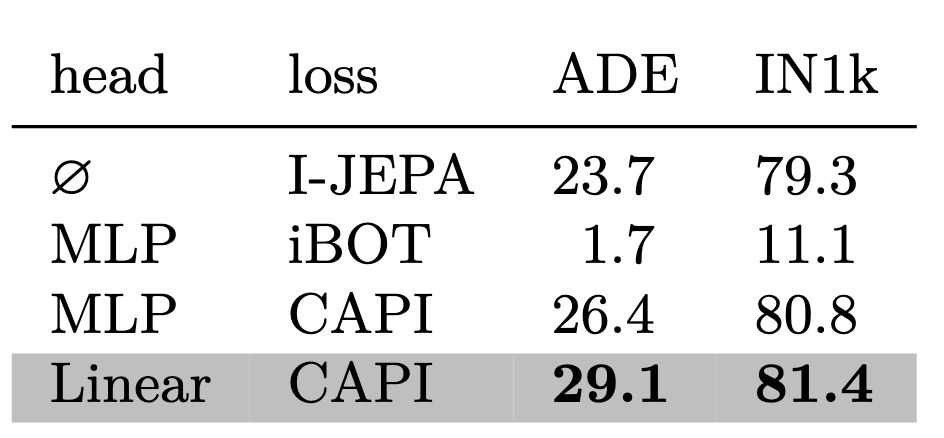

“DINO head”: good results, too unstable

Idea: predictions and targets have different distributions, so an EMA head does not work on the targets → we need to separate the two heads.

So we just use clustering on the target side instead, and it works.
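
A hedged sketch of what "separate the two heads" can look like as a loss: the target side assigns the teacher's tokens to cluster centroids (no gradient), while the prediction side is its own learned linear head trained with cross-entropy against those assignments. The function name, the temperature, and the plain softmax used here in place of a balanced (e.g. Sinkhorn-Knopp) assignment are our assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def cluster_prediction_loss(pred_tokens, target_tokens, centroids, pred_head, temp=0.1):
    """Hypothetical sketch: cross-entropy between the predictor's cluster
    logits and soft cluster assignments of the teacher's target tokens."""
    with torch.no_grad():
        # Target side: soft assignment of teacher tokens to the clusters
        # (the balancing step, e.g. Sinkhorn-Knopp, is omitted here).
        sims = F.normalize(target_tokens, dim=-1) @ F.normalize(centroids, dim=-1).T
        targets = (sims / temp).softmax(dim=-1)  # (B, N, K)
    # Prediction side: a separate, learned head, not an EMA copy of the target side.
    log_probs = pred_head(pred_tokens).log_softmax(dim=-1)  # (B, N, K)
    return -(targets * log_probs).sum(dim=-1).mean()


torch.manual_seed(0)
head = nn.Linear(32, 8)  # K = 8 clusters
centroids = torch.randn(8, 32, requires_grad=True)
loss = cluster_prediction_loss(torch.randn(4, 6, 32), torch.randn(4, 6, 32), centroids, head)
loss.backward()  # gradients reach the prediction head only
```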

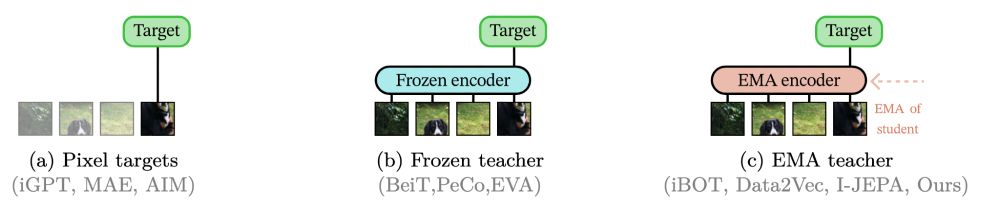

MAE uses raw pixels as targets, BEiT a VQ-VAE. It works, it’s stable. But not good enough.

Instead, we use the model we are currently training to produce the targets. Promising (iBOT, data2vec 2.0), but super unstable. We do it not because it is easy but because it is hard, etc.
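
In iBOT and data2vec 2.0, "the model we are currently training" produces targets through an exponential moving average (EMA) of its own weights; the sketch below assumes the same setup. The `ema_update` helper, the momentum value and the toy loss are illustrative assumptions, not CAPI's training code.

```python
import copy

import torch
import torch.nn as nn


@torch.no_grad()
def ema_update(teacher: nn.Module, student: nn.Module, momentum: float = 0.999) -> None:
    """In place: teacher <- momentum * teacher + (1 - momentum) * student."""
    for p_t, p_s in zip(teacher.parameters(), student.parameters()):
        p_t.mul_(momentum).add_(p_s, alpha=1 - momentum)


student = nn.Linear(16, 16)
teacher = copy.deepcopy(student).requires_grad_(False)  # produces the targets
opt = torch.optim.SGD(student.parameters(), lr=0.1)

for _ in range(3):
    x = torch.randn(4, 16)
    with torch.no_grad():
        target = teacher(x)  # targets never backpropagate
    # Toy loss; in MIM the student would only see the visible patches.
    loss = (student(x) - target).pow(2).mean()
    opt.zero_grad()
    loss.backward()
    opt.step()
    ema_update(teacher, student)  # the target model slowly follows the student
```

The instability mentioned above comes from this loop: the targets move with the model, so nothing stops both sides from drifting toward a trivial solution.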

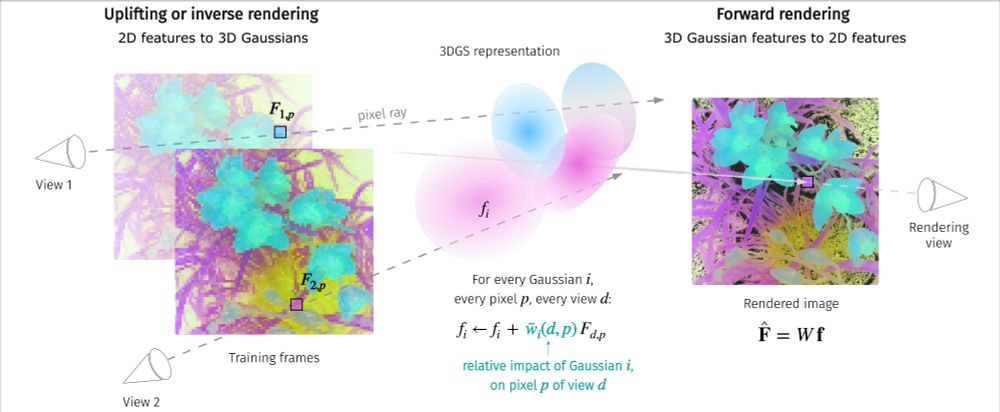

1. take an image, convert its patches to representations.

2. given part of this image, train a model to predict the content of the missing parts

3. measure a loss between pred and target
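
The three steps above as one toy PyTorch training step. Everything here is a stand-in chosen for illustration: the linear "models", the `ToyPredictor` class, the random masking, and the MSE loss are assumptions (CAPI uses its own encoder, predictor and a clustering-based loss).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def patchify(images: torch.Tensor, patch: int) -> torch.Tensor:
    """(B, C, H, W) -> (B, N, C * patch * patch) non-overlapping patches."""
    return F.unfold(images, kernel_size=patch, stride=patch).transpose(1, 2)


class ToyPredictor(nn.Module):
    """Hypothetical: pooled context plus the masked position's embedding."""

    def __init__(self, dim: int, n_positions: int):
        super().__init__()
        self.pos = nn.Embedding(n_positions, dim)
        self.out = nn.Linear(dim, dim)

    def forward(self, context: torch.Tensor, masked_idx: torch.Tensor) -> torch.Tensor:
        pooled = context.mean(dim=1, keepdim=True)      # (B, 1, D)
        return self.out(pooled + self.pos(masked_idx))  # (B, M, D)


def mim_step(images, target_model, encoder, predictor, mask_ratio=0.75, patch=4):
    patches = patchify(images, patch)  # (B, N, P)
    b, n, p = patches.shape
    # 1. Take the image and convert its patches to representations (targets).
    with torch.no_grad():
        targets = target_model(patches)  # (B, N, D)
    # 2. Given part of the image, predict the content of the missing parts.
    n_masked = int(mask_ratio * n)
    perm = torch.rand(b, n).argsort(dim=1)
    masked_idx, visible_idx = perm[:, :n_masked], perm[:, n_masked:]
    visible = torch.gather(patches, 1, visible_idx.unsqueeze(-1).expand(-1, -1, p))
    preds = predictor(encoder(visible), masked_idx)  # (B, M, D)
    # 3. Measure a loss between predictions and targets at the masked positions.
    d = targets.shape[-1]
    tgt = torch.gather(targets, 1, masked_idx.unsqueeze(-1).expand(-1, -1, d))
    return F.mse_loss(preds, tgt)


torch.manual_seed(0)
P, D, N = 3 * 4 * 4, 32, 64  # 32x32 RGB images, 4x4 patches -> 64 patches
encoder, target_model = nn.Linear(P, D), nn.Linear(P, D)
predictor = ToyPredictor(D, N)
loss = mim_step(torch.randn(8, 3, 32, 32), target_model, encoder, predictor)
loss.backward()
```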

The issue? It’s hard. BEiT/MAE are not great for representations. iBOT works well, but is too unstable to train without DINO.

→ Pure MIM lags behind DINOv2
