Until then, here are some system designs:

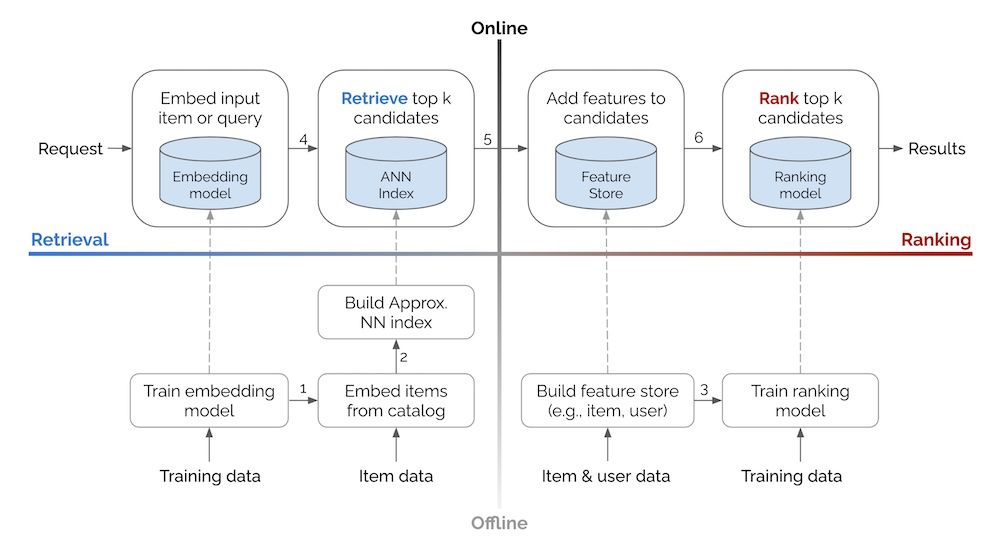

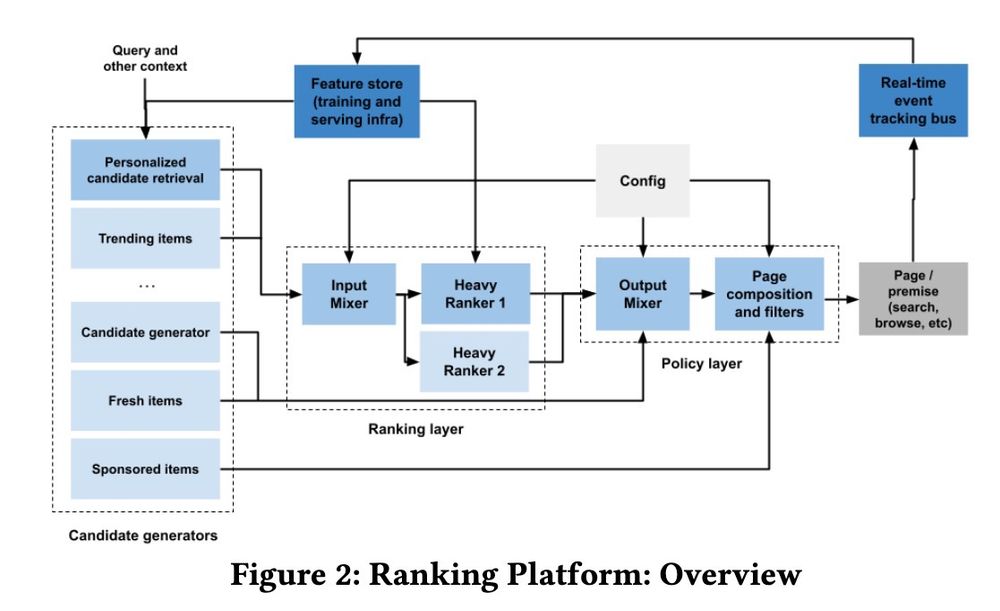

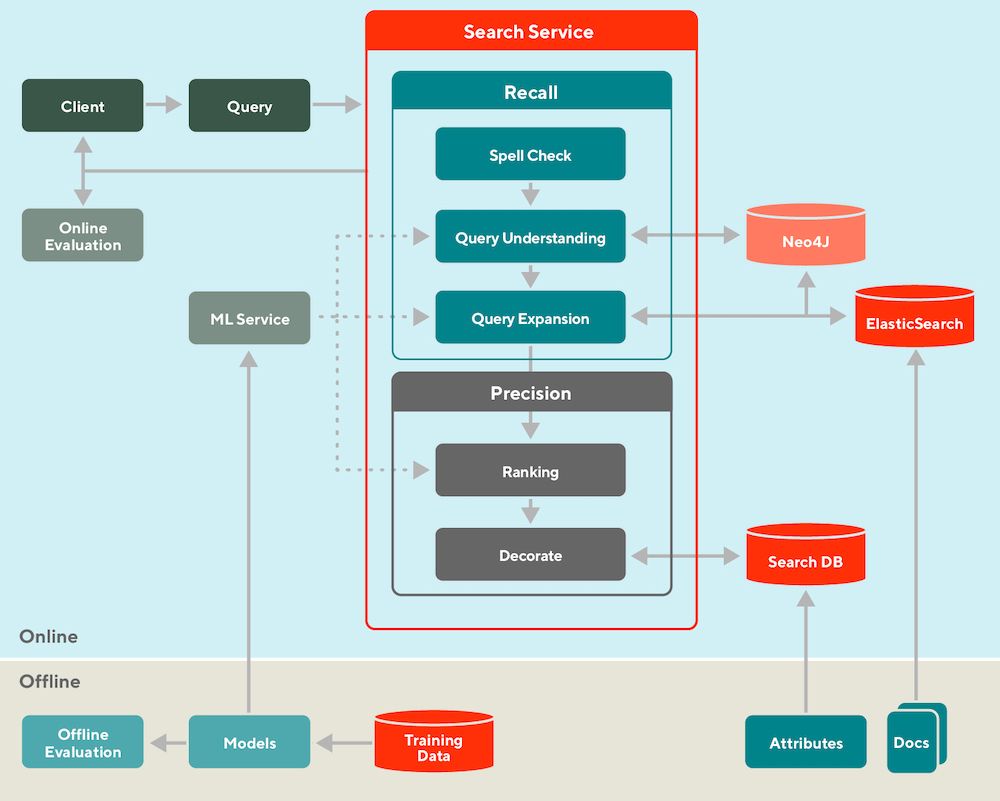

• Retrieval vs. Ranking: eugeneyan.com/writing/syst...

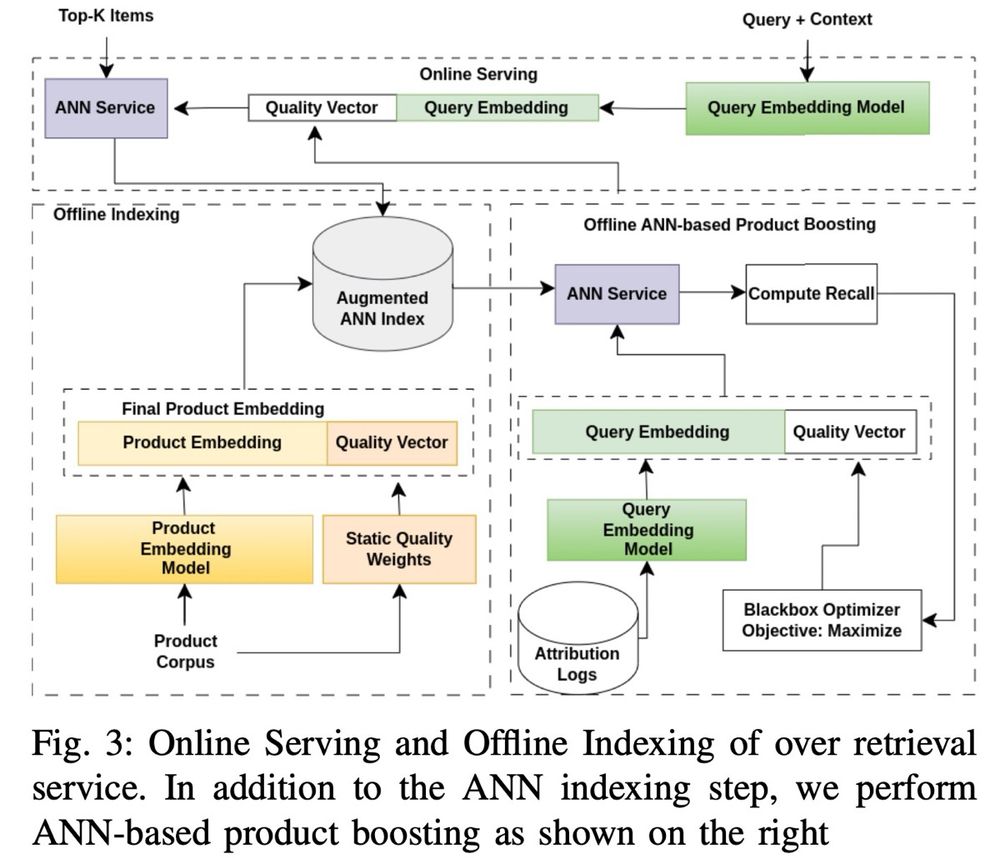

• Real-time retrieval: eugeneyan.com/writing/real...

• Personalization: eugeneyan.com/writing/patt...

Language-specific Neurons Do Not Facilitate Cross-Lingual Transfer

https://arxiv.org/abs/2503.17456

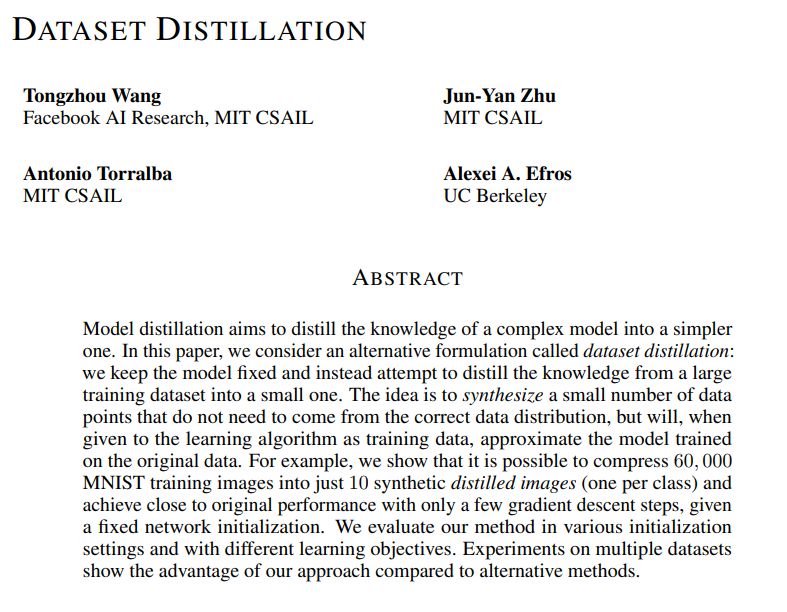

They show that it is possible to compress the 60,000 MNIST training images into just 10 synthetic distilled images (one per class) and still reach performance close to that of training on the original data, using only a few gradient descent steps, given a fixed network initialization.
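
The core idea (learn the synthetic data so that a few gradient steps on it, from a fixed initialization, produce a good model) can be sketched on a toy problem. This is a minimal sketch under stated assumptions, not the authors' code: a linear regression model stands in for the network, random Gaussian data stands in for MNIST, the inner learning rate `eta` is fixed rather than learned, and all names (`inner_step`, `real_loss`, `distill_grads`) are hypothetical. It distills the data into a single synthetic example and backpropagates through one inner gradient step using hand-derived gradients.

```python
import numpy as np

# Toy dataset distillation (hypothetical setup, not the paper's code):
# a linear model is "trained" by ONE gradient step on m synthetic examples,
# always starting from the same fixed initialization w0. We then learn the
# synthetic inputs S and targets t so that this one-step model fits the real data.

rng = np.random.default_rng(0)
n, d, m = 500, 5, 1   # real examples, features, synthetic examples
eta = 0.1             # inner learning rate (fixed here; an assumption)

# Stand-in "real" dataset: y = X @ w_true + noise
X = rng.normal(size=(n, d))
w_true = rng.normal(size=d)
y = X @ w_true + 0.1 * rng.normal(size=n)

w0 = rng.normal(size=d)  # fixed initialization, reused for every inner step


def inner_step(S, t):
    """One GD step from w0 on the synthetic loss 0.5/m * ||S w - t||^2."""
    r = S @ w0 - t
    return w0 - (eta / m) * S.T @ r


def real_loss(w):
    """Half mean squared error on the real data."""
    e = X @ w - y
    return 0.5 * (e @ e) / n


def distill_grads(S, t):
    """Hand-derived gradients of real_loss(inner_step(S, t)) w.r.t. S and t."""
    r = S @ w0 - t
    w1 = w0 - (eta / m) * S.T @ r
    g = X.T @ (X @ w1 - y) / n          # dL/dw1 on the real data
    Sg = S @ g
    dS = -(eta / m) * (np.outer(r, g) + np.outer(Sg, w0))
    dt = (eta / m) * Sg
    return dS, dt


# Outer loop: plain gradient descent on the synthetic data itself.
S = rng.normal(size=(m, d))
t = rng.normal(size=m)
loss_before = real_loss(inner_step(S, t))
for _ in range(4000):
    dS, dt = distill_grads(S, t)
    S -= 0.05 * dS
    t -= 0.05 * dt
loss_after = real_loss(inner_step(S, t))

w_ls = np.linalg.lstsq(X, y, rcond=None)[0]  # full-data least-squares reference
print(f"before: {loss_before:.4f}, after: {loss_after:.4f}, "
      f"least squares: {real_loss(w_ls):.4f}")
```

In this toy, the single inner step moves the weights by `-(eta / m) * S.T @ (S @ w0 - t)`, which depends on `w0`; the distilled point only encodes the displacement from that particular initialization, so a different `w0` would generally need different distilled data.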

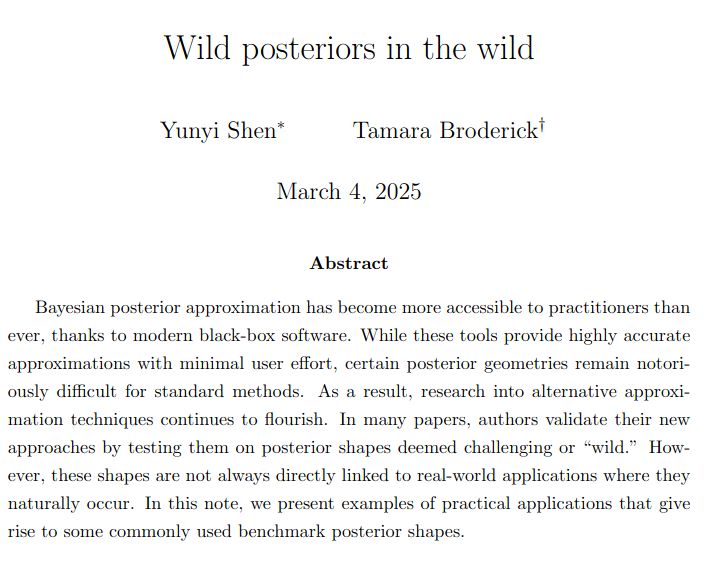

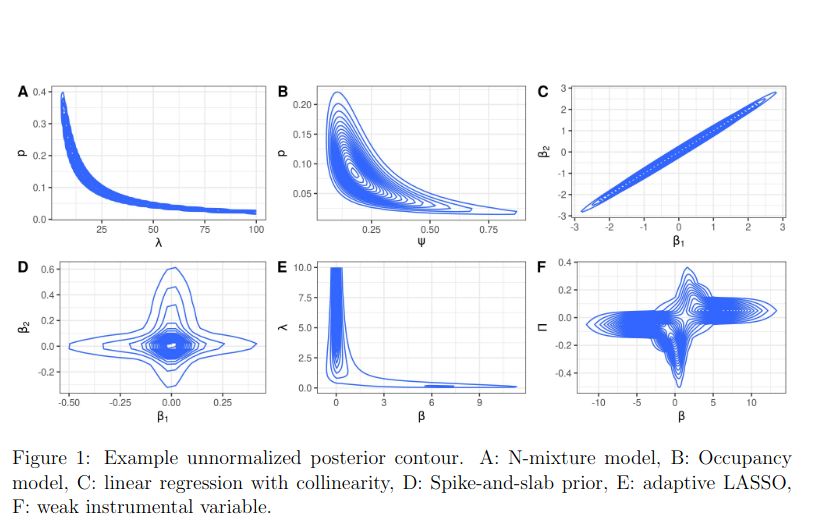

A cool-looking paper if you're interested in funky posterior geometry

Link: arxiv.org/abs/2503.00239

Code: github.com/YunyiShen/we...

#stats

This is a little tool I made to experiment with automatically generating murder mysteries~

ChallengeMe: An Adversarial Learning-enabled Text Summarization Framework

https://arxiv.org/abs/2502.05084

CoCoA: A Generalized Approach to Uncertainty Quantification by Integrating Confidence and Consistency of LLM Outputs

https://arxiv.org/abs/2502.04964

Commonality and Individuality! Integrating Humor Commonality with Speaker Individuality for Humor Recognition

https://arxiv.org/abs/2502.04960

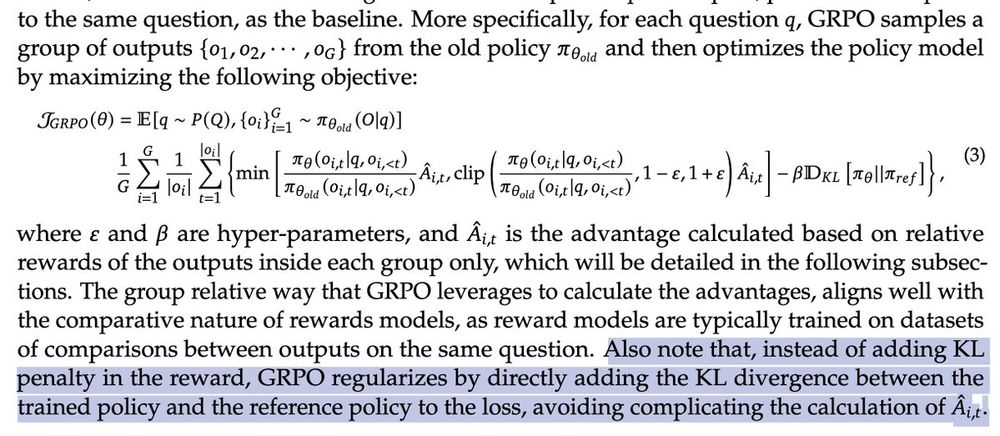

It's a little hard to reason about what this does to the objective. 1/

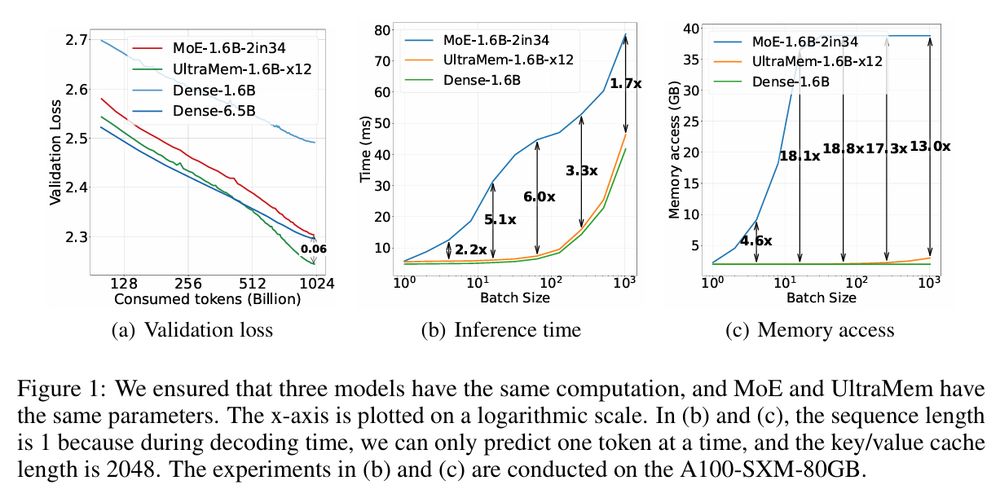

A new ultra-sparse model that

- exhibits favorable scaling properties and outperforms MoE

- runs inference 1.7x to 6.0x faster than MoE

Paper: Ultra-Sparse Memory Network (arxiv.org/abs/2411.12364)

Developmentally-plausible Working Memory Shapes a Critical Period for Language Acquisition

https://arxiv.org/abs/2502.04795

Self-Rationalization in the Wild: A Large Scale Out-of-Distribution Evaluation on NLI-related tasks

https://arxiv.org/abs/2502.04797

Enhancing Disinformation Detection with Explainable AI and Named Entity Replacement

https://arxiv.org/abs/2502.04863

Claim Extraction for Fact-Checking: Data, Models, and Automated Metrics

https://arxiv.org/abs/2502.04955

The pipeline that creates the data mix:

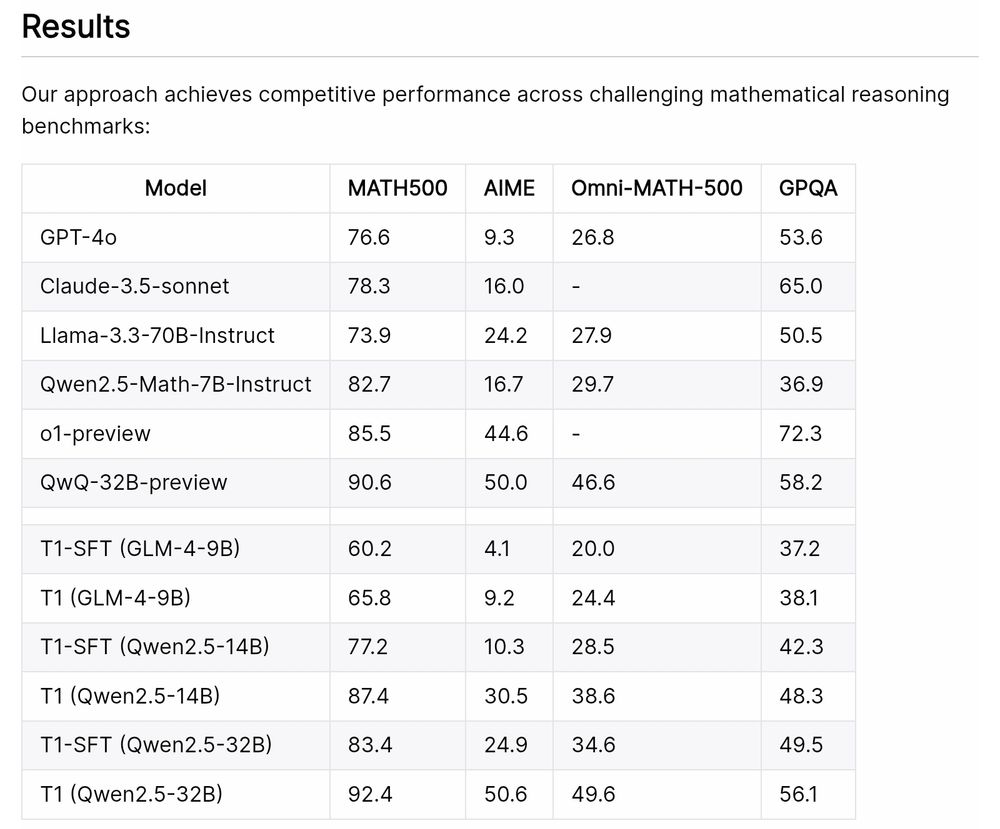

Advancing Language Model Reasoning through Reinforcement Learning and Inference Scaling

T1 scales RL by encouraging exploration, and the paper uses it to study inference scaling.

Towards Better Understanding Table Instruction Tuning: Decoupling the Effects from Data versus Models

https://arxiv.org/abs/2501.14717

Investigating the (De)Composition Capabilities of Large Language Models in Natural-to-Formal Language Conversion

https://arxiv.org/abs/2501.14649

WanJuanSiLu: A High-Quality Open-Source Webtext Dataset for Low-Resource Languages

https://arxiv.org/abs/2501.14506

Evaluating and Improving Graph to Text Generation with Large Language Models

https://arxiv.org/abs/2501.14497

Analyzing the Effect of Linguistic Similarity on Cross-Lingual Transfer: Tasks and Experimental Setups Matter

https://arxiv.org/abs/2501.14491

Understanding and Mitigating Gender Bias in LLMs via Interpretable Neuron Editing

https://arxiv.org/abs/2501.14457

Domaino1s: Guiding LLM Reasoning for Explainable Answers in High-Stakes Domains

https://arxiv.org/abs/2501.14431

DRESSing Up LLM: Efficient Stylized Question-Answering via Style Subspace Editing

https://arxiv.org/abs/2501.14371