We tested every major parser on real enterprise documents.

The results will change how you think about OCR accuracy 🧵

In the free Qdrant Essentials Course, learn how to:

- Architect vector-powered data lakes

- Optimize ETL pipelines

- Create knowledge graphs

- Integrate @langchain.bsky.social agents for natural language queries

t.co/OoPZswrL7z

That means:

❌ Lost audit trails

❌ Manual review of revision history

❌ No programmatic access to reviewer comments

❌ Workflows that can't route based on specific edits

Section 2.2 becomes a top-level header (##) instead of nested (###).

We just shipped automatic header correction.

🧵 How it works:
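
As a rough illustration, and not Tensorlake's actual implementation, header correction can be approached by deriving each heading's level from its section number: "2.2" has one more component than "2", so it belongs one level deeper. The sketch below assumes dotted numbering and that top-level sections map to `##`; `correct_header_levels` and `base_level` are hypothetical names.

```python
import re

# Matches a Markdown heading whose text starts with dotted section numbering,
# e.g. "## 2.2 Results" -> hashes="##", number="2.2", title="Results".
HEADING = re.compile(r"^(#{1,6})\s+(\d+(?:\.\d+)*)\.?\s+(.*)$")


def correct_header_levels(markdown: str, base_level: int = 2) -> str:
    """Rewrite numbered headings so their depth matches their numbering.

    Assumption: top-level sections ("2") use `base_level` hashes and each
    extra numbering component ("2.2", "2.2.1") adds one level, capped at 6.
    A trailing dot after the number is dropped; unnumbered headings and
    body text are left untouched.
    """
    fixed = []
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            _, number, title = match.groups()
            depth = number.count(".")  # "2" -> 0, "2.2" -> 1
            level = min(base_level + depth, 6)
            line = f"{'#' * level} {number} {title}"
        fixed.append(line)
    return "\n".join(fixed)


# Section 2.2 was extracted as "##"; it is pushed down to "###".
print(correct_header_levels("## 2 Methods\n## 2.2 Data collection\nSome text."))
```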

Once your chunks carry anchors, retrieval doesn’t change: use the dense, hybrid, or reranker setup you already have. Consider hiding the anchors in the prose users read while keeping them in the output, and make the IDs clickable.
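
A minimal sketch of the display side, assuming a hypothetical anchor format `[p<page>:f<fragment>]` and a placeholder viewer URL: the anchors are stripped from the prose shown to the user and kept as de-duplicated Markdown links, so every claim stays traceable. `render_answer` is an illustrative name, not a library function.

```python
import re

# Hypothetical anchor format: "[p<page>:f<fragment>]", e.g. "[p3:f12]".
# Leading whitespace is consumed so removed anchors leave no stray spaces.
ANCHOR = re.compile(r"\s*\[p(\d+):f(\d+)\]")


def render_answer(answer: str, viewer_url: str) -> tuple[str, list[str]]:
    """Split an LLM answer into clean prose plus clickable anchor links.

    Links are returned in order of first appearance, without duplicates.
    """
    links: list[str] = []
    for page, frag in ANCHOR.findall(answer):
        link = f"[p{page}:f{frag}]({viewer_url}#page={page}&frag={frag})"
        if link not in links:
            links.append(link)
    prose = ANCHOR.sub("", answer)
    return prose, links


prose, links = render_answer(
    "Claim A is covered [p3:f12]. Claim B is excluded [p5:f2][p3:f12].",
    "https://example.com/viewer",
)
print(prose)  # Claim A is covered. Claim B is excluded.
```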

Iterate through page fragment objects and create appropriately sized chunks by combining them. As you create the chunks, you can create contextualized metadata to help during retrieval.
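
A minimal sketch of that loop, assuming fragments arrive in reading order with the fields Tensorlake reports for each page fragment (page number, fragment type, content, bounding box). The `Fragment` and `Chunk` classes, the `section_header` type label, and the character budget are assumptions; a token budget would size chunks more precisely.

```python
from dataclasses import dataclass, field


@dataclass
class Fragment:
    # Hypothetical field names modeled on the page-fragment layout data.
    page: int
    fragment_type: str  # e.g. "section_header", "text", "table"
    content: str
    bbox: tuple[float, float, float, float]


@dataclass
class Chunk:
    text: str
    metadata: dict = field(default_factory=dict)


def chunk_fragments(fragments: list[Fragment], max_chars: int = 1000) -> list[Chunk]:
    """Greedily merge consecutive fragments into chunks of at most ~max_chars.

    Every section header starts a new chunk. Each chunk records the pages it
    spans and the section it belongs to, as contextual metadata for retrieval.
    A single fragment longer than max_chars becomes its own chunk.
    """
    chunks: list[Chunk] = []
    parts: list[str] = []
    pages: list[int] = []
    section = None  # most recent section header seen
    chunk_section = None  # section in effect when the current chunk started

    def flush() -> None:
        if parts:
            chunks.append(Chunk(
                text="\n".join(parts),
                metadata={"pages": sorted(set(pages)), "section": chunk_section},
            ))
            parts.clear()
            pages.clear()

    for frag in fragments:
        if frag.fragment_type == "section_header":
            flush()
            section = frag.content
        # Length of the joined text if this fragment were appended.
        size = sum(len(p) + 1 for p in parts) + len(frag.content)
        if parts and size > max_chars:
            flush()
        if not parts:
            chunk_section = section
        parts.append(frag.content)
        pages.append(frag.page)
    flush()
    return chunks
```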

With our Document AI API, you get the full document layout. For each page fragment, you have access to the page number, fragment type, content, and bounding box, making it easy to add metadata and anchor points to chunks before embedding.
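
One way to attach those anchor points before embedding, sketched under assumptions: the fragment dictionary keys and the `p<page>:f<index>` anchor format are hypothetical, and the bounding box is flattened to a string because some vector stores accept only scalar metadata values.

```python
def to_embedding_records(doc_id: str, fragments: list[dict]) -> list[dict]:
    """Prefix each fragment with an anchor and keep its layout as metadata.

    Assumed input shape (hypothetical):
    {"page": int, "type": str, "content": str, "bbox": [x0, y0, x1, y1]}
    """
    records = []
    for i, frag in enumerate(fragments):
        anchor = f"p{frag['page']}:f{i}"
        records.append({
            "id": f"{doc_id}#{anchor}",
            # The anchor travels inside the embedded text, so an LLM can cite it.
            "text": f"[{anchor}] {frag['content']}",
            "metadata": {
                "doc_id": doc_id,
                "page": frag["page"],
                "fragment_type": frag["type"],
                # Flattened to a string for stores that reject list values.
                "bbox": ",".join(str(v) for v in frag["bbox"]),
            },
        })
    return records
```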

But flatten that data, as most parsers do, and trust is lost.

Tensorlake restores trust by preserving structure, generating summaries for effective embeddings, and attaching evidence via bounding boxes.

This is the beginning of better integration between Microsoft Azure and Tensorlake.

If you are using Azure and need better document ingestion and ETL for unstructured data, reach out to us!

Get citations for every field extracted with Tensorlake.

Read the blog and try our citations with the example notebooks: tlake.link/blog/citations

This is the fun part: use Tensorlake to extract key claims from news articles, then use your @langchain.bsky.social agent to query ChromaDB and determine whether the claims are rooted in fact.
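
The verification loop can be sketched without external services. To stay self-contained, this toy swaps ChromaDB's vector search and the agent's judgment for a plain lexical-overlap score; `check_claim` and the 0.6 threshold are illustrative assumptions, not the pipeline from our example.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def check_claim(claim: str, sources: list[str], threshold: float = 0.6) -> dict:
    """Toy fact check: find the source passage that best covers the claim.

    Coverage = share of the claim's tokens that also appear in a passage.
    This lexical score stands in for a vector-store query plus LLM judgment.
    """
    claim_tokens = tokens(claim)
    best_source, best_score = None, 0.0
    for source in sources:
        score = len(claim_tokens & tokens(source)) / max(len(claim_tokens), 1)
        if score > best_score:
            best_source, best_score = source, score
    return {
        "claim": claim,
        "verdict": "supported" if best_score >= threshold else "unverified",
        "evidence": best_source,
        "score": round(best_score, 2),
    }
```

A real pipeline would also distinguish "contradicted" from "unverified", which a lexical score cannot do.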

Don't rely on users to write queries that are specific to your data. Instead, contextualize each query so that hybrid search finds the most relevant and accurate chunks.

In our example, we used @langchain.bsky.social to help with this.
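
Below is a dependency-free sketch of both ideas, not the LangChain setup from our example. `contextualize_query` simply appends known context to a terse query (an LLM rewrite would do this more flexibly), and reciprocal rank fusion, one common way to merge keyword and dense result lists, combines the two rankings. The function names are assumptions; `k = 60` is the conventional default.

```python
def contextualize_query(query: str, context: dict[str, str]) -> str:
    """Append known context (e.g. which document set is in scope) to a terse query."""
    details = "; ".join(f"{key}: {value}" for key, value in context.items())
    return f"{query} ({details})" if details else query


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked ID lists: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


query = contextualize_query("what changed?", {"document": "2023 10-K", "section": "Risk Factors"})
keyword_hits = ["c1", "c2", "c3"]  # e.g. from BM25
dense_hits = ["c3", "c1", "c4"]    # e.g. from a vector index
print(reciprocal_rank_fusion([keyword_hits, dense_hits]))
```

Chunks that rank well in both lists (here `c1` and `c3`) rise to the top, which is the point of hybrid search.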

With clean, structured, and accurate data you can chunk and embed your documents in a way that is effectively and accurately retrievable by agents.

In our example, we used @chonkieai.bsky.social and ChromaDB.

With a single API call, you can turn messy PDFs into page-aware, table-preserving, structured context. Simply:

1. Define page classes for different documents

2. Define schemas to extract relevant data

3. Parse
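
To make the first two steps concrete, here is a local sketch of how page classes and per-class schemas fit together. The class names, keywords, and JSON Schemas are hypothetical and are not Tensorlake's request format; the keyword matching only stands in for real page classification. The idea it shows is that each schema runs only on pages of its class.

```python
# Hypothetical page classes, each identified by keywords that must all appear.
PAGE_CLASSES = {
    "claim_form": ["claim number", "date of loss"],
    "invoice": ["invoice", "amount due"],
}

# Hypothetical JSON Schemas describing what to extract from each class.
SCHEMAS = {
    "claim_form": {
        "type": "object",
        "properties": {
            "claim_number": {"type": "string"},
            "date_of_loss": {"type": "string"},
        },
    },
    "invoice": {
        "type": "object",
        "properties": {
            "invoice_number": {"type": "string"},
            "amount_due": {"type": "number"},
        },
    },
}


def classify_pages(pages: list[str]) -> dict[str, list[int]]:
    """Group 1-based page numbers under the first class whose keywords all appear."""
    groups: dict[str, list[int]] = {}
    for number, text in enumerate(pages, start=1):
        lowered = text.lower()
        for name, keywords in PAGE_CLASSES.items():
            if all(keyword in lowered for keyword in keywords):
                groups.setdefault(name, []).append(number)
                break
    return groups


def extraction_plan(pages: list[str]) -> list[dict]:
    """Pair each detected page class with its schema; unclassified pages are skipped."""
    return [
        {"page_class": name, "pages": numbers, "schema": SCHEMAS[name]}
        for name, numbers in classify_pages(pages).items()
    ]
```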

What’s dead is cosine‑N without a retrieval plan.

We ship advanced RAG...out of the box:

• Classify pages → target sections

• Extract structured fields → filter by form_type, fiscal_period

• Verify data; cite page/bbox

Want to know how? 🧵👇
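
The plan above, reduced to a dependency-free sketch: filter on extracted structured fields first, rank only the survivors by cosine similarity, and return the page and bounding box with each hit. The `IndexedChunk` fields and `planned_search` are assumptions for illustration, not Tensorlake's API.

```python
import math
from dataclasses import dataclass


@dataclass
class IndexedChunk:
    text: str
    vector: list[float]
    form_type: str      # assumed extracted field, e.g. "10-K"
    fiscal_period: str  # assumed extracted field, e.g. "FY2023"
    page: int
    bbox: tuple[float, float, float, float]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def planned_search(query_vec: list[float], chunks: list[IndexedChunk],
                   form_type: str, fiscal_period: str, top_n: int = 3) -> list[dict]:
    """Filter by structured fields, then rank the remaining chunks by cosine similarity."""
    candidates = [
        c for c in chunks
        if c.form_type == form_type and c.fiscal_period == fiscal_period
    ]
    ranked = sorted(candidates, key=lambda c: cosine(query_vec, c.vector), reverse=True)
    # Each hit carries its citation so the answer can point back to the source.
    return [
        {"text": c.text, "citation": {"page": c.page, "bbox": c.bbox}}
        for c in ranked[:top_n]
    ]
```

Filtering before ranking means a near-duplicate passage from the wrong filing or period can never outrank the right one.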

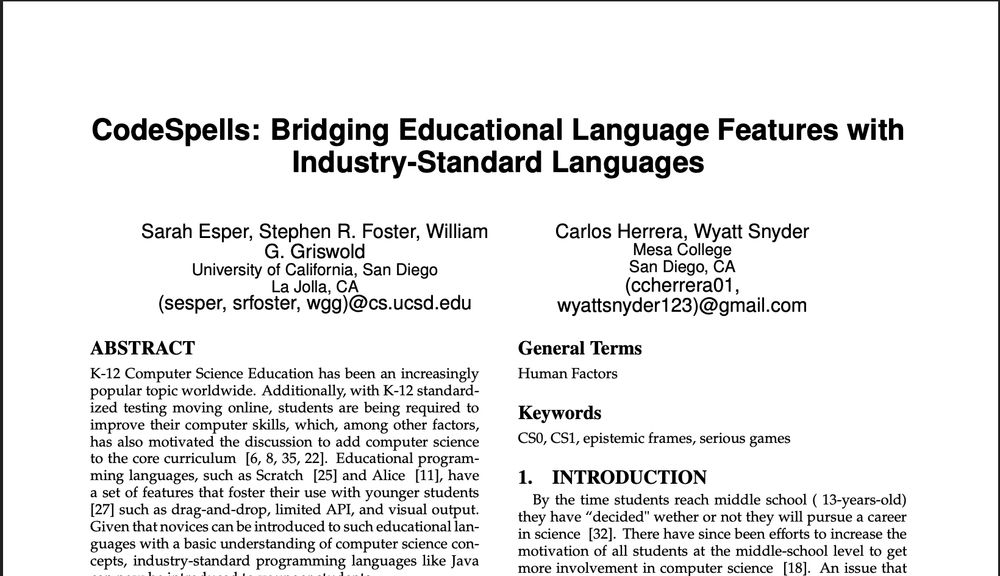

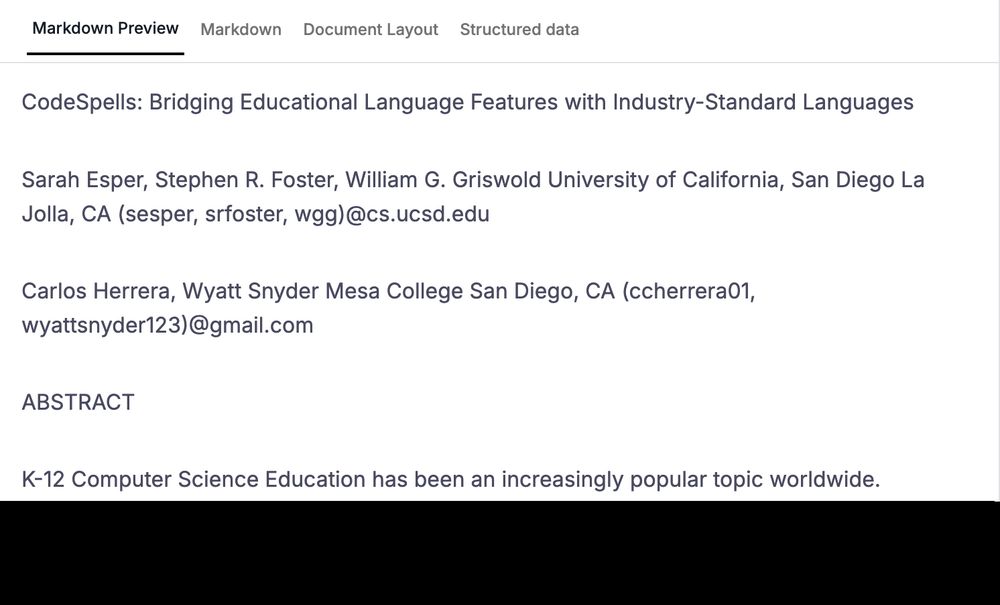

multiple columns, fragmented text blocks, mixed reading order

Tensorlake doesn't.

✅ Authors parsed as one clean chunk

✅ Abstract follows, exactly as it should

Unstructured ≠ unordered

Preserve reading order. Parse with Tensorlake.
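
For intuition, here is a simplified reading-order heuristic, not Tensorlake's method: blocks that span columns (a title or author line) are read in place, and the blocks between them are read column by column, top to bottom. It assumes a fixed number of equal-width columns and a top-left coordinate origin; `Block` and `reading_order` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Block:
    text: str
    x0: float
    y0: float  # top edge; assumes y grows downward (top-left origin)
    x1: float
    y1: float


def reading_order(blocks: list[Block], page_width: float, columns: int = 2) -> list[str]:
    """Return block texts in column-major reading order.

    Any block wider than one column is emitted in place and splits the page
    into bands; blocks within a band are sorted by column, then by top edge.
    """
    col_width = page_width / columns
    ordered: list[str] = []
    band: list[Block] = []

    def flush_band() -> None:
        # Column index comes from the block's horizontal center.
        band.sort(key=lambda b: (int(((b.x0 + b.x1) / 2) // col_width), b.y0))
        ordered.extend(b.text for b in band)
        band.clear()

    for block in sorted(blocks, key=lambda b: b.y0):
        if block.x1 - block.x0 > col_width:  # spans more than one column
            flush_band()
            ordered.append(block.text)
        else:
            band.append(block)
    flush_band()
    return ordered
```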

Huge thanks to everyone supporting Tensorlake 🎉

From devs wrangling PDFs to teams automating high-stakes workflows.

If you haven’t yet, check us out 👇

If you’ve dealt with messy document workflows or tried to parse complex documents (insurance claims, financial docs, multi-page forms), this is for you.

Would love your support 💚

www.producthunt.com/products/te...
