Apply by December 15. More info and application form available here:

fas.org/career/senio...

AI bias is real & measurable. Yet the plan tells NIST to drop “diversity, equity & inclusion” from its AI Risk Mgmt Framework and requires that federal models be “free from ideological bias.” A lot depends on implementation, but this is hiding real problems.

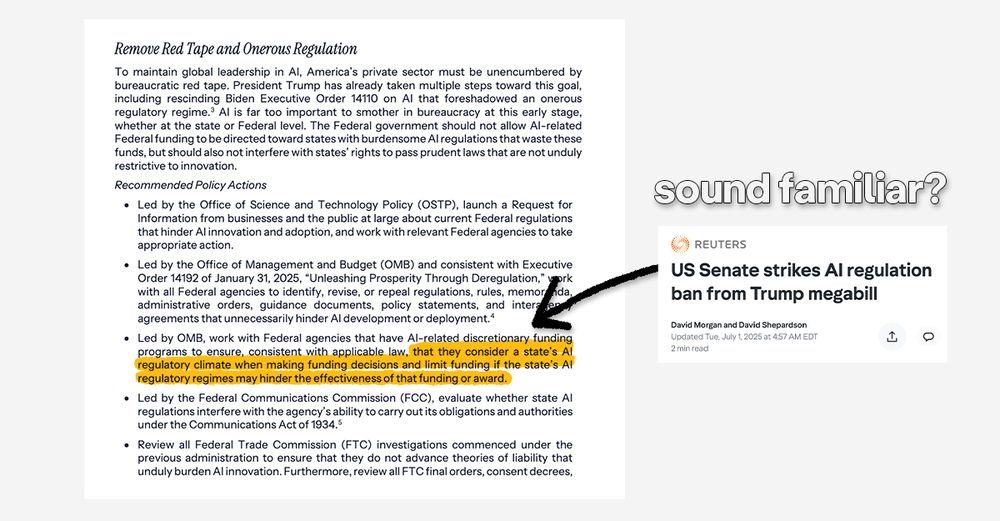

Last month the Senate stripped a clause from OBBBA that would have restricted state AI rules. The plan tries again to block state guardrails even as Congress sets no federal standard.

➡️ Interpretability: We need to see inside AI's black box. With FAS AI Fellow Matteo Pistillo, we've drafted a federal roadmap to advance AI interpretability: fas.org/publication/...
