huggingface.co/spaces/econo...

The share of models disclosing training data fell from ~79% (2022) to ~39% (2025). And for the first time, open-weights models outpace truly open-source ones, raising accountability and reproducibility concerns.

Community repos that quantize, repack, and adapt base models now move a large fraction of real-world usage.

MLX-Community, SD Concepts Library, LMStudio-Community, and others are consolidating models for deployment & artistic adaptation.

Average downloaded model size rose 17×, alongside 7× growth in MoE adoption and 5× growth in quantization adoption; multimodal & video downloads grew ~3.4×.

The dominance of US big tech (Google/Meta/OpenAI) has dissipated while community/unaffiliated devs surged, and Chinese industry (DeepSeek, Qwen) now commands a major share, hinting at a new consolidation wave among open-weights models.

Our new study, Economies of Open Intelligence, maps downloads of 851k models on @hf.co from 2020→2025.

1) Power rebalance: US tech ↓; China + community ↑

2) Model size & efficiency ↑ (MoE, quant, multimodal)

3) Intermediary layers ↑ (adapters/quantizers)

4) Transparency ↓

/🧵

🌟Answer: We found compute-optimal crossover points for every model size.

Rough rule of thumb: finetune if your compute budget C < 10^10 × N^1.54 (N = model size); otherwise, pretrain.

8/
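The rule of thumb above is simple enough to encode directly. A minimal sketch in Python, assuming C and N are in whatever units the study used (the thread doesn't state them; FLOPs and parameter count are only a guess). The function names are hypothetical.

```python
def crossover_compute(model_size: float) -> float:
    """Compute budget C* = 1e10 * N**1.54 at which the rule of thumb flips."""
    return 1e10 * model_size ** 1.54


def should_finetune(compute_budget: float, model_size: float) -> bool:
    """True if the rule of thumb favors finetuning from a checkpoint over pretraining.

    Assumption: compute_budget and model_size use the same units as the study
    (not stated in the thread).
    """
    return compute_budget < crossover_compute(model_size)


if __name__ == "__main__":
    n = 1e9  # hypothetical model size
    print(f"crossover ~ {crossover_compute(n):.2e}")
    print(should_finetune(1e23, n), should_finetune(1e24, n))
```

For N = 1e9 the crossover lands near 7.2e23, so under the same unit assumptions a budget of 1e23 points to finetuning and 1e24 to pretraining. Because the exponent is above 1, the crossover budget grows faster than linearly with model size.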

🌟Answer: We derived closed-form equations! To go from K to 4K languages while maintaining performance: scale data by 2.74×, model size by 1.4×.

6/
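One way to read these multipliers is to scale a base configuration and check what it implies for total training compute. A minimal sketch, assuming the common C ≈ 6·N·D approximation (not from the thread) and a hypothetical `TrainingConfig` type; only the 2.74× and 1.4× factors come from the post above, and the base numbers are made up.

```python
from dataclasses import dataclass, replace

# Multipliers quoted in the thread for going from K to 4K languages
DATA_MULTIPLIER = 2.74
MODEL_MULTIPLIER = 1.4


@dataclass(frozen=True)
class TrainingConfig:  # hypothetical container, for illustration only
    num_languages: int
    params: float  # model size N
    tokens: float  # training data D


def scale_to_4x_languages(cfg: TrainingConfig) -> TrainingConfig:
    """Apply the K -> 4K multipliers to model size and data."""
    return replace(
        cfg,
        num_languages=cfg.num_languages * 4,
        params=cfg.params * MODEL_MULTIPLIER,
        tokens=cfg.tokens * DATA_MULTIPLIER,
    )


def approx_compute(cfg: TrainingConfig) -> float:
    # Assumption: C ~ 6*N*D, a standard estimate for dense transformer training
    return 6 * cfg.params * cfg.tokens


if __name__ == "__main__":
    base = TrainingConfig(num_languages=25, params=2e9, tokens=100e9)  # hypothetical
    scaled = scale_to_4x_languages(base)
    print(scaled.num_languages, approx_compute(scaled) / approx_compute(base))
```

Under that assumption, quadrupling the language count costs roughly 2.74 × 1.4 ≈ 3.84× the compute, i.e. slightly less than 4×.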

Languages sharing writing systems (e.g., Latin) show dramatically better transfer (mean: -0.23) vs different scripts (mean: -0.39).

Also important: transfer is often asymmetric—A helping B ≠ B helping A.

5/

🌟Answer: We measure this empirically. We built a 38×38 transfer matrix, or 1,444 language pairs—the largest such resource to date.

We highlight the top 5 most beneficial source languages for each target language.

4/
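Picking the top 5 sources per target amounts to ranking each column of the transfer matrix. A minimal sketch, assuming `matrix[s][t]` is the score for source s helping target t, that higher is better (consistent with -0.23 beating -0.39 above), and that self-pairs are skipped. The function name and the toy scores are hypothetical, not data from the study.

```python
from typing import Dict, List, Sequence


def top_k_sources(
    matrix: Sequence[Sequence[float]],
    languages: Sequence[str],
    k: int = 5,
) -> Dict[str, List[str]]:
    """For each target language, return the k source languages with the highest score.

    Assumes matrix[s][t] = transfer from source s to target t (higher is better)
    and excludes self-transfer (s == t).
    """
    n = len(languages)
    result: Dict[str, List[str]] = {}
    for t in range(n):
        candidates = [s for s in range(n) if s != t]
        # Rank sources by how much they help target t, best first
        candidates.sort(key=lambda s: matrix[s][t], reverse=True)
        result[languages[t]] = [languages[s] for s in candidates[:k]]
    return result


if __name__ == "__main__":
    langs = ["en", "de", "zh"]
    toy = [
        [0.0, -0.1, -0.5],  # source en
        [-0.2, 0.0, -0.4],  # source de
        [-0.6, -0.3, 0.0],  # source zh
    ]
    print(top_k_sources(toy, langs, k=1))  # {'en': ['de'], 'de': ['en'], 'zh': ['de']}
```

Since transfer is asymmetric, the indexing direction matters: reading row t instead of column t would answer "which targets does t help most?", a different question.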

Without modeling transfer, existing scaling laws fail in multilingual settings.

3/

🌟Answer: Yes! ATLAS outperforms prior work with R²(N)=0.88 vs 0.68, and R²(M)=0.82 vs 0.69 for mixture generalization.

2/
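Assuming R² here is the standard coefficient of determination (the thread defines neither the metric nor the held-out splits behind R²(N) and R²(M)), it measures how much of the variance in the observed values a fitted law explains. A minimal sketch:

```python
from typing import Sequence


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) == 0 or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and equal in length")
    mean = sum(y_true) / len(y_true)
    # Residual sum of squares: error left over after the fit
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    # Total sum of squares: spread of the observations around their mean
    ss_tot = sum((y - mean) ** 2 for y in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all observed values are equal")
    return 1.0 - ss_res / ss_tot
```

Because R² is relative to a predict-the-mean baseline, 0.88 vs 0.68 means the residual error shrinks from 32% to 12% of the total variance.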

🌍 Does scaling differ by language?

🧙‍♂️ Can we model the curse of multilinguality?

⚖️ Pretrain vs. finetune from a checkpoint?

🔀 Cross-lingual transfer scores across languages?

1/🧵

BigGen Bench introduces fine-grained, scalable, & human-aligned evaluations:

📈 77 hard, diverse tasks

🛠️ 765 examples w/ example-specific rubrics

📋 More human-aligned than previous rubrics

🌍 10 languages, by native speakers

1/

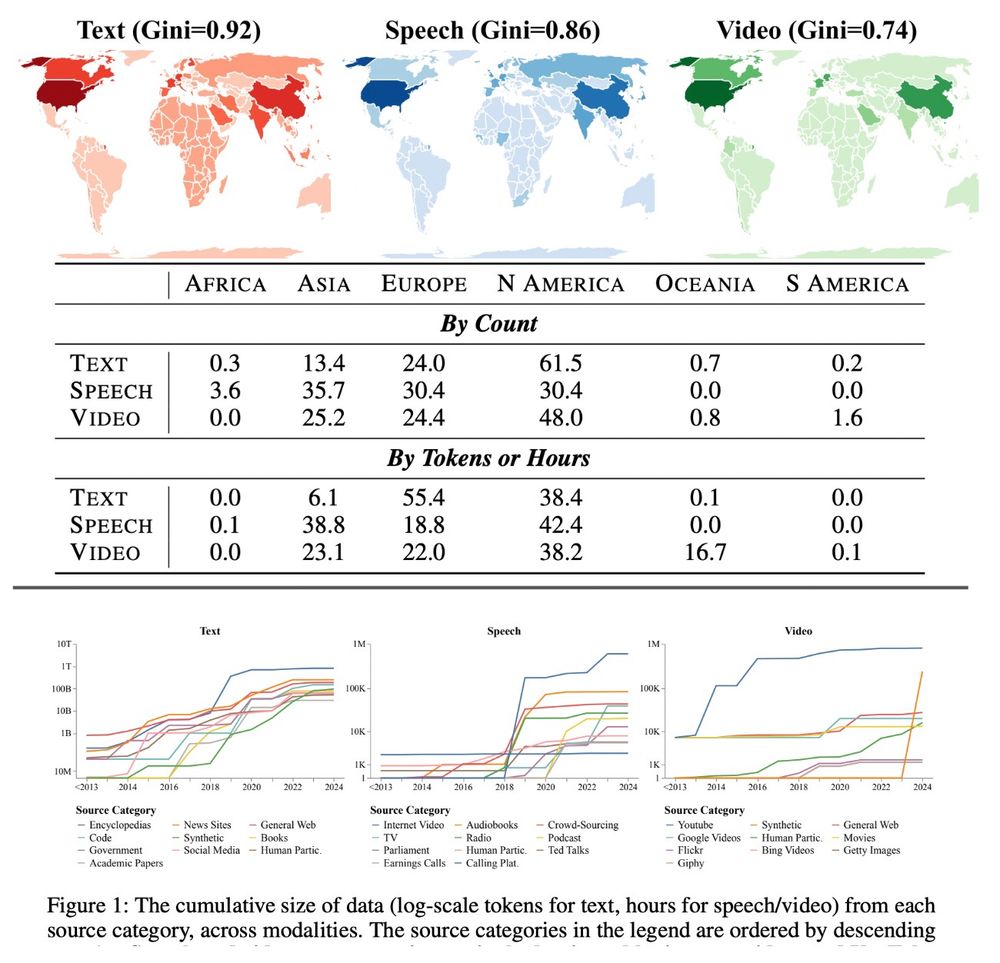

Empirically, it shows:

1️⃣ Soaring synthetic text data: ~10M tokens (pre-2018) to 100B+ (2024).

2️⃣ YouTube is now 70%+ of speech/video data but could block third-party collection.

3️⃣ <0.2% of data from Africa/South America.

1/

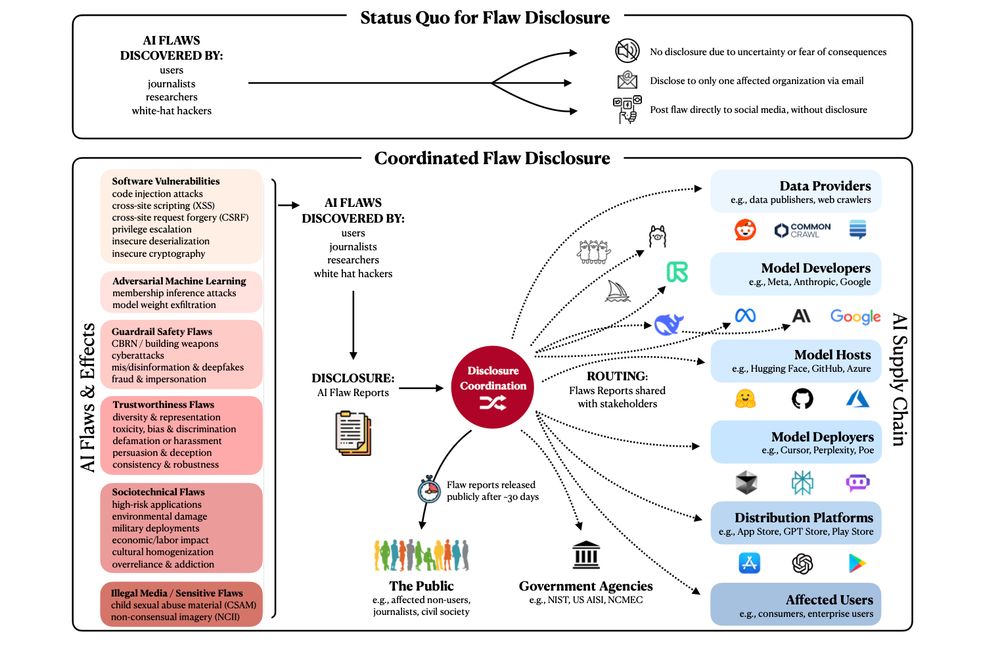

For transferable AI flaws (e.g., jailbreaks affecting multiple systems), we need to inform all relevant developers and stakeholders who must act to mitigate these issues.

See the Figure to understand the before and after of flaw disclosure.

6/

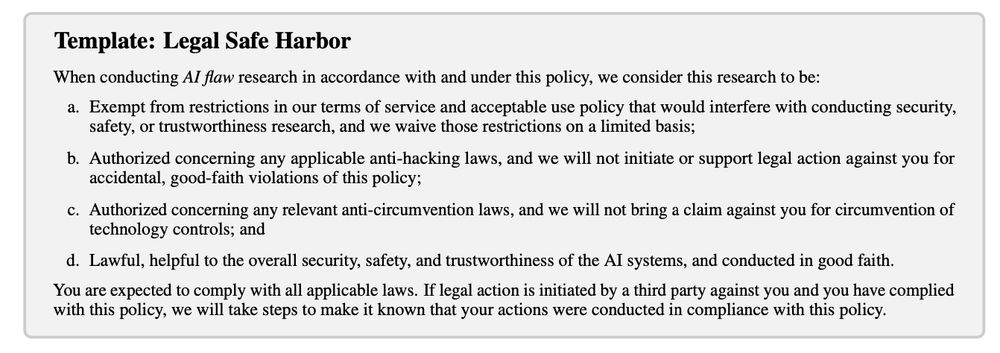

Security-only or invite-only bug bounties from OpenAI and Anthropic are a great start.

But eventually we need disclosure programs that cover the full range of AI issues and protect independent researchers.

5/

1️⃣ Adoption of standardized AI flaw reports to improve flaw reproducibility, triaging, coordination across stakeholders, and ultimately AI safety.

4/

Today, GPAI serves 300M+ users globally, w/ diverse & unforeseen uses across modalities and languages.

➡️ We need third-party evaluation for its broad expertise, participation, and independence, including from real users, academic researchers, white-hat hackers, and journalists.

2/

Our new paper, “In House Evaluation is Not Enough,” has 3 calls to action to empower evaluators:

1️⃣ Standardized AI flaw reports

2️⃣ AI flaw disclosure programs + safe harbors.

3️⃣ A coordination center for transferable AI flaws.

1/🧵

We’re presenting the state of transparency, tooling, and policy, from the Foundation Model Transparency Index, Factsheets, and the EU AI Act to new frameworks like @MLCommons’ Croissant.

1/

➡️ why is copyright an issue for AI?

➡️ what is fair use?

➡️ why are memorization and generation important?

➡️ how does it impact the AI data supply / web crawling?

🧵

Increasingly, they block or charge all non-human traffic, not just AI crawlers.

3/
