Stephanie Chan

@scychan.bsky.social

Staff Research Scientist at Google DeepMind. Artificial and biological brains 🤖 🧠

It was such a pleasure to co-supervise this research, but @aaditya6284.bsky.social should really take the bulk of the credit :)

And thank you so much to all our wonderful collaborators, who made fundamental contributions as well!

Ted Moskovitz, Sara Dragutinovic, Felix Hill, @saxelab.bsky.social

March 11, 2025 at 6:18 PM

This paper is dedicated to our collaborator Felix Hill, who passed away recently. This is our last ever paper with him.

It was bittersweet to finish this research, which contains so much of the scientific spark that he shared with us. Rest in peace, Felix, and thank you so much for everything.

March 11, 2025 at 6:18 PM

Some general takeaways for interp:

March 11, 2025 at 6:18 PM

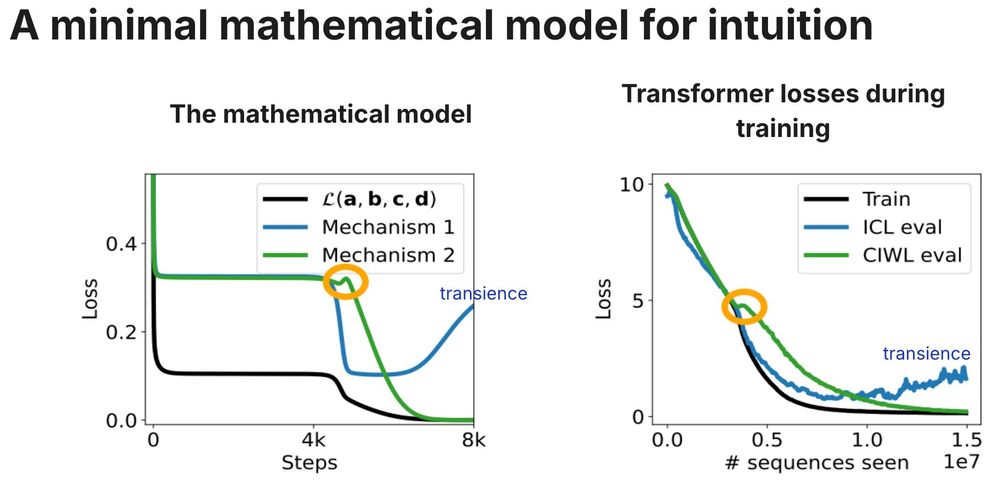

4. We provide intuition for these dynamics through a simple mathematical model.

March 11, 2025 at 6:18 PM

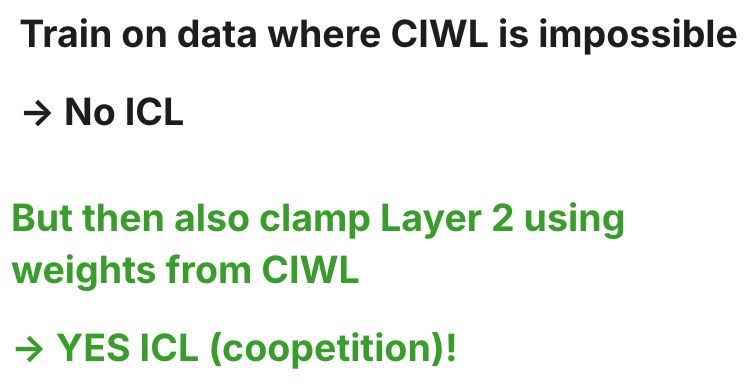

3. A lot of previous work (including our own) has emphasized *competition* between in-context and in-weights learning.

But we find that cIWL and ICL actually compete AND cooperate, via shared subcircuits. In fact, ICL cannot emerge if cIWL is blocked from emerging, even though ICL emerges first!

March 11, 2025 at 6:18 PM

2. At the end of training, ICL doesn't give way to in-weights learning (IWL), as we previously thought. Instead, the model prefers a surprising strategy that is a *combination* of the two!

We call this combo "cIWL" (context-constrained in-weights learning).

March 11, 2025 at 6:18 PM

1. We aimed to better understand the transience of in-context learning (ICL), where ICL can emerge but then disappear after long training times.

March 11, 2025 at 6:18 PM

Dropping a few high-level takeaways in this thread.

For more details please see Aaditya's thread, or the paper itself.

bsky.app/profile/aadi...

arxiv.org/abs/2503.05631

March 11, 2025 at 6:18 PM

Reposted by Stephanie Chan

Introducing the :milkfoamo: emoji

December 9, 2024 at 8:12 PM

Hahaha. We need a cappuccino emoji?!

December 9, 2024 at 4:56 PM