We've partnered with @datacamp.bsky.social to create an interactive course that covers the fundamentals so you can write your next query with Polars.

The course is free until the end of August: www.datacamp.com/courses/intr...

Learn the fundamentals and get familiar with our API through hands-on exercises. The course is available to everyone and free until the end of August.

Start the free course here: www.datacamp.com/courses/intr...

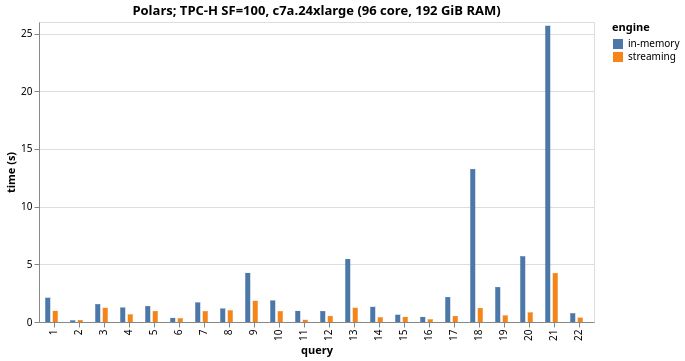

In recent months, the team has worked hard on the new streaming engine, and the results are paying off. It is very fast and beats the Polars in-memory engine by a factor of 4 on a 96-vCPU machine.

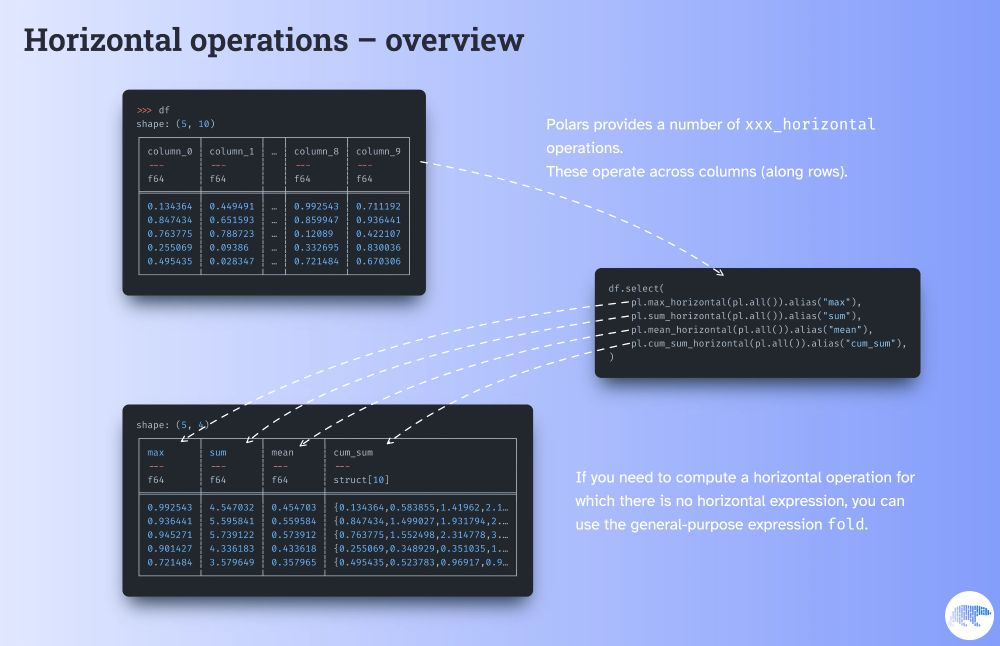

Horizontal expressions perform computations across columns (or along rows, depending on how you look at it).

If the horizontal operation you need isn’t implemented, you can use the general-purpose `fold`.

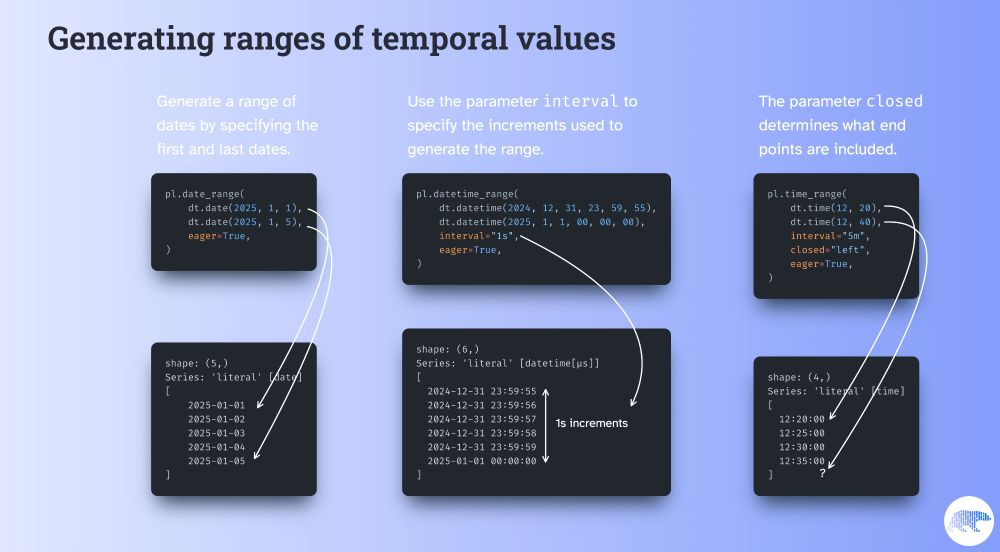

Polars provides three functions for creating temporal ranges: `date_range`, `datetime_range`, and `time_range`.

These can be executed eagerly or lazily.

You can also customize the interval between consecutive values and whether the start/end points are included.

This means you can do computations per group without having to group first and explode afterwards.

In this example, we rank swimmers by their time within each race type.

Within an aggregation, you can also use `filter` to drop values from each group before they are aggregated.

In this example, we ignore unverified times when computing the current record.

You provide a lower and an upper bound, and Polars makes sure all values fall within those bounds.

Values below the lower bound are replaced by the lower bound, and values above the upper bound are replaced by the upper bound.

Bounds can be literals, other columns, or arbitrary expressions.

Sign up at info.nvidia.com/nvidia-polar...

See you there?

This means that inequality joins are now more flexible than ever!

Here is a small example of something you couldn't do before:

To use it, you specify a date or datetime column to group by and then define the windows over which values are aggregated.

Note how data points can fall within multiple windows 👇

(Me neither!)

Memorise this Polars snippet instead.

Using some calendar-aware functions, we can get the answer in a tidy dataframe, as the diagram below shows.

Polars lets you do that with `join_where`, which supports inequality joins through the use of inequality predicates.

Here's an example 👇

If you are unsure what each type is, the conversion table below might help you.

Each Polars data type is presented next to the **most similar** Python type.

This comes straight from our Discord server (discord.com/invite/4UfP5...)

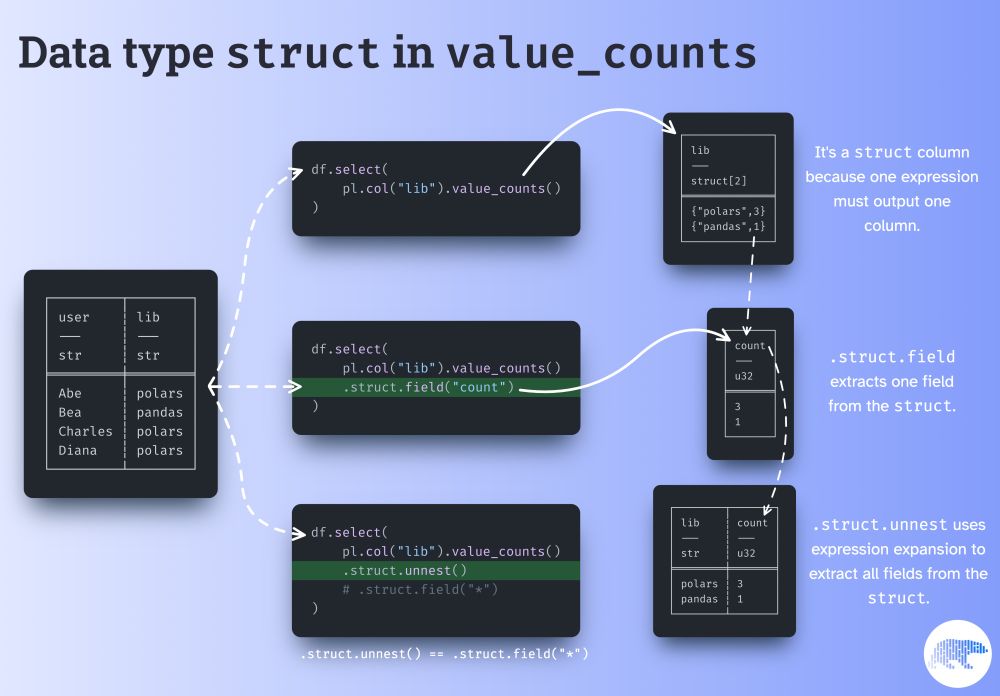

A single expression produces a single column, so expressions like `value_counts` need to output structs to map the values to their counts.

With that said, do you understand why `.struct.unnest` doesn't break the 1 expr = 1 column principle?