💻https://github.com/apple/ml-lineas

📄https://arxiv.org/abs/2503.10679

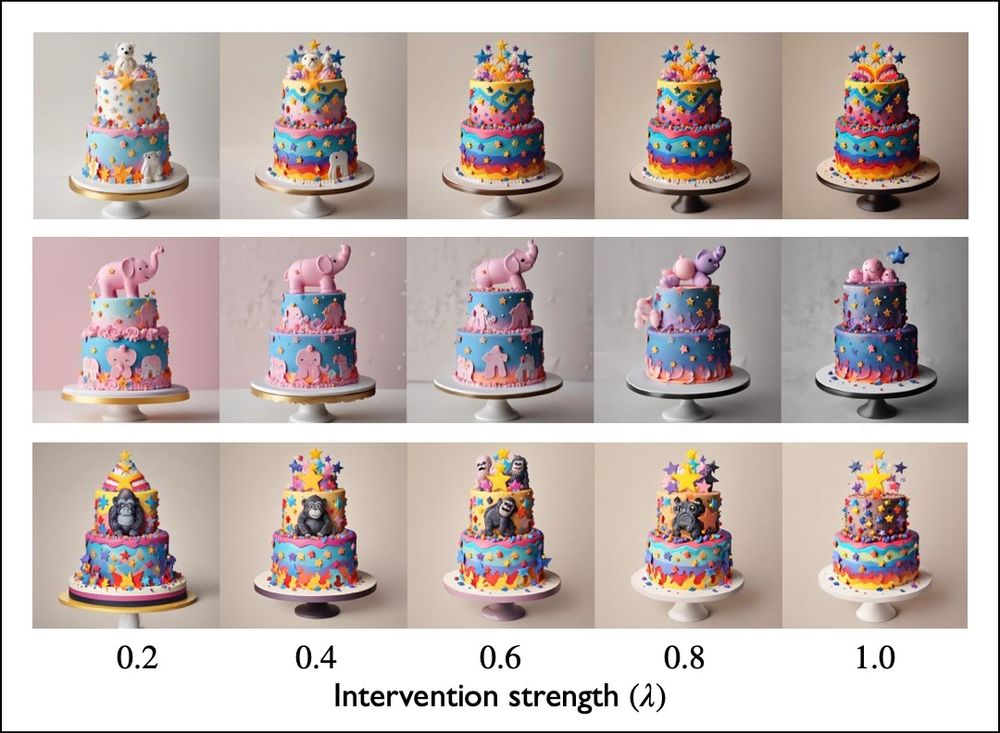

In the image, Stable Diffusion XL is prompted with: “2 tier cake with multicolored stars attached to it and no {white bear, pink elephant, gorilla} can be seen.”

✨Linear-AcT makes the negated concept disappear✨

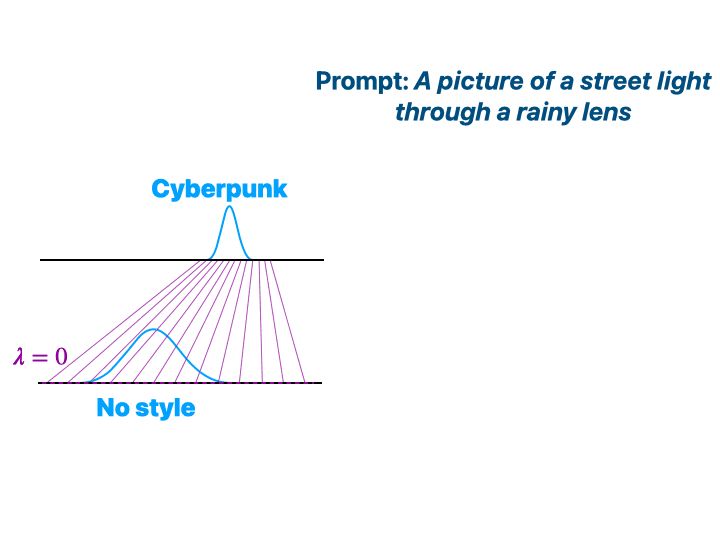

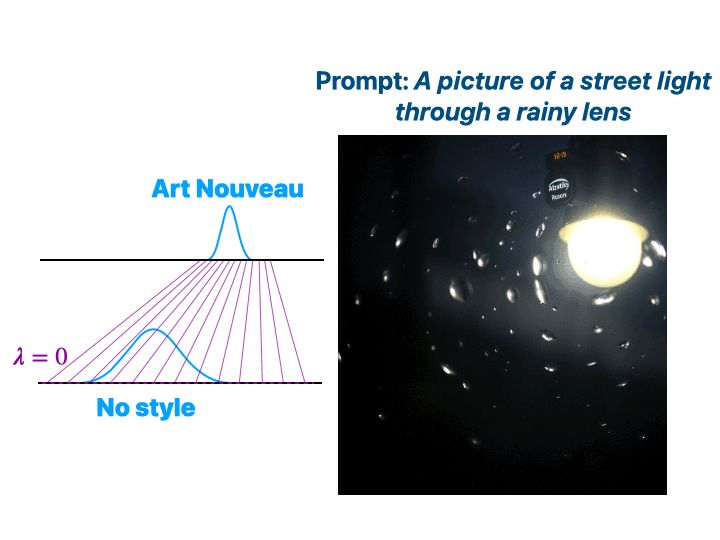

In this example, we induce a specific style (Art Nouveau 🎨), whose strength we can accurately control with our λ parameter.

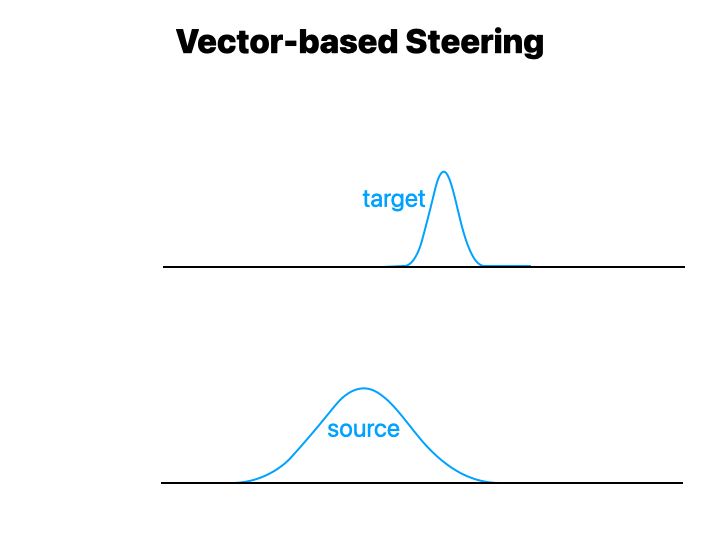

And, unlike with vector-based steering methods, the best result is always obtained at λ=1!
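For intuition, here is one way to write the λ knob. This is a sketch under assumptions, not a quote from the paper: we assume λ linearly interpolates between an original activation $a$ and its transported version $T(a)$, with $T$ affine per dimension ($\omega$, $\beta$ are per-dimension coefficients), and we use a difference-of-means vector $v$ as an assumed example of an additive steering baseline:

$$
T_\lambda(a) = (1-\lambda)\,a + \lambda\,T(a), \qquad T(a) = \omega \odot a + \beta, \qquad \lambda \in [0, 1]
$$

$$
\text{additive steering: } \; a + \lambda\,v, \qquad v = \bar{b} - \bar{a}
$$

Under this form, λ=1 applies exactly the estimated map, so steered activations land on (the linear approximation of) the target distribution. An additive vector has no such built-in endpoint, so its useful strength has to be tuned case by case.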

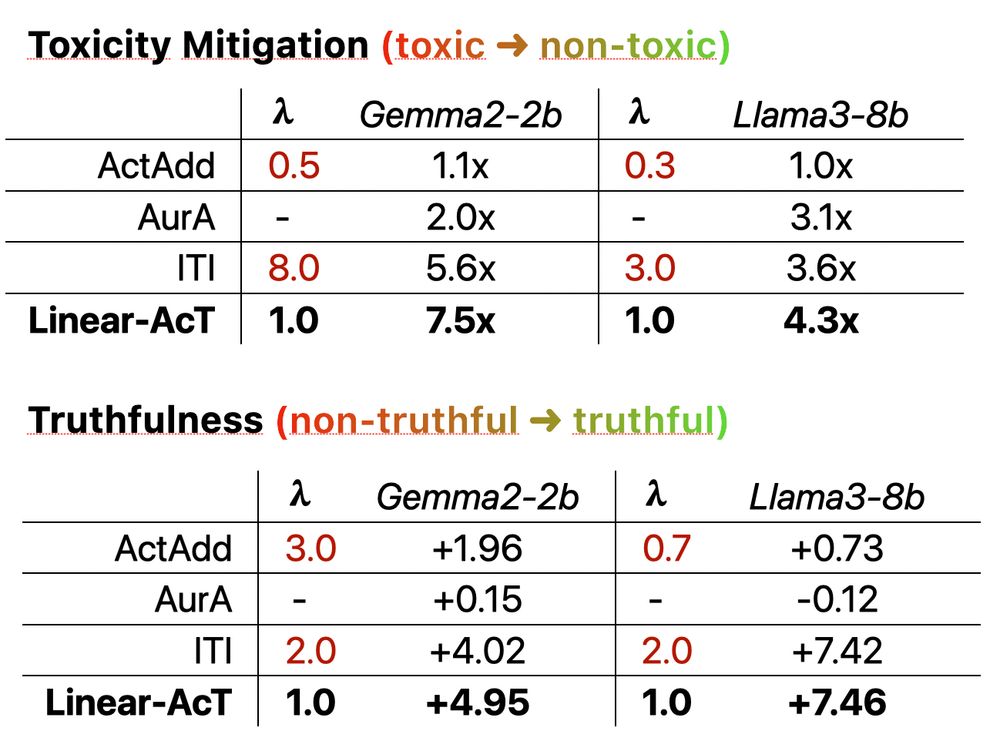

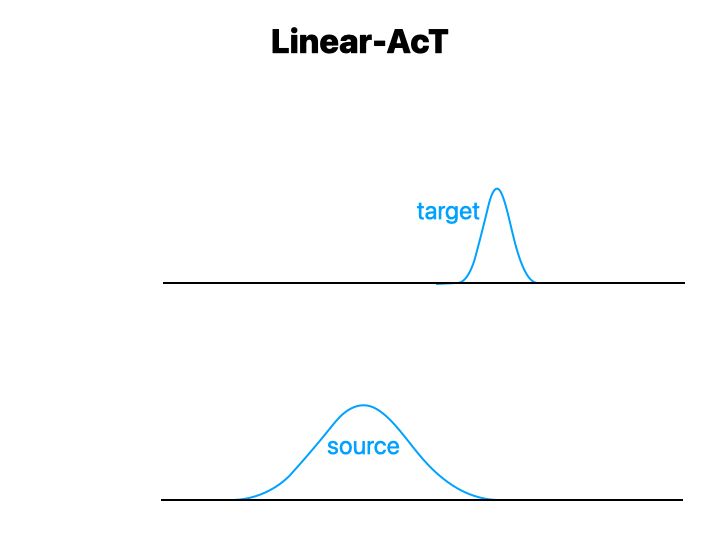

🍰 All we need are two small sets of sentences, {a} and {b}, from the source and target distributions to estimate the Optimal Transport (OT) map between their activations 🚚

🚀 We linearize the map for speed and memory efficiency, hence ⭐Linear-AcT⭐
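A minimal NumPy sketch of the estimate-then-linearize idea, assuming activations are transported independently per dimension. Between two equal-size 1-D samples, OT pairs sorted values with sorted values, and a least-squares line through those pairs gives a per-dimension affine map ω·a + β. The function names `fit_linear_transport` and `apply_transport`, the equal sample counts, and the synthetic Gaussian "activations" are all hypothetical; this is not the code in ml-act.

```python
import numpy as np


def fit_linear_transport(src: np.ndarray, tgt: np.ndarray):
    """Fit one affine map per activation dimension (hypothetical helper).

    src, tgt: arrays of shape (n, d) with activations collected on the
    source and target sentence sets (same n assumed for simplicity).
    Returns (omega, beta), each of shape (d,).
    """
    # For equal-size 1-D samples, OT pairs the k-th smallest source value
    # with the k-th smallest target value, so sorting each column gives
    # the transport pairing dimension by dimension.
    a = np.sort(src, axis=0)
    b = np.sort(tgt, axis=0)
    # Least-squares fit of b ~ omega * a + beta, independently per column.
    a_mean, b_mean = a.mean(axis=0), b.mean(axis=0)
    a_c, b_c = a - a_mean, b - b_mean
    var = (a_c ** 2).sum(axis=0)
    # Guard constant columns: fall back to a pure shift (omega = 1).
    omega = np.where(var > 0, (a_c * b_c).sum(axis=0) / np.maximum(var, 1e-12), 1.0)
    beta = b_mean - omega * a_mean
    return omega, beta


def apply_transport(x: np.ndarray, omega: np.ndarray, beta: np.ndarray, lam: float = 1.0):
    """Interpolate between x (lam=0) and the fully transported x (lam=1)."""
    return (1.0 - lam) * x + lam * (omega * x + beta)


# Synthetic stand-ins for activations from the {a} and {b} sentence sets.
rng = np.random.default_rng(0)
src = rng.normal(0.0, 1.0, size=(256, 4))
tgt = rng.normal(3.0, 2.0, size=(256, 4))

omega, beta = fit_linear_transport(src, tgt)
moved = apply_transport(src, omega, beta, lam=1.0)
print(moved.mean(axis=0), moved.std(axis=0))
```

With λ=1 the column means of `moved` match those of `tgt` exactly, because a least-squares line always passes through the point of means; λ=0 returns `src` unchanged. Storing only two numbers per dimension is what makes the linearized map cheap in speed and memory.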

@Apple

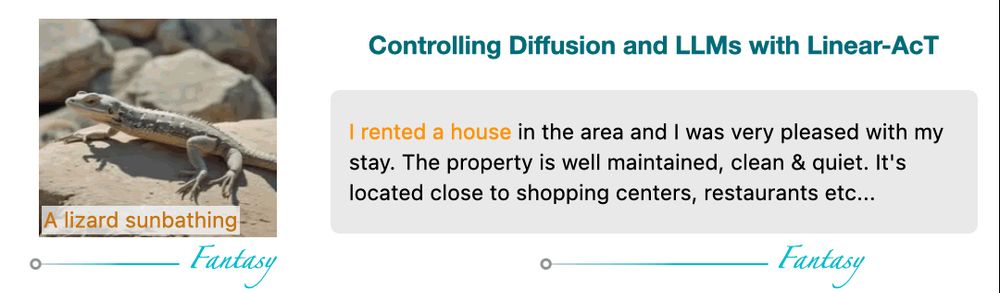

where we achieve interpretable and fine-grained control of LLMs and Diffusion models via Activation Transport 🔥

📄 arxiv.org/abs/2410.23054

🛠️ github.com/apple/ml-act

0/9 🧵