Re mitigation: we find that showing users contrastive explanations—reasoning both why a claim may be true and why it may be false—helps counter over-reliance to some extent.

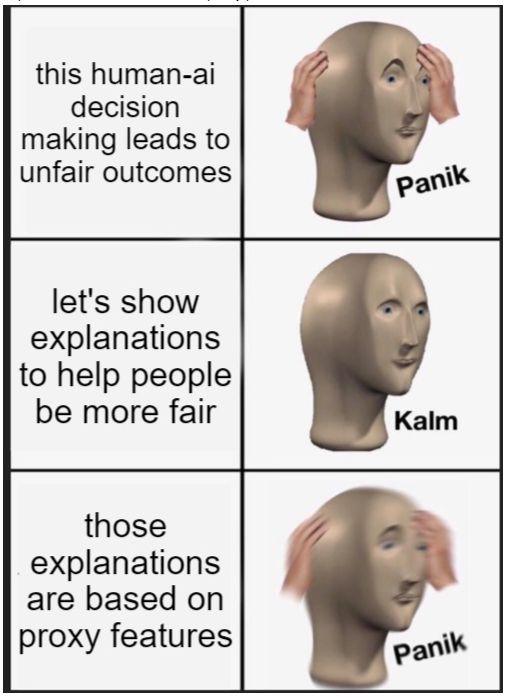

Despite hopes that explanations improve fairness, we see that when biases are hidden behind proxy features, explanations may not help.

Navita Goyal, Connor Baumler +al IUI’24

hal3.name/docs/daume23...

Should one use chatbots or web search to fact check? Chatbots help more on avg, but people uncritically accept their suggestions much more often.

by Chenglei Si +al NAACL’24

hal3.name/docs/daume24...
